Introduction

Basically, I grew up networking, starting with 2400 baud modems and Token Ring. By the time I was eleven, I was copying documents from paper files to electronic and networking the local ward offices (Chicago Democratic Machine). And by the time I was old enough to vote I felt like I had done it all…

…which left me completely unprepared when I was hired to create a network for a film post-production company.

They say everybody in the entertainment industry winds up in Hollywood sooner or later. I never did, so I suppose everybody else must eventually wind up in Park City, Utah, the site of the Sundance Film Festival. I had worked on a post-production project that eventually wound up on a Trauma Films DVD, so I had come down to watch the debut. Since I was never averse to making a little money while on vacation, I responded to an ad by one of the numerous production companies that spring up in that part of the mountains every year.

Although I had little experience in the field of large-scale network multimedia, I got the job largely because every other network engineer in town had been snapped up by the big production companies. Those companies had already decided to sign a number of the independent films to distribution contracts and were constructing temporary facilities to help promote them.

By this point, I already had a good six years’ worth of professional experience under my belt, working on networks as large as that of the United Postal Service. But it only took one glance across the room to tell me that this would be a very different gig indeed. The building resembled nothing more closely than a giant, empty airport hangar that a bunch of set designers had already been hard at work disguising to look like something out of the movie Studio 54… or perhaps Rolling Stone Magazine circa 1969.

The post-production crews already had their workstations rolling in on pallets carried by forklifts. Power was on, and the PR and accounting departments were more or less up and running on a series of couches and tables, where everyone was working from laptops and already eyeing me as they complained about the lack of Internet access. Network storage units were being wheeled in one by one, and as soon as I had signed my contract, the forklift operators began walking up to me asking where everything should go.

“Where” indeed?

Decide and Conquer

Everything seemed to be happening so fast (yet working so effectively in its own odd way) that I figured I might as well jump right in. The “office” personnel (accounting, PR, legal, etc.) seemed to be an immediate priority, but I also wanted to get the video editors and graphic design artists up and running. So the first question I had to answer was: “What kind of a network am I going to build?”

Given the M.A.S.H. “mobile headquarters” feel of the place, and the fact that the majority of the office personnel were using, and would continue to use, laptops for the remainder of their stay in this rented warehouse, I decided that wireless networking would play an important role in the design. I also had to take the high volume of large files being transferred over the network into consideration. Given the number of workstations that had been wheeled in, the types of files, and the fact that they’d be doing hourly backups, I decided that nothing less than a solid gigabit Ethernet network supporting jumbo frames would do.

But would the constant flow of information back and forth between the post-production editors slow down the workflow of the office personnel? What type of wireless system would be most effective in this large warehouse environment? Should the less-demanding network traffic be offloaded onto a different network altogether and later patched into the rest of the group?

I decided my first priority should be to get the production people up and running as quickly as possible so that I could gauge the kind of stress that extremely large file sharing and transfer would put on the main network. Since the office personnel already had some sort of a stopgap solution going, and the post-production crew were just sort of lounging around waiting for something to do, I enlisted the aid of a few of them to help me construct their network.

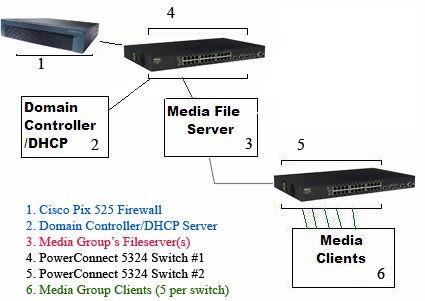

Figure 1: The basic layout for the main network.

Figure 1 shows the machine-to-machine layout of the base network that I decided to build. Note that, in this instance, the Windows 2003 Server machine that is operating the Domain Controller is also running the network’s DHCP Server. (There is also a backup Domain Controller and DHCP Server so that, just in case there’s a problem with the main server, productivity will be uninterrupted.) The firewall is connected to the domain controller via the first switch and is set up to send only to the Domain Controller. A more comprehensive look at how switches and clients are connected can be found in Figure 4.

The first thing I had to do was secure some extremely high quality CAT 6 cabling. For many in the networking field this is a no-brainer. But imagine getting a 3000 watt RMS 7.1 (or even an 8.1) Dolby Digital Surround System with a six foot tall subwoofer and stadium-sized speakers and then trying to connect it all together with clipped headphone wires.

Given the nature of what I knew these people were going to be working on, I wanted the fastest, but also the most reliable network I could build in as short a period of time as possible. And the veins of that network would be good CAT 6.

Figure 2: Cisco’s PIX 525 Firewall.

For a firewall, I went with the Cisco PIX 525 (as opposed to some of the newer models), largely because I was familiar with the unit, and because it has 128 MB of RAM and supports a gigabit Ethernet expansion card. Other noteworthy features are an integrated VPN accelerator and failover support.

The 525 went through a Xeon-powered Windows 2003 Server set up to handle domain control and DHCP functions. Dell PowerConnect 5324 managed switches connected the domain controller to the media file servers and backup servers, with another set of switches connecting those servers to the clients.

Hooking Up

Figure 3: Dell PowerConnect 5324.

Once the components were chosen, setup was more straightforward. After adding a domain controller and DHCP server on the Windows 2003 server, I simply plugged it into a port on the Dell PowerConnect 5324 and used HyperTerminal to configure the switch to recognize the DHCP server. Once I had checked that the IP addresses being handed out were OK, it was simply a matter of connecting the media fileservers and backup servers to the switches and running those servers into another set of switches, which I then ran into Ethernet ports in each of the workstation cubicles.
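Checking that the addresses being handed out are "OK" can be as simple as confirming that each lease falls inside the intended scope and that no address shows up twice. The following is a minimal sketch of that idea rather than the procedure actually used on this network. The subnet, reserved address, and host names are all hypothetical placeholders, since the original addressing plan is not given.

```python
import ipaddress

def check_leases(leases, scope_cidr, reserved=()):
    """Return a list of human-readable problems found in observed DHCP leases."""
    scope = ipaddress.ip_network(scope_cidr)
    reserved_set = {ipaddress.ip_address(a) for a in reserved}
    seen = {}       # address -> first host seen holding it
    problems = []
    for host, addr_text in leases:
        addr = ipaddress.ip_address(addr_text)
        if addr not in scope:
            problems.append(f"{host}: {addr} is outside {scope}")
        if addr in reserved_set:
            problems.append(f"{host}: {addr} collides with a reserved address")
        if addr in seen:
            problems.append(f"{host}: {addr} already leased to {seen[addr]}")
        seen.setdefault(addr, host)
    return problems

# Hypothetical sample data: (host name, leased address) pairs noted by hand.
sample_leases = [
    ("edit-01", "192.168.10.21"),
    ("edit-02", "192.168.10.22"),
    ("edit-03", "192.168.11.5"),   # deliberately outside the assumed scope
]
for problem in check_leases(sample_leases, "192.168.10.0/24",
                            reserved=["192.168.10.1"]):
    print(problem)
```

An empty result means every observed lease sits inside the assumed scope, avoids the reserved addresses, and is unique.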

The transfer of hundreds of gigabytes of video and audio files per hour between the media fileservers, backup servers and clients was a key concern in this network. This extremely heavy data flow could potentially slow the network to a crawl if the network wasn’t properly configured. Fortunately, "jumbo" frames were created specifically to help with data flows similar to mine and I decided to use the maximum MTU size (at the time) of 9K.
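For a rough sense of why jumbo frames help with bulk transfers, here is a minimal sketch comparing a standard 1500-byte MTU with a 9000-byte one. It assumes plain TCP over IPv4 with no VLAN tag and no TCP options, and it treats "9K" as 9000 bytes. Those are simplifying assumptions, not measurements from this network.

```python
# Fixed per-frame costs on the wire, in bytes:
# preamble/SFD (8) + Ethernet header (14) + FCS (4) + interframe gap (12)
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12
# IPv4 header (20) + TCP header (20), both carried inside the MTU
IP_TCP_HEADERS = 20 + 20

def payload_efficiency(mtu):
    """Fraction of on-wire bytes that carry application data."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)

def frames_needed(total_bytes, mtu):
    """Number of full-size frames required to move total_bytes of data."""
    payload = mtu - IP_TCP_HEADERS
    return -(-total_bytes // payload)  # ceiling division

for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} efficient, "
          f"{frames_needed(10**9, mtu):,} frames per gigabyte")
```

The efficiency gain is only a few percentage points. The larger effect is that each gigabyte needs roughly one sixth as many frames, which means fewer packets for the switches, NICs, and server CPUs to process.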

Jumbo frames were disabled by default on the 5324, but correcting that was simple because the 5324, like most managed switches, comes with a very simple web-based GUI. All I had to do was log into the switch, navigate to the System -> Advanced Settings -> General Settings page, and change the Jumbo Frame setting.

I also had to replace some of the older 10/100 Ethernet cards that were present in a few of the workstations with gigabit NICs from Signamax. Between the switches, cabling, and network interface cards, I had pretty much the most solid hardware base that I could conceive to fit the situation. Jumbo frames were enabled on all the switches, the user and security policies were set up, and the fileservers were ready to go.

Now it was time to see if this puppy could fly.

I turned the post-production crew loose on their machines and they began image acquisition and encoding. I wanted to get as many of them going as possible while I monitored network performance from the media fileserver to the clients and backup media fileserver. The results had me grinning into my clipboard. Full duplex speeds were averaging about 25 megabytes a second, or nearly a gig and a half per minute!
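The arithmetic behind that figure is easy to check. The sketch below assumes decimal units (1 MB = 10^6 bytes, 1 GB = 10^9 bytes) and treats 25 MB/s as a per-stream average. The 100 GB job size is a hypothetical example, not a number from the project.

```python
MB = 10**6
GB = 10**9

def gb_per_minute(rate_mb_s):
    """Convert a rate in MB/s to GB per minute."""
    return rate_mb_s * MB * 60 / GB

def line_rate_fraction(rate_mb_s, link_gbps=1.0):
    """Share of the link's raw bit rate used by the given byte rate."""
    return rate_mb_s * MB * 8 / (link_gbps * 10**9)

def transfer_minutes(size_gb, rate_mb_s):
    """Minutes needed to move size_gb at a steady rate_mb_s."""
    return size_gb * GB / (rate_mb_s * MB) / 60

print(f"{gb_per_minute(25):.1f} GB per minute")
print(f"{line_rate_fraction(25):.0%} of a gigabit link")
print(f"{transfer_minutes(100, 25):.0f} minutes for a hypothetical 100 GB job")
```

Under these assumptions a single 25 MB/s stream uses about a fifth of a gigabit link's raw capacity, which leaves headroom for several streams to run side by side.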

For the moment, non-server-related activity such as e-mail, web browsing, and FTP seemed largely unaffected by the large file transfers that were going on in the background. The editors could send small clips back and forth to each other while hundreds of gigs of footage were being captured and digitized, and still be able to check their e-mail and access the various logs and databases with very little interruption.

I even managed to set up a few interim Cisco access points for the office personnel, who had mainly been working out of their hotels but were now showing up in increasing numbers on the neo-contemporary couches (now accompanied by shag rugs) that served as temporary departmental divisions. It was a simple matter of hanging the access points off of the switches, configuring them to recognize the DHCP server, and setting up WPA-AES encryption (all of these things I would later revise and streamline, which you’ll hear about in Part 3).

One of my main concerns was that the addition of the other file servers (PR, Accounting, Administration, Legal, etc.) and their respective users might cause bottlenecks once all of them were regularly using the network at the same time. Considering this was the movie business, with deadlines, press releases, and maybe a sudden crunch for something like “opening night,” it was inevitable. The network had to handle the load of everybody intensely active on the main network at the same time, plus the additional 2-3X load of hourly backups.

Conclusion

I had already devised a contingency plan, should things slow down to a point that I deemed unacceptable. By limiting the number of machines connected to each switch, I decreased the likelihood of traffic getting jammed on a high-demand node.

Each 5324 has 24 gigabit Ethernet ports. So you could theoretically connect 24 machines to each switch. This wouldn’t be taking into account, however, the high volume scenario which, combined with backups, would likely slow network responsiveness enough to make me look bad. (And you never get the second chance to make a first impression.)

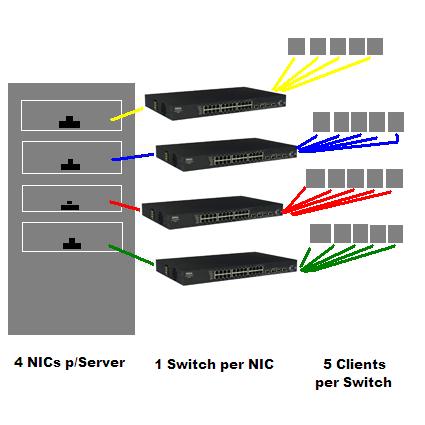

Figure 4: The Contingency Plan.

So by connecting fewer (in this case, five) machines to each switch and terminating each switch into its own separate server NIC, I reduced the chance of network congestion when things got “heavy.” (Figure 4 shows how ten production clients with two workstations each were connected to this network. Note that each fileserver also had a built-in NIC up front to connect to the domain controller switch, bringing the total to five NICs.)
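The trade-off between clients per switch and congestion can be put in rough numbers. The sketch below assumes every client and every server NIC runs at 1 Gb/s, ignores protocol overhead and disk limits, and models the worst case where all clients on a switch pull from the server at once. The 23-client row is an assumption: a fully populated 24-port switch with one port reserved for the server link.

```python
GIGABIT_MB_S = 125.0  # 1 Gb/s expressed in decimal megabytes per second

def oversubscription(clients, client_gbps=1.0, uplink_gbps=1.0):
    """Ratio of total client-facing bandwidth to server-facing bandwidth."""
    return clients * client_gbps / uplink_gbps

def worst_case_share_mb_s(clients, uplink_gbps=1.0):
    """Per-client throughput if every client pulls from the server at once."""
    return uplink_gbps * GIGABIT_MB_S / clients

for clients in (5, 10, 23):
    print(f"{clients:2d} clients per switch: "
          f"{oversubscription(clients):.0f}:1 oversubscribed, "
          f"about {worst_case_share_mb_s(clients):.1f} MB/s each at worst")
```

Under these assumptions, five machines per switch keeps the worst-case share per client several times higher than a fully loaded switch would.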

But just how heavy would things get? It is entirely possible that I could’ve gotten away with ten or more clients per switch and spread out the switches a bit more once I connected the office personnel. What kind of load should I have been anticipating in this particular scenario? What exactly was the breaking point and would I ever even come close to reaching it? And finally, what was the most effective way to route traffic to minimize delays and maximize network stability?

The answers to these questions and more in Part Two…