Introduction

I was beginning to warm up to the new environment. In addition to the shag rugs and couches, they had gone the extra mile and installed six or seven hammocks. With the furnace on full blast, they would have been as at home as if they were still in Sacramento. This was not your typical networking gig by any means. What was bothering me was the thought that multiplying the number of users by ten and adding them to the system would send the whole thing crashing down. What’s more, there wasn’t that much time even when I was hired, and now there was considerably less.

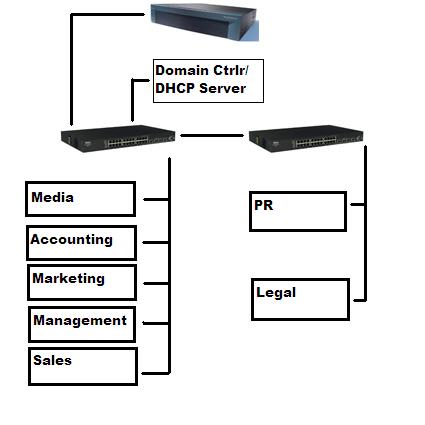

Figure 1 shows our advanced network layout. I’ve gathered all the file servers and added them as separate domains to the network. I also took the highly recommended step of adding each server to the other’s Hosts file so that, just in case for some odd reason the Domain Controller/DHCP Server and their backup went down, the clients would still be able to reach the servers.

Figure 1: The basic layout for the Advanced network.

I wanted as many fallback plans as possible when dealing with a large network where any number of things can go wrong. This isn’t so much of an issue when you’re only dealing with ten or fifteen machines. But once you get into the hundreds of nodes, it will save your network a lot of downtime while you pinpoint and fix the problem.
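As a rough illustration of the Hosts file fallback, the sketch below generates the entries one server would carry for its peers. The server names and IP addresses are hypothetical placeholders (the real ones are not listed here), and `hosts_entries` is just an illustrative helper, not part of any tool used on this network.

```python
# Hypothetical server names and addresses, for illustration only.
SERVERS = {
    "media": "192.168.1.10",
    "media-backup": "192.168.1.11",
    "accounting": "192.168.1.20",
    "marketing": "192.168.1.30",
}


def hosts_entries(servers, own_name):
    """Return hosts-file lines for every server except the one named own_name."""
    lines = []
    for name, address in sorted(servers.items()):
        if name == own_name:
            continue  # a server does not need an entry for itself
        lines.append(f"{address}\t{name}")
    return lines


# Lines that would be appended to the Hosts file on the "media" server.
for line in hosts_entries(SERVERS, "media"):
    print(line)
```

With entries like these in place, name lookups for the listed servers can be answered from the local file even if the Domain Controller is unreachable.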

The key word is redundancy. There are a couple of ways that this network could have been set up. The users could have been split into different groups, with access to the individual servers divided by group (Sales, Finance, etc.). I chose to split users into (Windows) domains, largely because that’s how their company headquarters network was set up. So to save time, I simply imported their accounts. Keeping the domain organization will also be important in Part 3 when we create a VPN tunnel to the company headquarters network.

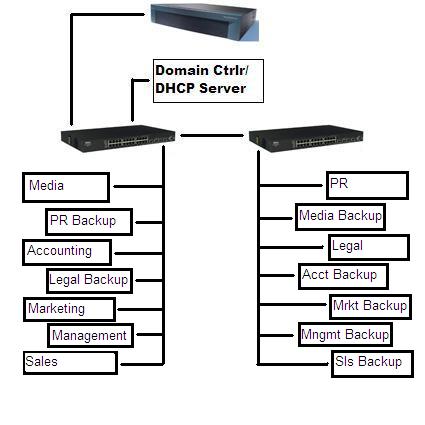

Figure 2 gives us a more complete picture of the network. (Note that the single lines in Figure 2 connecting the servers to the switches actually represent groups of individual connections between each server and a switch port, so there are a total of 7 ports used per switch.) So if the first server switch is destroyed by molten lava or Crazy Beth from housekeeping… bringing every fileserver connected to it down with it… this network will survive for a number of reasons.

Figure 2: Detailed server layout for the Advanced network.

The first reason is the precaution we took in adding a hosts file, with each server listed, to each of the machines so that, even in the case of a Domain Controller freakout, productivity would not be interrupted. The backup servers could also replace their primary counterparts at any time with only a minimal loss of active data. And finally, my diagram was detailed enough that I posted it in the middle of the server room, so users could easily navigate their way around the servers with a pen drive if need be.

Backup

It was actually one of the Media editors who created the backup script, which divided backups into 4 GB files that were copied hourly. This made it less likely that a file would be in the process of being edited while a backup was taking place. The downside of this method was that the backups were not necessarily of the most recently edited material.

If the backup file size had been 50 or 100 GB, and an editor was working on that 100 GB file at the time, then the entire 100 GB could not be copied. During the backup, each 4 GB file was copied locally to a new name, sent to the Media Backup server, and then removed. The script also had the provision that if a file could not be copied, it was put into a list of files to be attempted again at the end of the backup. And if the file still could not be copied, it was skipped until the next backup.
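The editor's actual script is not reproduced here, but the copy, retry and skip logic described above can be sketched roughly as follows. This is a minimal sketch under assumptions: the function names, the `.bak` staging suffix, and treating any `OSError` as "file could not be copied" are all illustrative choices, not details of the original script.

```python
import os
import shutil


def send_chunk(path, backup_dir):
    """Copy one chunk locally under a new name, then move that copy to backup_dir.

    Returns True on success, False if the chunk could not be copied.
    """
    staged = path + ".bak"  # hypothetical naming scheme for the local copy
    try:
        shutil.copy2(path, staged)  # local copy to a new name
        target = os.path.join(backup_dir, os.path.basename(staged))
        shutil.move(staged, target)  # send it, which also removes the staged copy
        return True
    except OSError:
        # Clean up a partial staged copy so the next run starts fresh.
        if os.path.exists(staged):
            os.remove(staged)
        return False


def backup_chunks(chunk_paths, backup_dir):
    """Back up every chunk, retrying failures once at the end.

    Returns the chunks that are skipped until the next backup run.
    """
    retry_list = [p for p in chunk_paths if not send_chunk(p, backup_dir)]
    skipped = [p for p in retry_list if not send_chunk(p, backup_dir)]
    return skipped
```

Keeping the chunks small means a single busy file only delays its own 4 GB piece rather than the whole backup.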

With this setup, the Media editors were capturing, editing, and making backups over the network while I added the rest of the file servers. The Dell PowerConnect 5324 switches were handling the load well, with an average transfer rate still around 1.33 GB per minute.

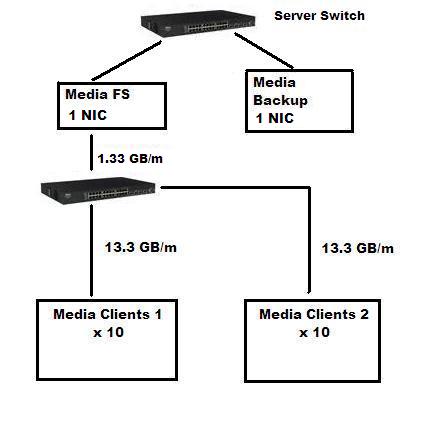

On the Media side, there were ten users with two workstations each for a total of twenty clients. You’ll remember from Part 1 that I had chosen to organize the Media group as five clients per switch, one switch per NIC, and four client NICs per server (with the additional built-in NIC being connected to the Domain Controller’s switch). From Part 1’s Comments it appears that my rationale for this organization was misunderstood, so I’d like to flesh this out a bit.

There were actually two Media fileservers (we’ll call them Media and Media Backup). The primary Media server is where the video/audio files were being transferred to and from, and where audio/video capturing and encoding took place. Remember also that each Media user had two machines. The first machine was connected to a capture device that also encoded the files to the desired format and size. This machine then automatically named each file and transferred it into a folder on the Media fileserver. Once an entire reel or cassette was captured and named, the finished folder was sent to the Media Backup server.

The second Media workstation was where the actual editing took place. This involved an eight-hour series of transfers from the primary Media server to the ten media editing stations. The saved projects were then sent from the editing stations back to the Media server, with an hourly backup being sent to the Media Backup server. Each transfer on the editing workstations was a minimum of 50 GB.

With ten editing stations, there was a minimum of 500 GB being transferred at a time, with the overall transfer rate of each workstation being 1.33 GB per minute. This means that each switch was being hit with about 13.3 GB per minute from the Media editing workstations alone. We can pretty much double that figure with the transfer from the capture/encoding workstations to the Media fileserver.

Figure 3 shows a hypothetical scenario in which we’re only using one NIC. As you can see, the two workstations used by each Media client are hitting the switch with around 26.6 GB per minute. But with only one gigabit connection on the Media fileserver, throughput is choked down to 1.33 GB per minute, the effective transfer rate of a gigabit NIC.

So while the main network, from the Firewall to the Domain Controller/DHCP Server down to the clients, was functioning efficiently, the Media editors would experience lag at the file server because a single NIC card is incapable of processing 26.6 GB per minute.

Figure 3: Hypothetical 1 NIC scenario.
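The bottleneck arithmetic behind this scenario can be written out as a short calculation. It is only a sketch that reuses the figures quoted in the text (ten editors, two workstations each, 1.33 GB per minute per link); the function names are illustrative.

```python
EFFECTIVE_RATE_GB_PER_MIN = 1.33  # per-link rate quoted in the text


def offered_load(editors=10, workstations_per_editor=2,
                 rate=EFFECTIVE_RATE_GB_PER_MIN):
    """Traffic the Media workstations can push toward the fileserver, in GB/min."""
    return editors * workstations_per_editor * rate


def server_capacity(nics, rate=EFFECTIVE_RATE_GB_PER_MIN):
    """Traffic the fileserver can accept across its client-facing NICs, in GB/min."""
    return nics * rate


def oversubscription(nics):
    """How many times the offered load exceeds what the server NICs can carry."""
    return offered_load() / server_capacity(nics)


print(f"offered load:      {offered_load():.1f} GB/min")
print(f"one-NIC capacity:  {server_capacity(1):.2f} GB/min")
print(f"oversubscription:  {oversubscription(1):.0f}x")
```

Passing a different NIC count to `server_capacity` shows how the ceiling rises as NICs are added, minus whatever CPU and memory overhead eats in practice.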

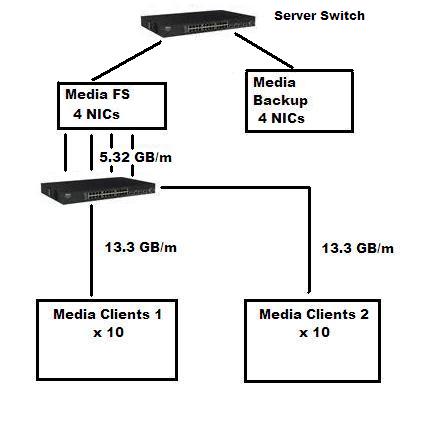

Figure 4 shows what was actually implemented. With the addition of three more NICs, throughput increases to around 5.32 GB per minute (give or take a few MB in the name of CPU and memory overhead). I opted to use the network load balancing built into Windows to spread the traffic across the NICs. I suppose I could’ve written a Java port listener to hand out routes “round robin” to each NIC… but life’s too short.

Figure 4: Four NIC card solution.
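For readers unfamiliar with the term, "round robin" simply means handing out targets in rotation. The sketch below shows the idea only; it is not what was deployed (Windows network load balancing handled that), and the client and NIC names are hypothetical.

```python
from itertools import cycle


def assign_round_robin(clients, nics):
    """Assign each client to a NIC in rotation and return {nic: [clients]}."""
    assignment = {nic: [] for nic in nics}
    # cycle() repeats the NIC list indefinitely, so zip stops when clients run out.
    for client, nic in zip(clients, cycle(nics)):
        assignment[nic].append(client)
    return assignment


# Twenty Media workstations spread over four client-facing NICs.
clients = [f"media-ws{i:02d}" for i in range(1, 21)]
nics = ["nic1", "nic2", "nic3", "nic4"]
for nic, assigned in assign_round_robin(clients, nics).items():
    print(nic, len(assigned), "clients")
```

With twenty workstations and four NICs, each NIC ends up with five clients, which matches the five-clients-per-switch layout described earlier.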

Note that I’ve also attached four NICs to the Media Backup server, even though only one NIC (the built-in one) is connected to the regular Media server through the Server Switch. That way, if there’s a problem with the main Media server, all I’ll have to do is move the connections over to the backup server. And if you’re wondering why I didn’t use 10 Gigabit Ethernet: unfortunately, it was neither affordable, practical, nor widely available at the time.

General Office requirements

With the heavy-traffic portion of the network squared away, let’s look at the other side of the network. Figure 5 shows the third group of clients: Marketing. This group pretty much hits the average in terms of number of clients per group (a couple of groups had 20 clients and a couple had 10). Also, all the Designers belonged to the Marketing department and accounted for the largest file transfers of any of the general office staff.

Figure 5: Marketing Clients Chart.

As you can see, with the Marketing group I had a little more flexibility in the number of clients per switch. Since each Media editor was transferring approximately 1.33 GB per minute and each Marketing client was only hitting about 200 MB an hour, I figured I’d be more than safe raising the number of clients per switch by 125 percent. First, though, I need to backtrack to where I came up with that 200 MB per hour figure.

As an engineer with ten years’ worth of experience and an absolute business professional, I would like to tell you I chose my Test Client at random… I did not. “Mary” was chosen because her eyes were the deepest shade of blue that I had ever seen. After a week of running around the warehouse and tripping over suede footrests and what I can only hope were imitation Salvador Dalí sculptures, I found that whenever I was anywhere near Mary I couldn’t look at anything else. So why not drag her into the network auditing process?

As it turned out, Mary was the Marketing CIO and head designer in charge of putting together everything from movie posters to promotional material. As luck would have it, she also had a lot of work to do. So I connected her laptop to one of the first available Marketing switches and began to monitor her network utilization.

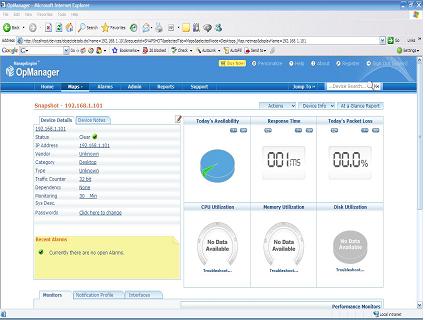

Figure 6: The latest version of Adventnet’s OpManager.

OpManager is a wonderful program that I just happened to stumble upon in a Google AdSense link. It allows you to monitor the CPU, disk, and memory usage as well as the receive and transmit traffic on any client, group of clients, server, switch or router on your network. You can drill down to a single client, pull up every client on your network or look at all the clients connected to a particular switch. The program was almost custom-made for what I needed.

Mary had a ton of e-mail waiting for her concerning a film that was due to be shown in less than two weeks at the debut of the film festival. I asked her to describe the sizes of her file transfers as they came in. She received a 20 MB still image that had been captured from one of the movie frames. She also received several PDF files of between 5 and 10 MB each. She responded to each of these e-mails, sometimes with an attached Word document with corrections and suggestions for the press release. Her job for the day was to come up with a full-sized publicity poster for the film, so she used the 20 MB scan as the centerpiece and designed text and figures around it. Over two days of monitoring, her traffic averaged out to about 200 MB an hour.

This was more or less consistent with what the average Accountant or Finance Administrator was pulling from constantly creating and saving invoices to the Accounting Server, bringing up older invoices, and sorting and archiving the invoice directories. The Sales and Legal departments could pull in more or less on any given day (depending on what was going on at that particular time), but they all basically averaged out to be the same. So the whole thing was now rolling.

My biggest question at this point was whether it would be better to offload the ninety or so Office workers onto a separate network from the 20 heavy-load Media clients. There are about a hundred and sixteen million ways that this can be done. They range from the basics of setting up a VLAN, to dividing the network into separate subnets, to the extreme of putting a separate switch on the Domain Controller and the Backup Domain Controller and essentially running two different networks with absolutely no further stress on the Primary Domain Controller.

So now that all the file servers and all the clients were finally in place, I could see what the actual load on the network was and use that information to guide my next step. Since OpManager allowed me to view the total utilization of every device on the network at the same time, it was the natural choice. Keep in mind that we’ve got 20 Media computers doing upwards of 1600 GB worth of transfers per hour and another 90 Office clients clocking in at a maximum of about 200 MB per hour each.

I was stunned: Overall network utilization never went above eight percent! I had been monitoring the network utilization every half hour on all the servers via Windows Task Manager, since the loads hitting the Media servers were the highest at any given point on the network. But for the rest of the office, network utilization rarely ever reached one percent, and the Domain Controller’s network utilization never went above eleven percent. Since most of the heavy traffic occurred between the Media file servers and their clients, the overall utilization was pretty low.
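For scale, the hourly totals quoted for the two groups follow directly from the per-client rates given earlier. The short calculation below reproduces them; it assumes 1 GB = 1000 MB and treats every client as transferring at its quoted rate for the whole hour, which is an upper bound rather than a measurement.

```python
MEDIA_CLIENTS = 20
MEDIA_RATE_GB_PER_MIN = 1.33   # per Media workstation, as quoted in the text
OFFICE_CLIENTS = 90
OFFICE_RATE_MB_PER_HOUR = 200  # per Office client, as quoted in the text


def media_gb_per_hour():
    """Upper-bound hourly volume for all Media workstations combined."""
    return MEDIA_CLIENTS * MEDIA_RATE_GB_PER_MIN * 60


def office_gb_per_hour():
    """Upper-bound hourly volume for all Office clients combined (1 GB = 1000 MB)."""
    return OFFICE_CLIENTS * OFFICE_RATE_MB_PER_HOUR / 1000


def office_share():
    """Fraction of the combined hourly volume that comes from Office clients."""
    total = media_gb_per_hour() + office_gb_per_hour()
    return office_gb_per_hour() / total


print(f"Media:  {media_gb_per_hour():.0f} GB/hour")
print(f"Office: {office_gb_per_hour():.0f} GB/hour")
print(f"Office share of total: {office_share():.1%}")
```

On these numbers the ninety Office clients together account for only about one percent of the traffic, which is consistent with how little they registered in the utilization figures.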

Conclusion

After the ultimate near heart attack, it turned out that with the network at only 8% load and no signs of slowdown in the office segment, there would be no need to separate the two networks. I took the precaution, however, of giving the Office domains their own subnet so it would be a bit easier to distinguish the lightweight Office transfers from the heavyweight encoding and backups that were going on in the Media department.
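To illustrate why a separate subnet makes the two kinds of traffic easier to tell apart, the sketch below classifies an address by the subnet it falls in. The address ranges are hypothetical (the actual addressing plan is not given here), and `segment_of` is an illustrative helper built on Python's standard `ipaddress` module.

```python
import ipaddress

# Hypothetical site range, split into two equal halves: one for Media, one for Office.
SITE = ipaddress.ip_network("10.0.0.0/23")
MEDIA_NET, OFFICE_NET = SITE.subnets(prefixlen_diff=1)  # 10.0.0.0/24 and 10.0.1.0/24


def segment_of(address):
    """Return 'media', 'office' or 'unknown' for a dotted-quad address string."""
    ip = ipaddress.ip_address(address)
    if ip in MEDIA_NET:
        return "media"
    if ip in OFFICE_NET:
        return "office"
    return "unknown"


for addr in ("10.0.0.12", "10.0.1.37", "192.168.5.1"):
    print(addr, "->", segment_of(addr))
```

Once each group has its own range, a monitoring tool or a quick filter can separate Office transfers from Media transfers by source address alone.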

While I was at it, I discovered that while the overall network rolled along as smooth as a Cuban cigar, the Media fileservers would occasionally get bogged down when three or four editors were transferring the same 500 GB file to their machines at the same time that the hourly backups were occurring. The slowdown usually didn’t last longer than thirty seconds, but those thirty seconds were unacceptable to me. I wanted to find a more efficient way to route incoming and outgoing file transfers separately from the backups that were occurring in the background.

But the next priority would be to set up a VPN into the company headquarters network. What kind of VPN would I use, and how would I go about integrating it into the rest of the network? I was also told it would be desirable if certain users could be allowed to VPN from their hotels into, say, the Accounting or Marketing servers.

I also needed to come up with a somewhat more elaborate wireless networking scheme. I was dealing with a rather large area and clients that generally liked to move around a lot with their laptops. Plus, now that it had been proven that the overall network utilization was low, I had to begin the process of removing some switches and increasing the overall network efficiency.

The opening of the Grand Network had been a qualified success, but there were still improvements to be made before my clients and I could be one hundred percent satisfied. Could I improve the network, please the client, and get the girl with only a week and a half left before the festival? The answer to this and more in Part 3.