Introduction

WAN acceleration products that optimize application performance have historically been the domain of huge enterprises connecting far-flung operations. But that is starting to change. Even small companies are becoming more dispersed, with employees working from home or local offices, making collaboration essential. And the types of applications that were previously reserved for large companies, with immense budgets and entire IT departments to run them, are increasingly used by everyone.

For these reasons, smaller organizations are experiencing the same application performance issues that have long plagued their larger brethren. Fortunately, new acceleration products, available in an increasing variety of forms, are starting to offer suitable solutions.

To understand if a WAN acceleration system will provide useful benefits for your network, it’s helpful to look under the hood to understand how these systems work and what they can accomplish.

It’s Magic

Back in the olden days, I worked at an early WAN acceleration vendor. Whenever we first installed a demo unit on a customer’s network and they saw how much more responsive the applications suddenly became, their jaws would inevitably drop and they’d stare in amazement. “That’s incredible,” they’d gasp. “It’s like magic!”

It reminded me of the movie “The Mosquito Coast” where the cranky inventor played by Harrison Ford tells a band of natives in the rain forest, agog over the ice he’s created for them in a primitive freezer, “It’s not magic – it’s thermodynamics!”

So I would put on my best Han Solo voice (admittedly not distinct enough from my Luke Skywalker voice for anyone to notice the difference) and state, “It’s not magic – it’s WAN acceleration.”

WAN acceleration can seem like magic to anyone previously stuck waiting for minutes in a hotel room for a PowerPoint presentation on the company file server to open. But once you understand how it works, conceptually it’s not especially complicated. More importantly, if you understand how it works, you can ignore the vendor hype and determine the value WAN acceleration would bring to your network, as well as how to evaluate which products best fit your applications.

So What’s the Problem?

WAN acceleration solves two separate problems – insufficient bandwidth and the inability of applications to use all the bandwidth that’s available.

The fact that low-bandwidth WAN links cause sluggish performance is hardly surprising. On the LAN, files transfer quickly, videos play smoothly, and we usually don’t need to play Angry Birds to keep ourselves occupied while waiting for applications to load. But try to download a gigabyte file over a DSL line, and anyone who expects it to arrive instantaneously is lacking a good understanding of either physics or math (or probably both).

What is surprising is how much latency and packet loss can affect performance, preventing applications from using all the bandwidth. To illustrate, I’ll show a few examples using a network emulator to simulate different WAN conditions.

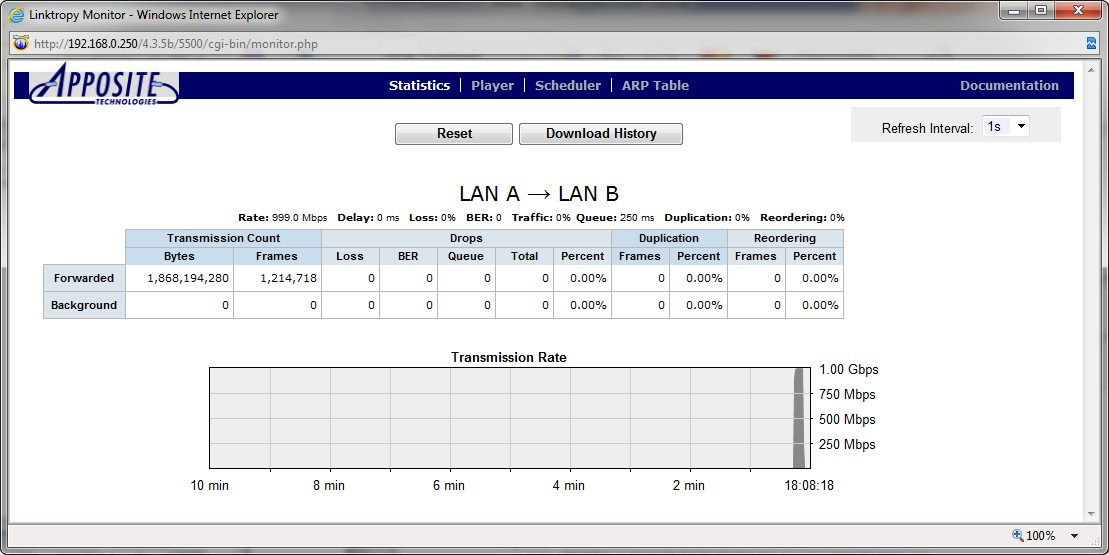

This first graph shows throughput of an FTP file transfer between two Linux servers on a LAN. Since there is no latency or loss, the transfer uses all of the 1 Gbps bandwidth available.

LAN – LAN FTP transfer

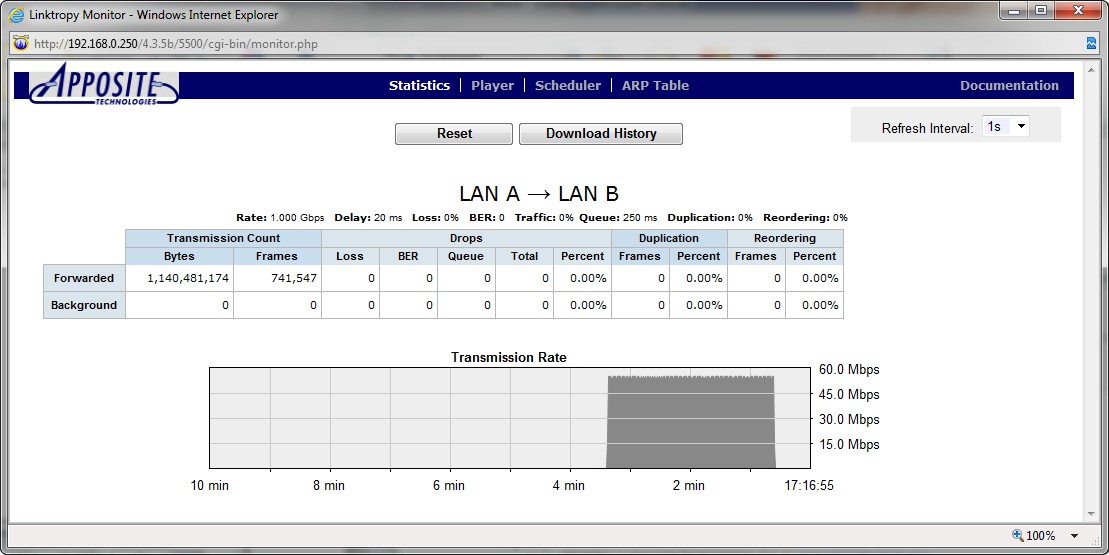

Next, I add 40 ms of delay, the round-trip time between my home and my office only a few miles away. (Of course, the packets don’t take the direct route of my commute, but instead follow the path the taxis use to ferry tourists from the airport by looping through a city an hour away.) To illustrate the point, I’ve left the bandwidth at 1 Gbps, but throughput is suddenly limited to only 55 Mbps!

(Note: The 20 ms delay shown in the screenshots is one-way. It is applied in each direction for a total 40 ms round-trip delay.)

LAN – LAN FTP transfer w/ 40 ms delay

Higher latency, such as when connecting from a local office to headquarters across the country, or to Europe or China, reduces the throughput even further.

| Delay (RTT) | Throughput |
|---|---|
| 0 ms | 1 Gbps |
| 40 ms | 55 Mbps |
| 100 ms | 22 Mbps |
| 250 ms | 9 Mbps |

Table 1: Delay vs. throughput

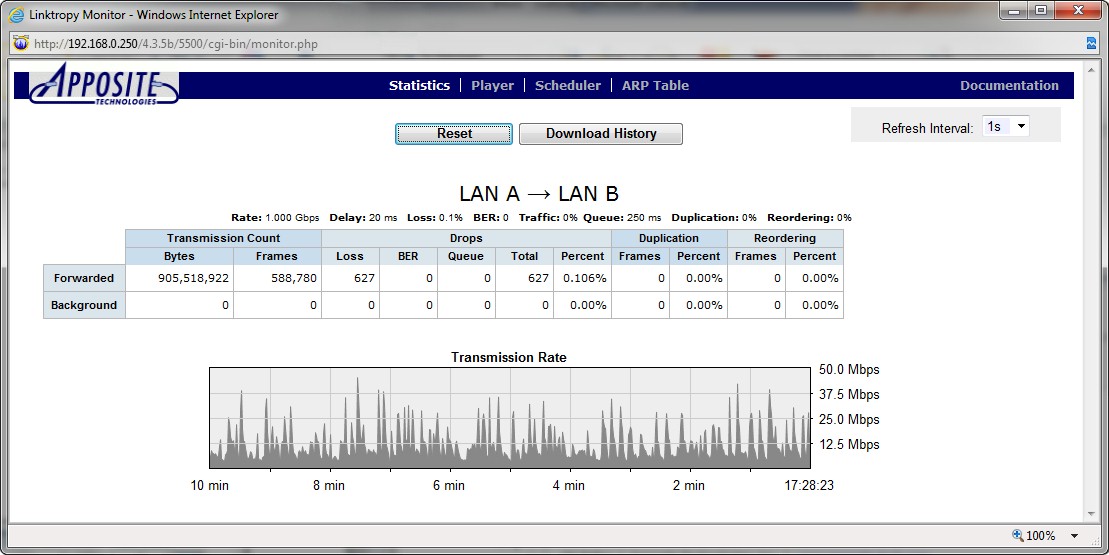

These tests assumed lossless links. But since I’m usually connected over Internet VPN, I should expect occasional packet losses. At 40 ms delay, even with a loss rate of only 0.1%, throughput drops from 55 Mbps to 14 Mbps, and becomes highly variable as shown below.

LAN – LAN FTP transfer w/ 40 ms delay, 0.1% loss

So what’s going on? Fundamentally, most applications weren’t designed to run over a WAN, and even the core networking protocols such as TCP, CIFS, and HTTP were created in an era when a 56K fixed line was a luxury and most remote users connected with modems measured in baud.

There may have been a few visionaries at the time who conceived of the modern, everything connected world. But they were too worried about making networking work at all to be concerned about optimizing performance for applications that wouldn’t exist for another couple of decades.

Although this is a vast oversimplification that might get me fined by the Internet police, conceptually, TCP guarantees delivery of data across a lossy network. It does this by sending a block of data and waiting for acknowledgements to return from the destination before sending more data. This works great on the LAN where acknowledgements return quickly and data is rarely lost. But as these examples show, even a small amount of latency or loss can be disastrous for performance.
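To make the send-and-wait behavior concrete, here is a rough Python sketch of two textbook approximations. The first caps throughput at one window of data per round trip. The second is the widely cited Mathis approximation for TCP under random loss. The 275,000-byte window is an assumption, back-solved from Table 1 rather than measured, and the 1,460-byte segment size is likewise assumed; neither comes from the test setup itself.

```python
import math

def window_limited_bps(window_bytes, rtt_s, link_bps):
    """Throughput when at most `window_bytes` can be unacknowledged at once."""
    if rtt_s <= 0:
        return link_bps  # no delay: the link itself is the only limit
    return min(link_bps, window_bytes * 8 / rtt_s)

def loss_limited_bps(mss_bytes, rtt_s, loss_rate):
    """Mathis approximation: rate ~ (MSS / RTT) * sqrt(3/2) / sqrt(loss)."""
    return (mss_bytes * 8 / rtt_s) * math.sqrt(1.5) / math.sqrt(loss_rate)

LINK_BPS = 1e9          # 1 Gbps link, as in the tests above
WINDOW_BYTES = 275_000  # assumption: inferred from Table 1, not measured
MSS_BYTES = 1460        # assumption: typical Ethernet segment size

for rtt_ms in (0, 40, 100, 250):
    bps = window_limited_bps(WINDOW_BYTES, rtt_ms / 1000, LINK_BPS)
    print(f"{rtt_ms:>3} ms RTT -> {bps / 1e6:.1f} Mbps")

lossy = loss_limited_bps(MSS_BYTES, 0.040, 0.001)
print(f"40 ms RTT, 0.1% loss -> {lossy / 1e6:.1f} Mbps")
```

Under these assumptions the window model lands close to the figures in Table 1, and the loss estimate comes out in the same range as the 14 Mbps measured above. Real TCP stacks differ in the details, so treat both as back-of-the-envelope models only.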

On top of that are limitations within the application protocols. One notorious example is CIFS, the Microsoft file sharing protocol used to access networked drives. CIFS adds another layer that limits how much data can be sent while waiting for acknowledgements. Every user who has tried to open a file on a shared drive physically located at a different site has screamed to the IT team, “our network is too slow!” But the problem is not the network. With 40 ms of latency between a file server and a Windows 7 PC, drag and drop of a file takes ten times longer than transferring the same file using FTP.
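A simplified model shows why an extra request-and-wait layer hurts so much. The sketch below compares a protocol that asks for one block per round trip against an idealized stream that keeps the link full. The 64 KB block size, the 10 MB file, and the 100 Mbps link are hypothetical values chosen for illustration, not CIFS constants, and the model is not meant to reproduce the tenfold figure above.

```python
import math

def chatty_transfer_s(file_bytes, block_bytes, rtt_s, link_bps):
    """One block per round trip: every block pays a full RTT plus its wire time."""
    blocks = math.ceil(file_bytes / block_bytes)
    return blocks * rtt_s + file_bytes * 8 / link_bps

def streaming_transfer_s(file_bytes, rtt_s, link_bps):
    """Idealized stream: one round trip to start, then the link stays full."""
    return rtt_s + file_bytes * 8 / link_bps

FILE_BYTES = 10_000_000  # hypothetical 10 MB file
BLOCK_BYTES = 64 * 1024  # hypothetical request size
RTT_S = 0.040            # 40 ms round trip, as in the example above
LINK_BPS = 100e6         # hypothetical 100 Mbps link

chatty = chatty_transfer_s(FILE_BYTES, BLOCK_BYTES, RTT_S, LINK_BPS)
stream = streaming_transfer_s(FILE_BYTES, RTT_S, LINK_BPS)
print(f"chatty: {chatty:.2f} s, streaming: {stream:.2f} s")
```

In this model the time spent waiting on round trips dwarfs the time spent moving bytes, which is why adding bandwidth alone does little for a chatty protocol.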

From these examples, you can see why we call latency the silent killer. It’s not the delay itself that’s the problem, but how the delay impacts the ability of protocols and applications to transmit data. Fortunately, that means solutions are possible by changing how the protocols and applications operate.

Limited bandwidth

Since there are two separate problems – lack of bandwidth and the inability to use the available bandwidth – there are two different sets of optimizations included in any full-featured accelerator. Let’s start with the former.

Compression

The value of compression is easy to understand – if a file is compressed by 2x, it needs only half as many bits on the wire, so it crosses the same link in half the time. Compression is so useful that many WAN acceleration vendors gained their start as developers of compression algorithms. Because compression can reduce the amount of bandwidth needed to support a site, it’s often the most effective component for demonstrating ROI on the purchase of an accelerator.

Of course, the effectiveness of compression can vary widely depending on the traffic. A file consisting of nothing but the letter “A” repeated one billion times can be compressed down to just a few bytes, making for a very impressive demo.

But real data are more complicated, and so are the compression systems. Algorithms that work well on documents may be very different from ones that shrink already-compressed image files or streaming video, so most accelerators include multiple compression techniques and may adaptively adjust based on application.

However, compression is computationally very intensive, creating a trade-off between performance and the cost of building faster boxes or adding compression chips.
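The dependence on traffic type is easy to see with Python’s standard `zlib` module, used here only as a stand-in for whatever algorithms a given accelerator implements. The sketch compresses a highly repetitive buffer and a buffer of random bytes, which behaves much like already-compressed or encrypted data.

```python
import os
import zlib

def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by compressed size (higher is better)."""
    return len(data) / len(zlib.compress(data, level))

SIZE = 1_000_000
repetitive = b"A" * SIZE        # the "impressive demo" case
random_like = os.urandom(SIZE)  # stand-in for already-compressed data

print(f"repetitive:  {compression_ratio(repetitive):.0f}x")
print(f"random-like: {compression_ratio(random_like):.3f}x")
```

The repetitive buffer shrinks dramatically, while the random buffer does not shrink at all and typically grows slightly. Raising `level` trades more CPU time for a better ratio, which is the same cost trade-off described above.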

Caching

Like compression, caching reduces the amount of data sent over the WAN. The basic idea is simple – maintain a copy of everything sent over the WAN, and if a file has previously been sent, simply reference a local copy instead of sending it again. Of course, implementation can vary widely.

First, there’s the question of how much data to store. More is better, but more requires larger hard drives and faster CPUs to search for matches in a bigger library, raising costs. The secret sauce is in the algorithms that decide what to keep and how long to keep it.

The second question is which blocks of data to cache. Traditionally, caching was done at the file level. If the exact same document or image was sent previously, a cached copy could be used instead. But our company has a dozen versions of the corporate PowerPoint presentation on the file server for different situations that differ by a couple slides, and I often find myself opening each before I find the one I need.

Caching is now often done on smaller blocks of data, or by doing comparisons with cached files and transmitting a list of differences instead. But this requires considerably more powerful hardware.
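As a minimal sketch of block-level caching, the Python below splits data into fixed-size chunks, hashes each one, and sends a short reference in place of any chunk both sides have already seen. The `ChunkCache` class, the token format, and the 4 KB chunk size are all hypothetical and do not describe any vendor’s design. Fixed-size chunks are also a simplification: they only match when changes leave the chunk boundaries aligned.

```python
import hashlib
import os

CHUNK_SIZE = 4096  # hypothetical chunk size

class ChunkCache:
    """One instance per side of the WAN; both fill in the same order."""

    def __init__(self):
        self.store = {}  # SHA-256 digest -> chunk bytes

    def encode(self, data: bytes):
        """Sender: emit ('raw', chunk) for new chunks, ('ref', digest) for known ones."""
        tokens = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).digest()
            if digest in self.store:
                tokens.append(("ref", digest))
            else:
                self.store[digest] = chunk
                tokens.append(("raw", chunk))
        return tokens

    def decode(self, tokens) -> bytes:
        """Receiver: rebuild the data, learning new chunks along the way."""
        out = []
        for kind, payload in tokens:
            if kind == "raw":
                self.store[hashlib.sha256(payload).digest()] = payload
                out.append(payload)
            else:
                out.append(self.store[payload])
        return b"".join(out)

def wire_bytes(tokens) -> int:
    """Payload bytes that would cross the WAN (token framing ignored)."""
    return sum(len(payload) for _, payload in tokens)

sender, receiver = ChunkCache(), ChunkCache()
v1 = os.urandom(10 * CHUNK_SIZE)  # first version of a file
v2 = v1[:CHUNK_SIZE] + os.urandom(CHUNK_SIZE) + v1[2 * CHUNK_SIZE:]  # one chunk changed

first, second = sender.encode(v1), sender.encode(v2)
assert receiver.decode(first) == v1 and receiver.decode(second) == v2
print(wire_bytes(first), wire_bytes(second))
```

Sending the second version costs roughly a tenth of the first, because nine of its ten chunks travel as 32-byte references. This is the situation with the near-identical presentations described above.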

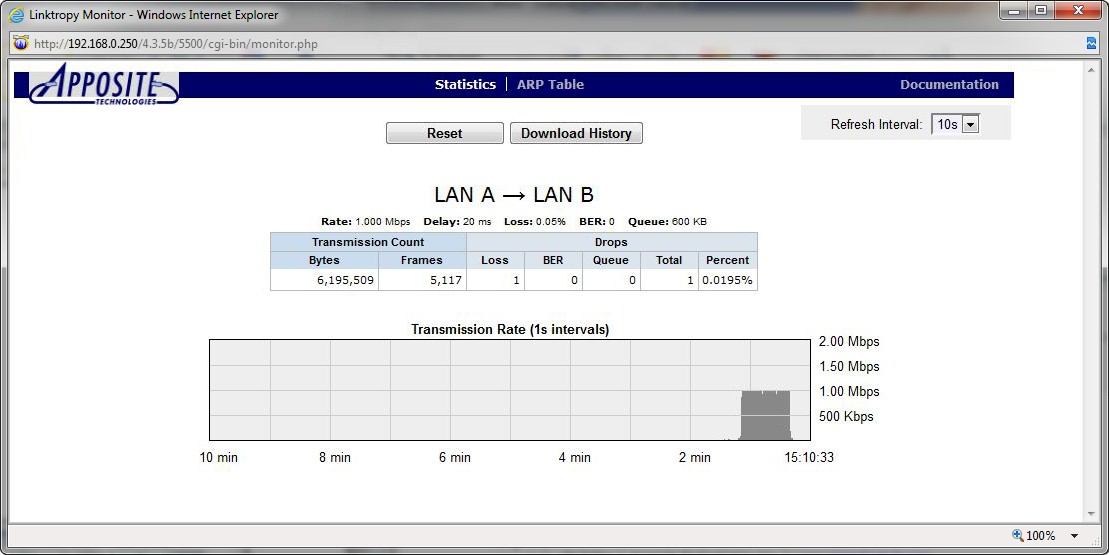

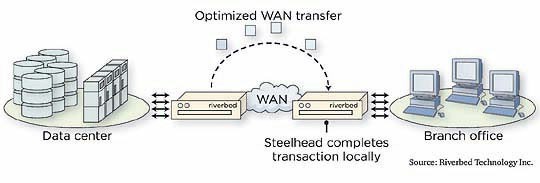

Riverbed Steelhead – without optimization

The two graphs above and below show how much difference caching can make. For transfer of a 10 MB file over a 1 Mbps link, a Riverbed Steelhead system reduced the bandwidth used by 98%.

Riverbed Steelhead – with optimization

QoS / Prioritization

Caching and compression can only go so far. At some point there just isn’t enough bandwidth to support all the applications. When this happens, the best solution is to prioritize traffic, sending time-sensitive packets such as VoIP before bulk data transfers and reserving minimum bandwidth levels for critical applications.

Again, this creates a trade-off between efficiency and complexity/cost. A system to reserve bandwidth to particular IP addresses or prioritize voice traffic over file transfers is easy to implement and simple to configure. A system that can automatically identify Skype connections or catch music downloads while prioritizing different applications into multi-tiered hierarchies can be better at guaranteeing performance of important applications. But such a system is obviously more expensive to build and requires more effort to configure.
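The simple end of that spectrum can be sketched as a strict-priority queue: whenever the link is free, the highest-priority waiting packet goes first. The class names and priority values below are hypothetical, and packets are just strings for illustration.

```python
import heapq
import itertools

# Hypothetical traffic classes: lower number is sent first.
VOIP, INTERACTIVE, BULK = 0, 1, 2

class PriorityScheduler:
    """Strict-priority queue; arrival order is kept within each class."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker preserves FIFO order

    def enqueue(self, packet, priority):
        heapq.heappush(self._heap, (priority, next(self._seq), packet))

    def dequeue(self):
        """Return the next packet to put on the wire."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

sched = PriorityScheduler()
sched.enqueue("backup-1", BULK)
sched.enqueue("voice-1", VOIP)
sched.enqueue("backup-2", BULK)
sched.enqueue("voice-2", VOIP)

order = [sched.dequeue() for _ in range(len(sched))]
print(order)
```

Strict priority alone can starve bulk traffic whenever higher classes stay busy, which is one reason to pair it with the minimum bandwidth reservations mentioned above.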

Inability to use available bandwidth

As we’ve seen, regardless of how much bandwidth is available, many protocols and applications can’t go at full speed when there is significant WAN latency or loss. Proxy techniques can overcome these limitations to allow applications to use all the bandwidth.

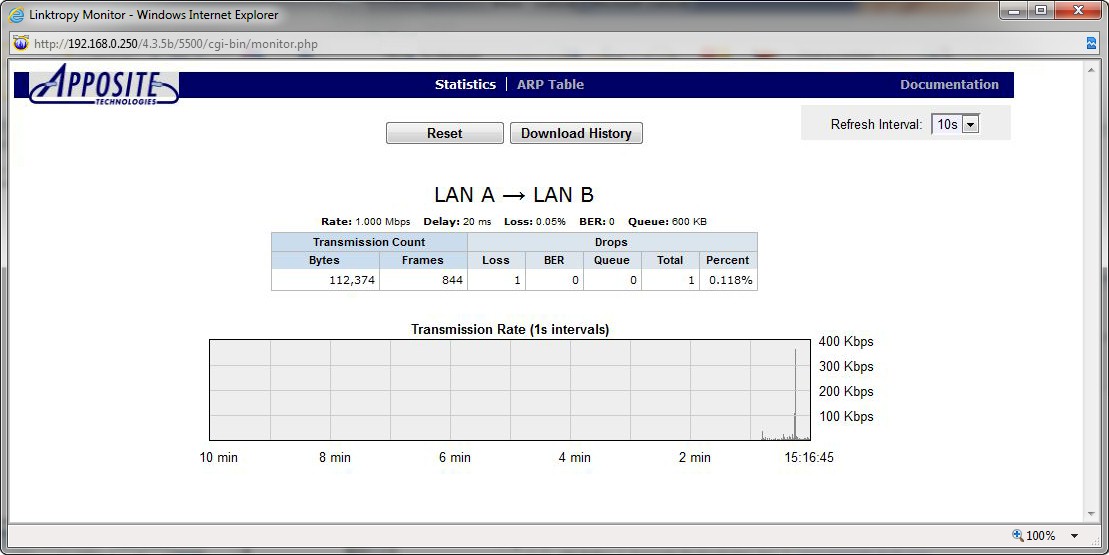

In the traditional WAN accelerator architecture illustrated below, a device located between the server and the WAN pretends to be the end node and intercepts the connection. The accelerator then sends the data over the WAN in a way that’s less sensitive to latency and loss. Finally, a second accelerator on the opposite side pretends to be the original server to transmit the data to the client. The process is transparent to the network and can optimize the performance of almost any protocol or application.

WAN acceleration architecture

(Courtesy: Riverbed)

TCP Acceleration

The sensitivity of TCP to latency and loss can be reduced in many ways, from simply increasing the amount of data to send at a time, to completely replacing TCP with a latency-optimized transport protocol.
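The simplest of these techniques, sending more data at a time, amounts to sizing the window to the bandwidth-delay product of the path. The Python sketch below computes that figure and requests matching socket buffers through the standard `SO_SNDBUF` and `SO_RCVBUF` options. The helper names are hypothetical, and the operating system may clamp or adjust whatever size is requested, so this illustrates the idea rather than serving as a tuning recipe.

```python
import socket

def bdp_bytes(link_bps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return round(link_bps * rtt_s / 8)

def open_tuned_socket(link_bps, rtt_s):
    """Create a TCP socket and request buffers sized to the path's BDP."""
    size = bdp_bytes(link_bps, rtt_s)
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # These are requests only; the OS decides the actual buffer sizes.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, size)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size)
    return sock

print(bdp_bytes(1e9, 0.040))  # bytes in flight needed for 1 Gbps at 40 ms RTT
```

For the 1 Gbps, 40 ms path used earlier, about 5 MB has to be in flight to keep the link busy. An accelerator can apply a setting like this once on the WAN side instead of requiring every client and server to be retuned.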

CIFS Proxy

The accelerator can act as a CIFS client to collect the file and folder information from the server quickly, send it efficiently over the WAN (after accounting for cached objects and compressing the remainder), then act as a CIFS server to deliver the file to the end client. This can make it much faster to browse folders and download or open files.

HTTP Acceleration

Web pages may contain a hundred or more separate images and objects, each of which has to be fetched in a separate transfer. The HTTP protocol is not particularly efficient, and combined with TCP handshaking, can require a number of round trips back and forth to establish a connection before even sending any data, then more round trips at the end to terminate the connection.

While some number of objects can be downloaded in parallel, web pages in far-away countries are often painfully sluggish to load. HTTP acceleration streamlines this process, not only improving how quickly Web pages load, but improving the responsiveness of the many corporate applications that use a browser-based user interface.
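A crude model shows how round trips add up on a page with many objects. The Python sketch below counts only latency and ignores transfer time, server time, and rendering. The six parallel connections and the two round trips per object (one for the TCP handshake, one for the request and response) are assumptions chosen for illustration, not measurements of any browser.

```python
import math

def page_load_s(objects, parallel, rtt_s, rtts_per_object):
    """Latency-only estimate: objects are fetched in batches of `parallel`."""
    batches = math.ceil(objects / parallel)
    return batches * rtts_per_object * rtt_s

OBJECTS = 100  # "a hundred or more" objects per page
PARALLEL = 6   # assumption: simultaneous connections

for label, rtt_s in (("LAN, 1 ms", 0.001), ("WAN, 250 ms", 0.250)):
    fresh = page_load_s(OBJECTS, PARALLEL, rtt_s, rtts_per_object=2)
    reused = page_load_s(OBJECTS, PARALLEL, rtt_s, rtts_per_object=1)
    print(f"{label}: {fresh:.2f} s with new connections, {reused:.2f} s reusing them")
```

The same page that feels instant on the LAN spends seconds waiting on round trips at 250 ms. Saving a single round trip per object halves the wait in this model, and removing round trips is the kind of streamlining an HTTP proxy aims for.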

Other Application Proxies

Many other critical applications, from accounting packages, to databases, to virtual desktops, were designed to run locally. They hit serious performance issues when there is too much latency or loss between client and server. Most of these can be improved with a proxy module that bundles up the data locally, sends it efficiently over the WAN, and delivers it locally to the client. However, each application is unique, so availability of acceleration modules for particular applications can vary widely between products.

Appliances, Clients, and Clouds

The traditional architecture for WAN acceleration is two appliances, a small one at the branch office and a large one at the central site. This is the best architecture for multi-national organizations, but not necessarily for small networks. Fortunately, acceleration products are becoming available in a wider variety of formats.

People working from home or on the road need a simple way to accelerate their connection back to the office. Many vendors now offer client software to install on individual PCs to connect to the central appliance. A small branch office can use the same software, installed on each PC, instead of an appliance. Alternatively, some vendors offer a software version that can be loaded onto a local server as a virtual machine to use as a low-cost appliance.

Lastly, a few vendors have begun offering hosted acceleration. Instead of placing an appliance at the customer sites, the devices are hosted at a POP near each branch office to accelerate the connection over the Internet cloud to a POP near headquarters.

Conclusion

While bandwidths continue to grow, application performance issues never go away. Latencies don’t change and applications chew up extra bandwidth as quickly as it can be provisioned, while users become ever more demanding for responsive applications. Whether you run a big network or a small one, WAN acceleration is quickly becoming a critical component to take full advantage of the network.

DC Palter is president of Apposite Technologies, the developer of the Linktropy and Netropy WAN emulation products for testing application performance. He was previously vice-president of Mentat (acquired by Packeteer/BlueCoat), an early pioneer in WAN acceleration. He holds three patents in WAN acceleration and is the author of a textbook on WAN acceleration for satellite networks.