Introduction

Many SmallNetBuilder readers realize that the prices that companies like NETGEAR, QNAP, Synology, Thecus and others charge for their high-end "business class" NASes are significantly more than the cost of equivalent NASes that they could build themselves. Of course, we all realize that companies are in business to make a profit, so they aren’t going to be giving the fruits of their labor away.

But sometimes the gap between what it costs to build a NAS yourself and what you’d pay for a comparable commercial product is big enough to make you take the plunge and build your own.

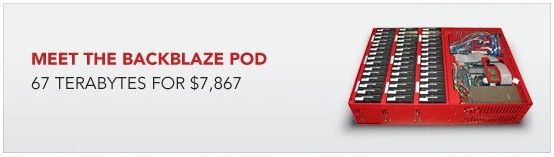

Cloud backup company Backblaze had the same idea when they looked at the cost of buying "big iron" storage. Backblaze provides unlimited "cloud"-based backup, including multiple versions, for $5 per month per Mac OS or Windows computer. So they need a lot of storage: multiple petabytes (1 PB = 1,000 TB) worth, in fact.

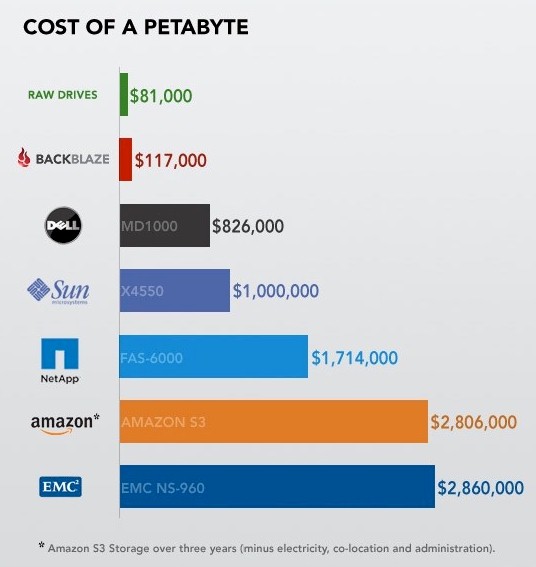

They first looked at commercial solutions. But after pondering the comparison chart in Figure 1, they decided that they could recoup the cost of designing and building their own storage pretty quickly.

Figure 1: Cost of a Petabyte

(Courtesy Backblaze)

I’m not going to go into all the details of Backblaze’s design. They do a great job of that in their blog post that this article draws heavily from. Instead, I’ll see what builders of much smaller DIY NASes can learn from Backblaze’s exercise.

I’ll note up front that Backblaze’s Pod is not built to reach 100 MB/s speeds; their web-based application simply doesn’t demand it. But there are still valuable lessons to be learned.

The Basic Design

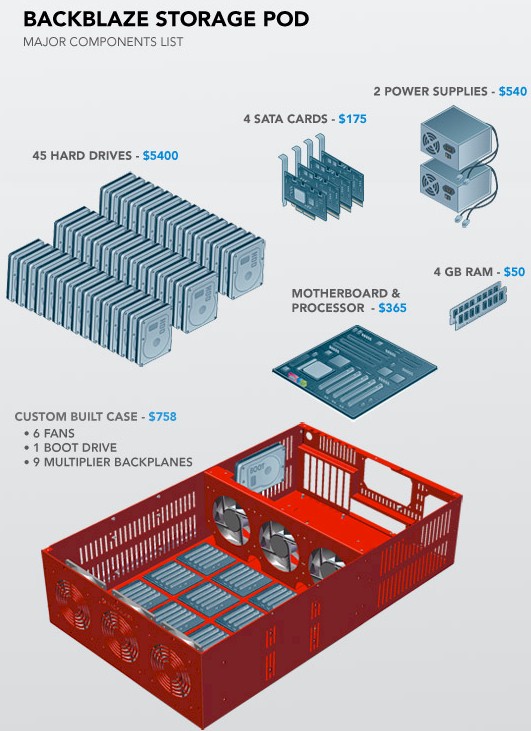

Backblaze’s Storage Pod is made up of a custom metal case with commodity hardware inside. Each Pod contains one Intel motherboard with four SATA cards plugged into it (Figure 2). Nine SATA cables run from the cards to nine port multiplier backplanes, each of which has five hard drives plugged directly into it (45 hard drives in total).

Figure 2: Exploded view

(Courtesy Backblaze)
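To make the fan-out concrete, here is a minimal Python sketch that models the Pod’s drive topology as plain data. The class and property names are hypothetical. The only inputs taken from Backblaze’s description are the nine backplanes and the five drives per backplane.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Backplane:
    """A port multiplier backplane: one SATA cable in, several drives attached."""
    drive_slots: int = 5

@dataclass(frozen=True)
class StoragePod:
    backplanes: tuple[Backplane, ...]

    @property
    def sata_cables(self) -> int:
        # One cable runs from a controller card to each backplane.
        return len(self.backplanes)

    @property
    def drive_count(self) -> int:
        # Every backplane fans its single cable out to its drive slots.
        return sum(bp.drive_slots for bp in self.backplanes)

pod = StoragePod(backplanes=tuple(Backplane() for _ in range(9)))
print(pod.sata_cables, pod.drive_count)  # prints: 9 45
```

The point of the structure is that drive count scales with backplanes, not with controller ports. Each additional cable brings five more drives.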

The two most important factors to note are that the cost of the hard drives dominates the price of the overall pod and that the rest of the system is made entirely of commodity parts.

The Case

The biggest hurdle that most NAS DIYers face is finding a suitable case. Having a custom case (Figure 3) designed and fabricated isn’t feasible for most individuals. But creatively modding something that’s close to what you want is.

For example, if you’re willing to go with a three-drive RAID system, I like the "Better Box" design, which uses an Apevia case and a three-bay SATA module.

If you’re willing to spend a bit more for the benefit of no metal-bending, then Chenbro’s ES34069 is a popular choice for four-drive designs.

Figure 3: Storage pod case

(Courtesy Backblaze)

Thermal Design

The Pod uses six 120mm (~4.7") fans in a push/pull arrangement to keep the drives cool. Backblaze’s goal was to keep the ambient temperature inside the Pod at or below 50°C. The reasoning was that while most drives are rated for operation at 60°C or higher, power supplies are typically rated to only 50°C.
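That reasoning boils down to one rule: the enclosure’s ambient limit is set by its least heat-tolerant component. Here is a minimal sketch using the two ratings quoted above. The function name is hypothetical.

```python
def ambient_limit_c(ratings_c: dict[str, float]) -> tuple[str, float]:
    """Return the component with the lowest temperature rating and that rating (°C)."""
    weakest = min(ratings_c, key=ratings_c.get)
    return weakest, ratings_c[weakest]

# Ratings as quoted above: drives 60°C or higher, power supplies 50°C.
ratings = {"hard drive": 60.0, "power supply": 50.0}
component, limit = ambient_limit_c(ratings)
print(f"Keep ambient at or below {limit}°C (limited by the {component})")
# prints: Keep ambient at or below 50.0°C (limited by the power supply)
```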

Backblaze says that drive temperature sensors typically report under 40°C, which means the ambient temperature is lower still, so the design goal was easily met. In fact, Backblaze found that they could run with only one or two fans and still meet their thermal goals. But the fans draw relatively little power, and because fans have a relatively high failure rate, using six of them boosts reliability through redundancy.
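Why run six fans when one or two would do? A quick binomial calculation shows how that redundancy pays off, assuming fans fail independently. The 10% per-fan failure probability is a made-up figure for illustration, not Backblaze data, and the helper name is hypothetical.

```python
from math import comb

def p_enough_fans(total: int, needed: int, p_fail: float) -> float:
    """Probability that at least `needed` of `total` fans still work,
    assuming each fan fails independently with probability `p_fail`."""
    p_ok = 1.0 - p_fail
    return sum(
        comb(total, k) * p_ok**k * p_fail**(total - k)
        for k in range(needed, total + 1)
    )

P_FAIL = 0.10  # hypothetical per-fan failure probability, for illustration only
print(f"2 fans, need 2: {p_enough_fans(2, 2, P_FAIL):.6f}")  # prints: 2 fans, need 2: 0.810000
print(f"6 fans, need 2: {p_enough_fans(6, 2, P_FAIL):.6f}")  # prints: 6 fans, need 2: 0.999945
```

Under these assumptions, four spare fans turn a roughly one-in-five chance of running short into about a one-in-twenty-thousand chance.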

The fans blow from the front of the case to the rear (where the power supplies are), so that power supply heat isn’t blown onto the drives.

Motherboard

NAS DIYers frequently spend a lot of time agonizing over the choice of motherboard. But since NASes don’t demand many features from a motherboard, cost, form factor and CPU support tend to be the biggest influences.

One factor that didn’t influence Backblaze’s choice of an Intel BOXDG43NB LGA 775 G43 ATX motherboard was its onboard SATA ports. The Storage Pod doesn’t actually use any onboard SATA because, despite Intel’s claims of port multiplier support in its ICH10 south bridge, Backblaze noticed "strange results" in their performance tests. Instead, they relied on SATA controller cards and port multiplier backplanes to support the 45 SATA drives (more shortly).

Backblaze didn’t have any exotic memory requirements either, so two DIMM slots holding a total of 4 GB of DDR2-800 RAM were fine. For cost reasons, however, they did compromise on the number of PCIe x1 slots, which forced them to use both PCIe and PCI SATA controllers.

SATA

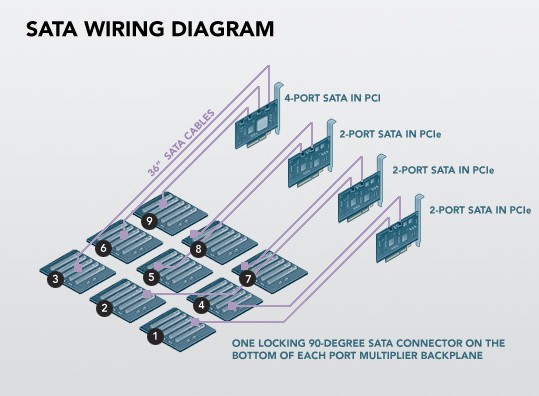

Speaking of SATA, Figure 4 shows the SATA subsystem, which is composed of three two-port Syba SD-SA2PEX-2IR PCI Express SATA II controller cards and one Addonics ADSA4R5 four-port SATA II PCI controller card. Each of the nine SATA cables connects to a Chyang Fun Industry (CFI Group) CFI-B53PM five-port SATA backplane.

Figure 4: SATA connections

(Courtesy Backblaze)
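The controller lineup can be sanity-checked with a simple port budget: the cards must provide at least one SATA port per backplane. Here is a minimal sketch using the card counts listed above. The dictionary layout and function name are just for illustration.

```python
# (cards installed, SATA ports per card), from the controller lineup above
controllers = {
    "Syba SD-SA2PEX-2IR (PCIe)": (3, 2),
    "Addonics ADSA4R5 (PCI)": (1, 4),
}
BACKPLANES = 9  # one SATA cable per port multiplier backplane

def total_ports(cards: dict[str, tuple[int, int]]) -> int:
    """Sum of SATA ports across all installed controller cards."""
    return sum(count * ports for count, ports in cards.values())

ports = total_ports(controllers)
spare = ports - BACKPLANES
print(f"{ports} ports available, {BACKPLANES} needed, {spare} spare")
# prints: 10 ports available, 9 needed, 1 spare
```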

The card and backplane choices were limited to products using Silicon Image devices. In Backblaze’s view, Silicon Image pioneered port multiplier technology, and Backblaze feels that their chips work best together. The port multiplier backplanes use a Silicon Image SiI3726, the SYBA cards use a SiI3132, and the Addonics card has a Silicon Image SiI3124.

I asked whether there was a performance hit from using the PCI-based card, and Backblaze confirmed that it did yield lower performance than the PCIe cards. But with an overall throughput of 25 MB/s, the Addonics card was still fast enough for Backblaze’s needs. They noted that at 25 MB/s, 2 TB of data can be written in a day and a Pod filled within a month.
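Backblaze’s throughput math is easy to reproduce. This minimal sketch uses decimal units (1 TB = 10^12 bytes, consistent with 1 PB = 1,000 TB above) and assumes the 25 MB/s rate is sustained around the clock. The 58 TB figure is the usable capacity per Pod given later in this article.

```python
MB = 10**6            # decimal units, consistent with 1 PB = 1,000 TB
TB = 10**12
SECONDS_PER_DAY = 24 * 60 * 60

def bytes_per_day(rate_mb_s: float) -> float:
    """Bytes written in one day at a sustained rate given in MB/s."""
    return rate_mb_s * MB * SECONDS_PER_DAY

def days_to_fill(capacity_tb: float, rate_mb_s: float) -> float:
    """Days needed to fill `capacity_tb` at a sustained `rate_mb_s`."""
    return capacity_tb * TB / bytes_per_day(rate_mb_s)

print(f"{bytes_per_day(25) / TB:.2f} TB per day")   # prints: 2.16 TB per day
print(f"{days_to_fill(58, 25):.1f} days to fill")  # prints: 26.9 days to fill
```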

Drives

As you might suspect, hard drive selection was very important. Backblaze chose Seagate 1.5 TB Barracuda 7200.11 (ST31500341AS) drives because they proved "more stable" in RAID arrays in Backblaze’s mass testing than WD equivalents and were well priced for their capacity.

They said they also tested Samsung and Hitachi drives, which worked well too. But the Seagate 1.5 TB drives had the best combination of price, capacity and density.

Backblaze also said that all new drives get put through a "pounding" during qualification, and that "every single drive deployed" goes through a burn-in test. They standardize only on a drive model and don’t try to match (or mix) lots, firmware revisions or manufacturing dates.

The non-critical (to performance at least) OS drive is a WD Caviar 80GB 7200 RPM IDE (WD800BB).

OS and File System

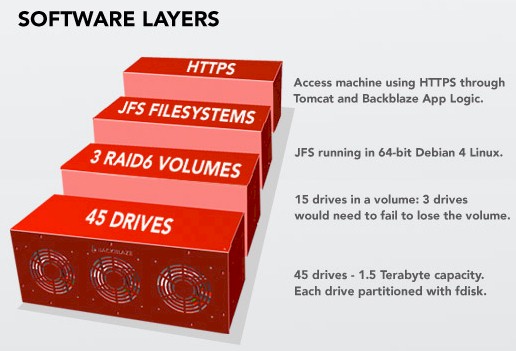

The Pod boots 64-bit Debian 4 Linux using the JFS file system, with all access to and from the Pod via HTTPS (Figure 5).

Figure 5: Backblaze Pod software architecture

(Courtesy Backblaze)

Backblaze went with JFS because they needed a file system that would support multi-terabyte volumes, run on Debian and have a good support community. Debian was chosen because "it is truly free"; Backblaze found that some other free open source OSes serve more as entry points to paid offerings.

To create a volume, fdisk is first used to create one partition per drive. Then 15 1.5 TB drives are combined into a single RAID6 volume using mdadm, and the volume is finally formatted with JFS using a 4 KB block size. Formatted (usable) space is about 87% of the 67 TB of raw hard drive capacity, or about 58 TB per Pod.
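The 87% figure follows mostly from RAID6 overhead. RAID6 gives up two drives’ worth of capacity per volume to parity, and splitting 45 drives into 15-drive volumes implies three volumes per Pod. That count is an inference from the numbers above, not something Backblaze states directly. Here is a minimal sketch of the arithmetic, ignoring file system overhead.

```python
import math

def raid6_usable_tb(drives: int, drive_tb: float) -> float:
    """Usable capacity of one RAID6 volume: two drives' worth goes to parity."""
    if drives < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drives - 2) * drive_tb

DRIVE_TB = 1.5
DRIVES_PER_POD = 45
DRIVES_PER_VOLUME = 15

volumes = DRIVES_PER_POD // DRIVES_PER_VOLUME                     # 3 volumes per Pod
raw_tb = DRIVES_PER_POD * DRIVE_TB                                # 67.5 TB raw
usable_tb = volumes * raid6_usable_tb(DRIVES_PER_VOLUME, DRIVE_TB)  # 58.5 TB
print(f"{usable_tb} of {raw_tb} TB usable ({usable_tb / raw_tb:.1%})")
# prints: 58.5 of 67.5 TB usable (86.7%)

# Pods needed per petabyte at the ~58 TB of formatted space per Pod
pods_per_pb = math.ceil(1000 / 58)
print(pods_per_pb)  # prints: 18
```

The parity-only result lines up closely with the roughly 58 TB of formatted space Backblaze reports. The small remaining gap is plausibly file system overhead.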

As noted earlier, all reads and writes go over HTTPS, handled by custom Backblaze application logic running under Apache Tomcat 5.5. HTTPS was chosen over iSCSI, NFS, SQL, Fibre Channel, etc. because Backblaze believes those technologies don’t scale as cheaply, as reliably or as large as HTTPS, and can’t be managed as easily.

Summing Up

The design process is always a battle of cost, time and performance, with successful designs striking the right balance. Perhaps the most important lesson of the Backblaze Pod design is how its creators made their tradeoffs to achieve their design goals of low cost, high density and large capacity.

The Pod ended up with only two custom components: the case and the application logic running under Apache Tomcat. This minimized cost and time by leveraging mass-produced components and, as Backblaze put it, being able to "stand on the shoulders of giants" rather than re-invent the wheel.

The other key was understanding how much performance was enough. Since the Pod is part of a web-based service, it doesn’t need to run at blazing speeds. Given the relatively slow Internet uplinks and the application logic that sit between a user’s computer and the Pod’s drives, Backblaze understood how fast the Pod had to be and designed to that goal. Pushing for 100 MB/s reads and writes would have made no sense when the larger system couldn’t use that speed.
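One way to frame "how fast is fast enough" is to ask how many customers would have to upload at full speed at the same time before the Pod’s disks became the bottleneck. This is a minimal sketch. The 1 Mbit/s uplink is a hypothetical figure, not a Backblaze number, and the function name is illustrative.

```python
def uploads_to_saturate(pod_mb_s: float, uplink_mbit_s: float) -> float:
    """Number of simultaneous full-speed uploads needed to saturate the Pod."""
    uplink_mb_s = uplink_mbit_s / 8  # megabits/s -> megabytes/s
    return pod_mb_s / uplink_mb_s

HYPOTHETICAL_UPLINK_MBIT_S = 1.0
for pod_speed in (25, 100):  # MB/s: the figure Backblaze quoted vs. a "fast NAS" target
    n = uploads_to_saturate(pod_speed, HYPOTHETICAL_UPLINK_MBIT_S)
    print(f"{pod_speed} MB/s Pod: {n:.0f} simultaneous uploads to saturate")
# prints: 25 MB/s Pod: 200 simultaneous uploads to saturate
# prints: 100 MB/s Pod: 800 simultaneous uploads to saturate
```

Under these assumptions, a faster Pod only pays off if hundreds of customers are uploading at once, which is the kind of system-level check that let Backblaze stop short of 100 MB/s.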

In fact, there may be other cost savings yet to be made. When I asked how they settled on a relatively expensive (and power hungry) Intel E8600 Core 2 Duo as the Pod’s CPU, Backblaze confessed that they didn’t do extensive testing. Given the tradeoff between saving perhaps $100 on the CPU and spending their time on other aspects of the design, they chose to throw a little money at the processor.

Now that the design is complete, however, they may look at lower-power CPUs (and drives) for a future version of the Pod, because the savings in power and facility cooling can make a real difference in ongoing operational costs.
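Here is a back-of-the-envelope sketch of why lower-power parts matter. A component running 24x7 pays for its wattage every hour of the year, and the facility pays again to remove the heat. The 40 W saving, $0.10/kWh rate, 50% cooling overhead and 100-Pod fleet below are hypothetical numbers for illustration only.

```python
HOURS_PER_YEAR = 24 * 365

def annual_power_cost(watts: float, usd_per_kwh: float, cooling_overhead: float = 0.0) -> float:
    """Yearly electricity cost of `watts` running 24x7, plus cooling
    expressed as a fraction of that electricity cost."""
    kwh = watts * HOURS_PER_YEAR / 1000
    return kwh * usd_per_kwh * (1 + cooling_overhead)

per_pod = annual_power_cost(40, 0.10, cooling_overhead=0.5)
print(f"${per_pod:.2f} per Pod per year, ${per_pod * 100:,.0f} across 100 Pods")
# prints: $52.56 per Pod per year, $5,256 across 100 Pods
```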