Introduction

Quite some time ago, a reader wrote to complain that he had to constantly power-cycle his access point because it kept locking up on him. He also posed a question: “If I spend more, do I get a more reliable product?”

I thought that was a very good question, but I didn’t have a good way to answer it at the time. Although single-client throughput testing is fine for seeing how fast WLAN gear can go, a single wireless link isn’t enough to make current-generation APs break a sweat.

Fortunately, time and the march of technology have provided a way to simplify WLAN load testing in the form of Communication Machinery Corporation’s (CMC) Emulation Engine (since acquired by Ixia). Armed with this handy little box, I set out to separate the 98-pound weaklings from the kings of the wireless beach. What I found, however, may surprise you…

CMC’s Emulation Engine

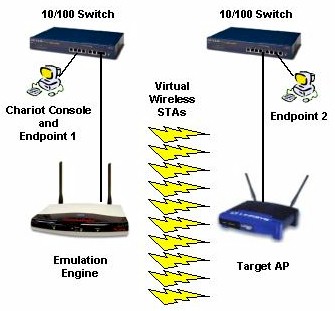

Think of the Emulation Engine (EE) as an access point in reverse. Instead of connecting multiple wireless clients, or stations (STAs), to a wired LAN, it generates multiple wireless STAs that transmit data to and receive data from a target wireless AP or router. The data can be either simple internally generated ping traffic or traffic from an external generator such as Ixia’s IxChariot.

Figure 1: CMC’s Emulation Engine

These multiple STAs aren’t the product of a bunch of radios crammed into the EE’s enclosure. They are virtual stations (vSTAs), all generated from a single mini-PCI radio. Each vSTA has its own IP and MAC address and individually authenticates, associates, and transmits and receives data.
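The EE’s own configuration interface isn’t covered here, so the following is only a hypothetical Python sketch of the bookkeeping the paragraph above implies: every vSTA gets a distinct MAC and IP address. The `make_vstas` helper, the locally administered MAC prefix, the 192.168.1.0/24 subnet and the starting host number are all illustrative assumptions, not CMC settings.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualStation:
    index: int
    mac: str                     # unique per vSTA
    ip: ipaddress.IPv4Address    # unique per vSTA


def make_vstas(count, subnet="192.168.1.0/24", first_host=100):
    """Hypothetical allocator: one distinct MAC and IP per virtual station.

    The subnet, starting host and MAC prefix are illustrative assumptions,
    not Emulation Engine defaults.
    """
    hosts = list(ipaddress.ip_network(subnet).hosts())
    start = first_host - 1          # hosts()[0] is the .1 address
    if start + count > len(hosts):
        raise ValueError("subnet too small for the requested vSTA count")
    stations = []
    for i in range(count):
        # First octet 0x02 = locally administered, unicast address.
        octets = [0x02, 0x00, 0x00, 0x00, (i >> 8) & 0xFF, i & 0xFF]
        mac = ":".join(f"{o:02x}" for o in octets)
        stations.append(VirtualStation(i, mac, hosts[start + i]))
    return stations


for sta in make_vstas(3):
    print(sta.index, sta.mac, sta.ip)
```

Deriving both addresses from the vSTA index keeps them unique and makes it easy to tell which virtual station a given frame or IxChariot endpoint belongs to.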

CMC produces a number of Emulation Engine models and recently upgraded its product line to the more powerful XT platform. It also introduced a model capable of exercising APs using data secured by Wi-Fi Protected Access (WPA). But I agreed to use a previous-generation EE a/b/g model: its ability to test 802.11a, b and g APs with WEP enabled was fine for my purposes, and CMC was able to loan it to me for longer than any of the newer platforms.

The APs

Even though wireless routers are more popular with most consumers, I decided to focus on 802.11g access points, at least for my first pass at wireless load testing. Part of this was a bit of laziness: APs are easier to deal with because they don’t create a separate subnet. The other reason was that I figured AP code would be simpler and more robust, giving the products the best chance of surviving the tortures I had planned.

As for choosing 802.11g over 11b APs, I figured that even though 11b APs are more widely deployed, the future is most certainly in 11g (or dual-band) APs. And, once again in a nod toward giving the APs the best chance of success, I figured the designs and processors in 11g APs would better stand up to heavy traffic loads.

Table 1 shows the APs that I put to the test. I intended to have more “enterprise-grade” APs on the list and asked Cisco for an Aironet 1100 and NETGEAR for a WG302. Cisco asked me to fill out a review request form, then never responded; NETGEAR originally agreed to supply a WG302 but never delivered it. I also asked D-Link whether it wanted to participate in the review, but never received a reply.

| Manufacturer | 3Com | ASUS | Buffalo Technology | Gigabyte | Linksys | SMC |
|---|---|---|---|---|---|---|
| Model | 3CRWE725075A | WL300g | WLA-G54C | GN-A17GU | WAP54G V2 | SMC2552W-G |
| Price | $338 | $76 | $69 | $108 | $60 | $314 |
| FCC ID | HED WL463EXT | MSQ WL-300g | QDS-BRCM1005 | JCK-GN-WIAG | Q87-WAP54Gv2 | HED SMC2552WG |
| Firmware | 2.04.51 | 1.6.5.3 | 2.04 | 2.03, Thu, 15 Jan 2004 06 | v2.07 Apr 08 2004 | v2.0.22 |
| Processor | Motorola PowerPC 8241 | Broadcom BCM4702 | Broadcom BCM4702 | Renesas SH4, 7751R | Broadcom BCM4712 | Motorola PowerPC 8241 |
| Wireless | Atheros AR5212 + AR2112 | Broadcom AirForce | Broadcom AirForce (4306KFB) | Atheros AR5213 + AR2112 | Broadcom AirForce | Atheros AR5212 + AR2112 |
| RAM (16-bit words) | 8Mword | 8Mword | 32Mword | 8Mword | 4Mword | 8Mword |
| Flash (16-bit words) | 2Mword | 2Mword | 4Mword | 1Mword | 1Mword | 2Mword |
| Maximum STAs | 1000 | 253 | No spec. (35 typical) | 64 | 32 | 64 |

Table 1: The Contenders
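Table 1 lists memory in 16-bit words, so doubling a figure gives its size in bytes: the Linksys WAP54G V2’s 4Mword of RAM is 8MB, while the 8Mword parts carry 16MB. A small Python sketch of that conversion, assuming the “M” multiplier is the same in both units (the `mword_to_mbyte` name is just illustrative):

```python
BYTES_PER_WORD = 2  # one 16-bit word = 2 bytes

# (RAM, flash) sizes from Table 1, in Mword (millions of 16-bit words).
MEMORY_MWORD = {
    "3Com 3CRWE725075A": (8, 2),
    "ASUS WL300g": (8, 2),
    "Buffalo Tech WLA-G54C": (32, 4),
    "Gigabyte GN-A17GU": (8, 1),
    "Linksys WAP54G V2": (4, 1),
    "SMC2552W-G": (8, 2),
}


def mword_to_mbyte(mwords):
    """Convert a size in Mword to MB, keeping the same 'M' multiplier."""
    return mwords * BYTES_PER_WORD


for model, (ram, flash) in MEMORY_MWORD.items():
    print(f"{model:22} RAM {mword_to_mbyte(ram):3} MB   flash {mword_to_mbyte(flash):2} MB")
```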


The APs – more

You’ll note from the table that products based on Broadcom and Atheros chipsets are equally represented, but that there aren’t any products based on Agere Systems, Conexant PRISM, TI, Marvell, Inprocomm, Realtek, RaLink or any other wireless chipsets. This wasn’t an intentional snub to any of these companies; it’s just the way things worked out.

You’ll also note that wherever you find Broadcom wireless chipsets, you also find Broadcom processors powering the APs, while the APs with Atheros chipsets use either Motorola PowerPC or Renesas processors.

So that you can see how (dis)similar the designs are, I’ve included board pictures for your perusal below.

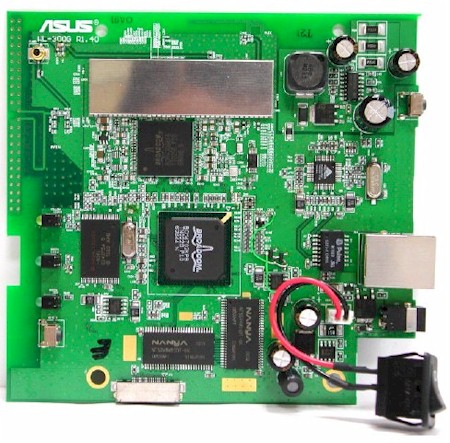

Figure 2: ASUS WL300g board

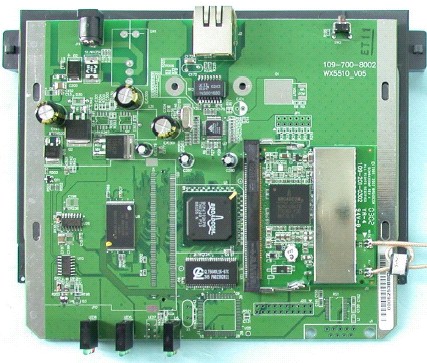

Figure 3: Buffalo Tech WLA-G54C board

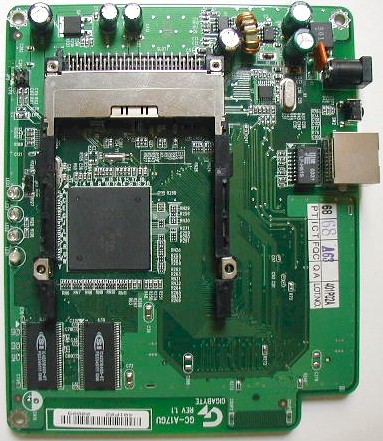

Figure 4: Gigabyte GN-A17GU board

Figure 5: SMC2552W-G and 3Com 3CRWE725075A board

Yes, that’s right, the SMC and 3Com products are twins on the inside (sourced from SMC’s parent Accton) but with different plastic shells on the outside.

Figure 6: Linksys WAP54G V2 board

Also worthy of note is that I tested the currently shipping Linksys WAP54G (the V2) shown in Figure 6 and not the original design shown in Figure 7. You can see that the V2 is a very different cost-reduced animal using Broadcom’s less-expensive 4712 AP-on-a-chip and sporting the smallest amount of RAM (4Mwords) of any of the products tested.

Figure 7: Linksys WAP54G original board (not tested)

The Setup

All tests used the setup shown in Figure 8. The IxChariot console and first endpoint were run on an Athlon 64 3000+ machine running WinXP Pro SP1 that was on its own private 10/100 network connected to a CMC Emulation Engine a/b/g.

The EE was used to generate 802.11g vSTAs (from 1 to 64 of them), all of which were associated with the access point under test. Since the AP is just a bridge, a second computer – a 2.4GHz Pentium 4 running WinXP Home SP1 – was connected to the AP on a second private LAN and used as the second IxChariot endpoint to receive all the traffic.

Figure 8: The Test setup

The AP under test and Emulation Engine were located roughly 6 feet (2m) apart, and no other 2.4GHz stations or interference were present. You may think that the latter statement is a bold one, given how widespread wireless networking equipment is. But I live in a rural location without access to broadband and, believe me, the 2.4GHz airwaves are damned quiet around here!

As I mentioned previously, simple ping traffic can be generated from the EE’s web interface, but I quickly abandoned that approach as not flexible enough. I instead opted for the EE’s command-line interface and its external mode, which allows vSTAs to accept traffic from an external generator (Ixia’s IxChariot in this case).

I set each vSTA so that it could be stimulated by IxChariot and left everything else at the EE’s defaults. Note that the default settings make each vSTA a persistent connection: after its initial authentication and association with the target AP, each vSTA is supposed to stay authenticated and associated. This means my tests didn’t include vSTAs cycling through authentication, association, de-authentication and disassociation. The Emulation Engine is capable of doing this, but I didn’t have the time or the inclination to get into the Perl programming interface required to make the EE perform those tricks.
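To make the difference between persistent and cycling vSTAs concrete, here is a simplified Python model of the three 802.11 station states (unauthenticated/unassociated, authenticated/unassociated, authenticated/associated). It is a sketch of the standard’s state idea only; it is not the EE’s Perl interface, and the `step` and `run` helpers are hypothetical.

```python
from enum import Enum


class StaState(Enum):
    UNAUTH_UNASSOC = 1  # State 1: not authenticated, not associated
    AUTH_UNASSOC = 2    # State 2: authenticated, not yet associated
    AUTH_ASSOC = 3      # State 3: authenticated and associated (data allowed)


# Simplified transitions for the four management actions named above.
TRANSITIONS = {
    ("authenticate", StaState.UNAUTH_UNASSOC): StaState.AUTH_UNASSOC,
    ("associate", StaState.AUTH_UNASSOC): StaState.AUTH_ASSOC,
    ("disassociate", StaState.AUTH_ASSOC): StaState.AUTH_UNASSOC,
    ("deauthenticate", StaState.AUTH_UNASSOC): StaState.UNAUTH_UNASSOC,
    ("deauthenticate", StaState.AUTH_ASSOC): StaState.UNAUTH_UNASSOC,
}


def step(state, action):
    """Apply one management action, rejecting ones invalid in this state."""
    try:
        return TRANSITIONS[(action, state)]
    except KeyError:
        raise ValueError(f"{action!r} is not valid from {state.name}") from None


def run(actions, state=StaState.UNAUTH_UNASSOC):
    for action in actions:
        state = step(state, action)
    return state


# Persistent vSTA (the EE default used here): connect once, then stay in State 3.
PERSISTENT = ["authenticate", "associate"]
# Cycling vSTA (not tested here): one full connect/disconnect cycle.
CYCLING = ["authenticate", "associate", "disassociate", "deauthenticate"]

print(run(PERSISTENT).name, run(CYCLING).name)
```

A persistent vSTA exercises the AP’s State 3 data path for the whole run, while a cycling vSTA would also stress the AP’s management-frame handling on every pass.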

I configured all the APs and the EE to use 128-bit WEP, since no self-respecting WLAN should be running without at least the protection – flawed though it may be – that WEP affords. I left all access points at their default mode settings, all of which were either “Auto” or “Mixed (11b/g)”.

I also left access point 11b protection settings at their defaults, figuring this didn’t matter since I made sure there were no 11b STAs in range during my testing. By the way, only the Buffalo Tech WLA-G54C came with protection enabled by default, and the SMC / 3Com twins didn’t provide this setting at all.

Finally, I left any “burst” mode settings at their defaults which mirrored the 11b protection settings – or lack thereof in the case of the SMC / 3Com twins. Note that everything involved in the test was powered through APC UPSes, to avoid problems due to power glitches.

The Tests – Single STA Throughput

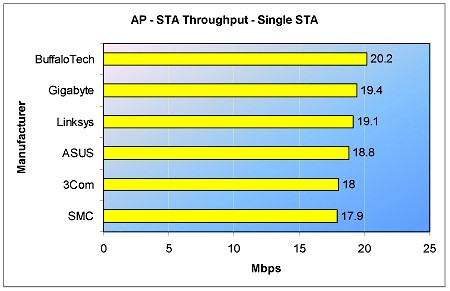

To shake things down and get the hang of using the EE, I started out nice and simple, using the EE to generate one virtual STA (vSTA) to check the maximum throughput of each AP with my test setup.

The results shown in Figures 9 and 10 represent the average throughput from a 5-minute IxChariot run using the throughput script with its default file size of 1,000,000 bytes per timing record.
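Each timing record converts to a throughput figure as bits moved divided by elapsed time: 1,000,000 bytes is 8,000,000 bits, so a record that completes in 0.4 seconds corresponds to 20Mbps. The Python sketch below shows that arithmetic; averaging many records as total bits over total time is an assumption for illustration, and IxChariot’s own averaging method may differ.

```python
def record_throughput_mbps(bytes_transferred, seconds):
    """Throughput of one timing record in Mbps (1 Mbps = 10**6 bits/s)."""
    return bytes_transferred * 8 / seconds / 1e6


def average_throughput_mbps(record_seconds, bytes_per_record=1_000_000):
    """Average over many records, taken here as total bits / total time.

    This weighting is an assumption; IxChariot may average differently.
    """
    if not record_seconds:
        raise ValueError("need at least one timing record")
    total_bits = bytes_per_record * 8 * len(record_seconds)
    return total_bits / sum(record_seconds) / 1e6


# A 1,000,000-byte record that takes 0.4 s works out to 20 Mbps.
print(record_throughput_mbps(1_000_000, 0.4))
```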

Figure 9: STA to AP throughput – One STA – 5min.

Figure 10: AP to STA throughput – One STA – 5 min.

I started out letting the Emulation Engine use its automatic rate algorithm, but noticed lower performance than expected during my initial testing. After consulting with a CMC applications engineer, I decided to force the EE’s rate to 54Mbps. This definitely improved the measured throughput for the products using the Broadcom chipset, but degraded the STA to AP speed for the 3Com and SMC Atheros-based APs.

By looking at the statistics accumulated by the Emulation Engine, I was able to see that the main contributor to the lower forced-rate speed was that vSTA transmit errors increased by about 8X when the rate was forced for the 3Com and SMC twins. I also found that the uplink speed of the Gigabyte AP was the same whether the EE rate was forced or left to autorange. I attribute this to the fact that the Gigabyte AP uses the same Atheros AR2112 radio, but the newer AR5213 MAC/baseband processor instead of the AR5212 MAC/baseband used by the 3Com / SMC AP.

So the results in Figure 9 reflect letting the EE auto-range its transmit rate for the 3Com and SMC AP runs, while being forced to 54Mbps for all the other products. All the test runs shown in Figure 10 have the EE rate forced to 54Mbps.

The Tests – 32 STA Throughput

Once I had the test setup shaken down, I started in on load testing. I first jumped directly to trying the maximum load that the EE could generate – 64 vSTAs – but ran into too many problems, with too many possible causes. So I dropped back to using a 32 STA load, since all the manufacturers said their products could handle at least that many STAs. Note that Linksys said that 32 was the maximum number of STAs that the WAP54G is designed to handle.

I also decided to increase the test run time from 5 minutes to 4 hours for the 32 STA tests. There’s nothing magic about 4 hours, I just chose it because it was as good a place to start as any and let me get three to four test runs in per day.

Figure 11: STA to AP throughput – 32 STA – 4 hours

Figure 11 shows that all products made it through the four hour uplink test with results similar to those of the single STA tests. Note again that the 3Com / SMC tests were done with the EE set to autorange its transmit rate, while all other tests were done with the rate forced to 54Mbps.

The throughput numbers in the graph represent the sum of the average throughputs measured for the 32 vSTAs. The actual per-STA throughputs were, of course, much lower, with averages typically about 1/32 of the total. So for the range of results shown, each STA was puttering along at an average rate of, at best, slightly over 0.5Mbps and, at worst, slightly under 0.4Mbps.
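The per-STA figures follow from dividing the aggregate by 32, and working backward, 0.5Mbps × 32 = 16Mbps and 0.4Mbps × 32 = 12.8Mbps bound the totals in Figure 11. A short Python sketch of that arithmetic, assuming an even split across vSTAs (the helper names are illustrative):

```python
NUM_VSTAS = 32


def per_sta_mbps(total_mbps, stations=NUM_VSTAS):
    """Average per-station rate if the total is shared evenly."""
    return total_mbps / stations


def total_for_per_sta_mbps(per_sta, stations=NUM_VSTAS):
    """Inverse: aggregate throughput implied by a per-station average."""
    return per_sta * stations


# Linksys WAP54G uplink total from the text: 14.7 Mbps over 32 vSTAs.
print(f"{per_sta_mbps(14.7):.3f} Mbps per STA")
# The quoted per-STA bounds map back to these aggregate totals.
print(total_for_per_sta_mbps(0.5), total_for_per_sta_mbps(0.4))
```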

The only surprise in the results was the change in the Linksys WAP54G’s throughput, which increased from 12.3Mbps in the single-STA case to 14.7Mbps! My explanation is that the single-STA result was skewed by the WAP54G’s high throughput variation and periodic “dropouts” over the shorter 5-minute test. Both effects were mitigated by the longer test time and the lower per-STA average throughput.

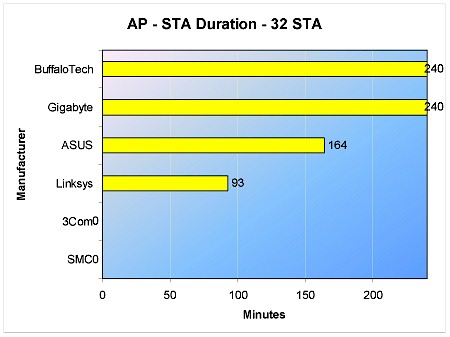

Downlink takes some APs Down

The 32 STA test in the AP to STA (downlink) direction pushed some of the APs to their breaking point. Only the Buffalo Tech WLA-G54C and Gigabyte GN-A17GU APs finished the full four hours, with average total throughputs of 21.5 and 20Mbps respectively. Figure 12 shows the run time before the first error for the other APs (I stopped the test after one error from any of the STAs).

Figure 12: AP to STA Test Duration – 32 STA Throughput test

The exact time before first error isn’t important, but by repeating the test for the failing APs I found that the times indicate the relative robustness of the products in this test. The zero times for the 3Com / SMC twins mean that I couldn’t even get those products to start the test.

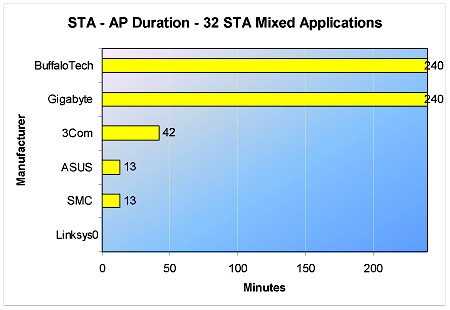

The Tests – 32 STA Mixed Application

Hitting the APs with 32 STAs going full blast was too much for some to handle and, to be fair, isn’t really indicative of the load likely to be seen in actual use. So I devised a more realistic test that used a mix of applications, with random delays between script executions.

I mixed six different IxChariot scripts that emulated multiple wireless clients performing the following activities: HTTP graphics download (5), POP3 mail download (5), SMTP mail send (5), FTP upload (7), FTP download (5) and RealAudio audio-video stream (5). The number in parentheses is the number of clients running each script. Details of the scripts can be found at the bottom of this page.
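As a quick sanity check, the client counts in the mix add up to exactly 32, matching the 32 STA load. The Python sketch below tallies them, assuming one script per vSTA; the dictionary is just an illustrative way to hold the counts.

```python
# Client counts per IxChariot script, as listed in the text.
SCRIPT_MIX = {
    "HTTP graphics download": 5,
    "POP3 mail download": 5,
    "SMTP mail send": 5,
    "FTP upload": 7,
    "FTP download": 5,
    "RealAudio audio-video stream": 5,
}

total_clients = sum(SCRIPT_MIX.values())
# Assumes one script per vSTA in the 32 STA test.
assert total_clients == 32, f"mix covers {total_clients} clients, expected 32"

for script, clients in SCRIPT_MIX.items():
    print(f"{script:30} {clients:2} clients ({clients / total_clients:.0%})")
```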

I had the scripts use random, normally distributed delays of between 1 and 1000ms between executions. All scripts sent or received data as fast as they could, except for the RealAudio streaming test, which used a 300kbps rate.
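A normally distributed delay confined to 1 to 1000ms can be modeled as a truncated normal. The Python sketch below draws such delays by rejection sampling. The mean (centered in the range) and the standard deviation (a quarter of the range width) are assumptions, since the article doesn’t give IxChariot’s parameters, and `normal_delay_ms` is a hypothetical helper.

```python
import random


def normal_delay_ms(rng, low=1.0, high=1000.0, sd=None):
    """Draw one inter-script delay from a normal distribution cut to [low, high].

    Centring the mean in the range and using sd = (high - low) / 4 are
    assumptions for illustration; the article does not give these values.
    Draws outside the range are rejected and redrawn.
    """
    mean = (low + high) / 2
    if sd is None:
        sd = (high - low) / 4
    while True:
        delay = rng.gauss(mean, sd)
        if low <= delay <= high:
            return delay


rng = random.Random(2004)  # fixed seed so runs are repeatable
delays = [normal_delay_ms(rng) for _ in range(5)]
print([round(d) for d in delays])
```

With the range spanning two standard deviations either side of the mean, only about 5% of draws are rejected, so the loop rarely repeats. Note also that the five RealAudio clients at 300kbps each add up to only 1.5Mbps combined; the other 27 clients were unpaced.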

The resulting test was intended to still represent a very heavily loaded AP, but using traffic more representative of what might be found in actual use.

Figure 13: STA to AP Test Duration – 32 STA Mixed Application test

Figure 13 shows that all APs except the Linksys WAP54G managed to survive the test for at least some time. And, once again, the Buffalo Tech WLA-G54C and Gigabyte GN-A17GU were the only products to complete all four hours.

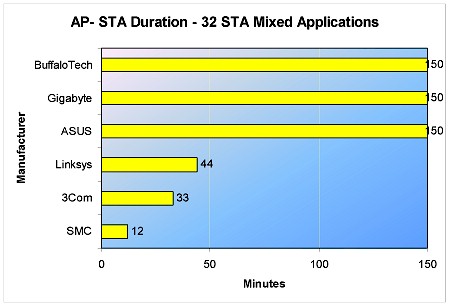

I also ran this test in the AP to STA direction; the results are shown in Figure 14. With my deadline looming, however, I had to shorten these runs to 2.5 hours (150 minutes) each.

Figure 14: AP to STA Test Duration – 32 STA Mixed Application test

The results show that all the APs completed the test, with both the Linksys WAP54G and ASUS WL300g moving up in the relative standings.

(excerpted from the IxChariot Messages and Application Scripts Guide)

POP3, Receive E-mail

SMTP, Send E-mail

These scripts emulate typical e-mail transfers. The default size of an e-mail message is 1,000 Bytes, with an additional 20-Byte header.

FTP Get

FTP Put

These scripts emulate sending a file from Endpoint 2 to Endpoint 1, using TCP/IP’s FTP application. The default file size is 100,000 Bytes.

There are three sections in this script, each with its own connection. The first section emulates a logon by Endpoint 1 to Endpoint 2. The second, timed section emulates Endpoint 1’s request for, and the transfer of, a 100,000-Byte file. The third section emulates a user logging off.

HTTP GIF Transfer

This script emulates the transfer of graphics files from an HTTP server. The default size of a graphics file is 10,000 Bytes.

RealAudio audio-video Stream Smart

Realmed emulates a RealNetworks server streaming a combined audio and video file over a fast Ethernet link. The file was encoded with Stream Smart technology, using the G2 version of the encoder, and played with the G2 version of the server.

Summary and Conclusions

The main objective of this article was to see how a sampling of both consumer and enterprise-grade 802.11g access points perform under heavy traffic loads. I have to admit that I was operating in uncharted territory, since manufacturers provide no guidance – other than the maximum number of STAs – regarding the loads their APs are designed to handle.

One could argue that the loads I subjected the APs to far exceed what they would experience under normal use, especially in a properly designed WLAN. But, in my mind, how products behave under stress is indicative of the robustness of the underlying design.

Given the unknowns involved, I want to thank 3Com, ASUS, Buffalo Technology, Gigabyte, Linksys and SMC for agreeing to participate in this review. I also want to thank CMC for making this test possible at all by the loan of their Emulation Engine. It’s an interesting piece of gear that makes what would otherwise be a daunting task easy.

All that being said, the most surprising result was how relatively poorly the most expensive “enterprise-grade” APs – the 3Com 3CRWE725075A and SMC2552W-G “twins” – fared under heavy loads. I wasn’t able to determine the specific cause of their poorer performance, but at least under the test conditions I used, they were consistently beaten by the less expensive consumer-grade products.

The other observation I feel safe in making is that only the Broadcom-based Buffalo Tech WLA-G54C and Atheros-based Gigabyte GN-A17GU completed all the 32 STA load tests. I’m not sure why the diminutive WLA-G54C bested the other Broadcom-based designs, but its robustness was consistent throughout the testing. I’m guessing that the Gigabyte’s Renesas processor and newer Atheros MAC / baseband chip made the difference over the other Atheros-based products.

There are obviously many factors that need to be considered when choosing an access point and I wouldn’t choose a product solely on the basis of these tests. But I know I learned something by this exercise and hope that you have, too. Let me know if you’d like to see more of this type of test.