Introduction


NOTE: This article describes the Wi-Fi Mesh test procedure for products tested after July 1, 2021. The previous Revision 1 process can be found here.

Updated 7/30/21: Changed 10GbE NIC make/model.

It’s been almost four years since we tested mesh Wi-Fi systems with our Mesh Revision 1 process. Since then, Wi-Fi 6 has come to mesh systems, so our test system must change too.

The Rev 2 process brings a move to using multiple clients for some tests and adds latency testing for both wired and wireless benchmarks.

Router Benchmarks

The Rev 2 wired routing performance process is the same as the Revision 11 Wi-Fi Router process and measures both throughput and latency under load. Two machines are used for the test: one connected to the WAN port of the device under test (DUT), the other to one of its LAN ports. The WAN-side machine is a Dell Optiplex 9010 Small Form Factor with a quad-core Core i5-3570 CPU @ 3.4 GHz and 16 GB of RAM, running Ubuntu 18.04 LTS. The LAN-side machine is a Dell Optiplex 790 Small Form Factor with a quad-core Core i5-2400 @ 3.1 GHz, also with 16 GB of RAM and running Ubuntu 18.04 LTS. Both machines connect to the DUT via a TP-Link TX401 10 GbE PCIe network card (Aquantia-based) that supports 1/2.5/5/10 Gigabit speeds.

The WAN-side server is connected to the DUT WAN port via a QNAP QSW-M408-4C 10 GbE managed switch. The switch also provides an internet connection, so products that require a constant internet connection can be tested. The switch's port management feature lets us match the speed of the switch port to the DUT's WAN port speed to prevent frame fragmentation.

The test uses the netperfrunner.sh script from OpenWrtScripts. Like the betterspeedtest.sh script used in the Rev 10 process, netperfrunner uses netperf to generate TCP/IP traffic while simultaneously running a ping test from LAN to WAN. The main difference between the two is that netperfrunner runs downlink and uplink traffic at the same time, so only one latency score is generated. I'm using 100 traffic streams in each direction instead of netperfrunner's default of 4. The test runs for 5 minutes and produces two throughput and two latency benchmarks:

- WAN to LAN Throughput – TCP

- LAN to WAN Throughput – TCP

- Latency Score – Average

- Latency Score – 90th percentile

The latency benchmark values are published as Latency Score = (1 / measured delay in ms from the netperfrunner.sh output) x 1000, with higher values being better. This inverts and scales latency values so they can be ranked properly, since the ranker always sorts values from high to low. To convert a score back to milliseconds, calculate 1 / (score / 1000).
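The score conversion described above can be sketched in a few lines of Python. This is an illustrative sketch, not the Charts database code; the router names and delay values are hypothetical. It shows why the inversion matters: sorting scores from high to low puts the lowest delay first.

```python
def latency_to_score(delay_ms: float) -> float:
    """Latency Score = (1 / delay in ms) x 1000; higher is better."""
    if delay_ms <= 0:
        raise ValueError("delay must be a positive number of milliseconds")
    return 1000.0 / delay_ms  # same as (1 / delay_ms) * 1000


def score_to_latency(score: float) -> float:
    """Convert a published score back to milliseconds: 1 / (score / 1000)."""
    if score <= 0:
        raise ValueError("score must be positive")
    return 1.0 / (score / 1000.0)


# Hypothetical delays. Lower delay -> higher score, so a high-to-low sort
# ranks the best latency first.
delays_ms = {"router A": 18.0, "router B": 42.0, "router C": 25.0}
ranked = sorted(delays_ms, key=lambda name: latency_to_score(delays_ms[name]), reverse=True)
for name in ranked:
    score = latency_to_score(delays_ms[name])
    print(f"{name}: score {score:.1f} -> {score_to_latency(score):.1f} ms")
```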

The 90th percentile measurement is used instead of maximum to eliminate outliers. It means 90% of the measured latency values are less than or equal to this number.
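One way to compute a percentile that matches the definition above ("90% of the measured values are less than or equal to this number") is the nearest-rank method. The sketch below assumes that method; the actual test tooling may use a different (for example, interpolating) percentile definition, and the ping samples are hypothetical. A single 95 ms outlier inflates the maximum and the average but leaves the 90th percentile at 14.0 ms.

```python
import math
from statistics import mean


def percentile_nearest_rank(samples, pct):
    """Smallest sample such that at least pct% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical ping results (ms) with one outlier.
pings_ms = [12.1, 13.4, 11.8, 14.0, 12.9, 95.0, 13.2, 12.5, 13.8, 12.2]
p90 = percentile_nearest_rank(pings_ms, 90)
print(f"average {mean(pings_ms):.1f} ms, 90th percentile {p90:.1f} ms, max {max(pings_ms):.1f} ms")
```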

Wi-Fi Benchmarks

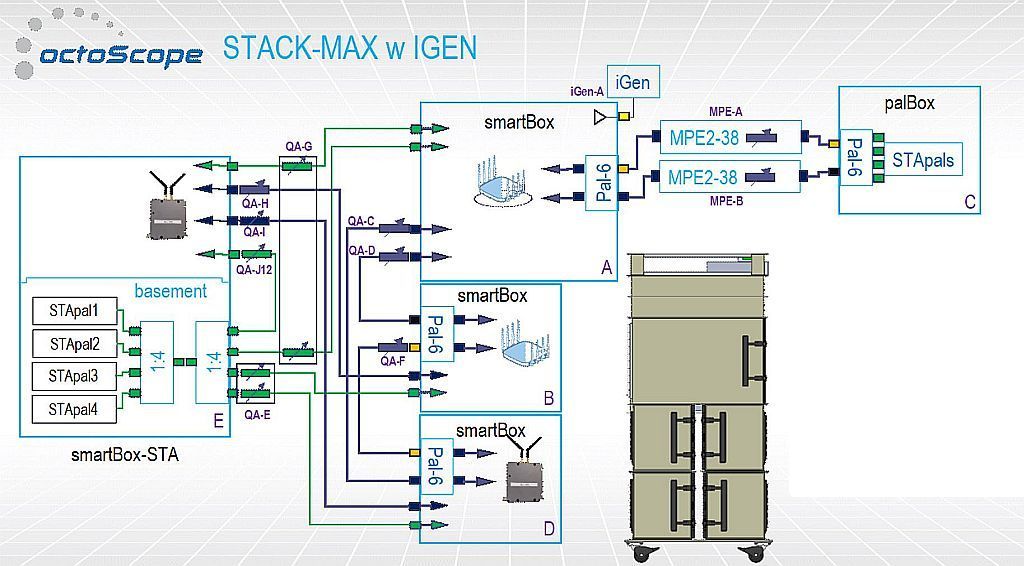

octoScope has generously loaned its top-of-the-line STACK-MAX test system, which is used for the Revision 2 Mesh process. The new process brings a move to using multiple clients for some tests. The benchmarks use up to three octoScope STApal devices. The STApal is based on the Intel AX200, a Wi-Fi 6 M.2 format adapter that supports both OFDMA and AX MU-MIMO. The STACK-MAX has 20 of these guys, enough to give any router or Wi-Fi system a run for its money.

Testing is controlled by Python scripts to ensure consistent test execution.

NOTE: Only the 6 GHz band tests use a single octoScope Linux STApal 6E, based on the Intel AX210. All other tests use the AX200-based STApals.

Revision 11 platform – octoScope STACK-MAX test system

Refer to the system block diagram below for the following Wi-Fi benchmark descriptions.

octoScope STACK-MAX block diagram

Our standard STA will now be configured as a two-stream Wi-Fi 6 device, using WPA3 security if the DUT supports it, WPA2-AES if it does not.

The device under test (DUT) is configured as follows. Note that not every DUT supports all of the features listed.

- 2.4 GHz band: Channel 6, 40 MHz channel bandwidth

- 5 GHz band: Channel 36, 80 MHz channel bandwidth

- 6 GHz band: Channel 37, 160 MHz channel bandwidth

- Standards-based (Wi-Fi 5/6, aka "explicit") beamforming and MU-MIMO features enabled

- "Universal" / "implicit" beamforming: left at manufacturer settings

- Airtime Fairness: left at manufacturer settings

- All supported Wi-Fi 6 features enabled, including:

  - 2.4 and 5 GHz MCS rates

  - OFDMA DL (downlink)

  - OFDMA UL (uplink)

  - AX MU-MIMO DL

  - AX MU-MIMO UL

A big change from all our previous wireless benchmarks is that all wireless tests now run through the router firewall, i.e. the traffic generator is attached to the DUT WAN port. The main reasons for this change are:

- Today’s routers are capable of at least 1 Gbps routing throughput. If they’re not, they should be.

- Two-stream Wi-Fi 6/6E STA throughput can easily exceed 1 Gbps when 160 MHz channel bandwidth is used.

- Total throughput for two 5 GHz radios on tri-radio Wi-Fi 6 routers, even at 80 MHz channel bandwidth, can easily exceed 1 Gbps.

- 160 MHz bandwidth is our standard for 6 GHz testing.

- So a port faster than 1 Gbps is needed to connect the traffic generator so that bandwidth is not limited.

- Some routers have a port faster than 1 Gbps only for WAN.

- So all testing must be done with the traffic generator connected to the DUT WAN port in order not to limit Wi-Fi throughput.

If a product has a "multi-Gig" 2.5, 5 or 10 GbE Ethernet port, it will be configured to act as a WAN port. The WAN-side server described above in the Router Benchmarks is used as the traffic generator. It runs octoScope’s multiperf endpoint, which is based on iperf3. The traffic generator connects to the DUT WAN port via the QNAP QSW-M408-4C 10 GbE managed switch. Switch port management is used to match the speed of the switch’s port to the DUT’s WAN port speed to prevent frame fragmentation.

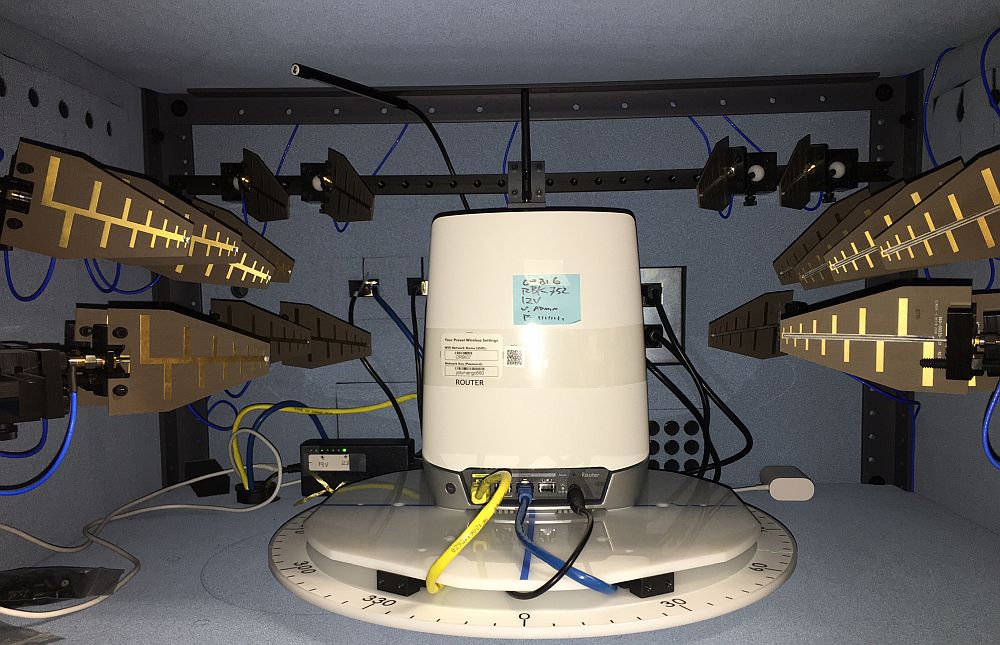

The "root" node DUT (connected to the internet) is centered on the upper test chamber turntable in both X and Y axes. If the DUT has external antennas, they are centered on the turntable. If the antennas are internal, the router body is centered. The initial orientation (0°) has the DUT front facing the rear chamber antennas.

NETGEAR Orbi WiFi 6 in Test Chamber

"Leaf" nodes go into the STACK MAX "B" and "D" boxes. These 18" boxes are a pretty tight fit for larger mesh nodes, but we make it work.

NETGEAR Orbi WiFi 6 in Box B Test Chamber

Note: "Downlink" means data flows from WAN, through the Device Under Test (DUT) to client device. "Uplink" reverses this flow.

Path attenuation between mesh nodes is set to provide a moderate-strength link, with the same attenuation used for all products tested.

Throughput vs. Attenuation Test

This test measures how throughput varies as signal levels are reduced. It is run only on the root node.

The throughput vs. attenuation test uses a Linux STApal for 2.4 and 5 GHz and a Linux STApal 6E for 6 GHz. The STA runs five simultaneous unlimited TCP/IP traffic pairs. STAs are configured to support 20 MHz channel bandwidth @ 2.4 GHz, 80 MHz @ 5 GHz and 160 MHz @ 6 GHz.

The test sequence is:

1. Move the turntable to the "0 degree" starting position.

2. Set the attenuators to 0 dB.

3. Associate the STA.

4. Run iperf3 and ping traffic for 35 seconds while rotating the DUT counter-clockwise 360° @ 2 RPM. The first 5 seconds of each test are excluded as settling time.

5. Increase path attenuation by 3 dB and start the next test, rotating the DUT 360° in the opposite direction.

6. Repeat steps 4 and 5 until the attenuation limit is reached.
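The attenuation and rotation schedule in the sequence above can be sketched as a simple plan generator. This is a hypothetical illustration, not octoScope's control script: `RvrStep`, `plan_rvr_steps` and the `limit_db` value are invented for the example, and in practice the run also ends when the STA can no longer connect. The sketch also checks the timing: one full turn at 2 RPM takes 30 s, the same length as the window that remains after the 5 s settling time is dropped from a 35 s run.

```python
from dataclasses import dataclass

ROTATION_RPM = 2.0   # turntable speed during each run
RUN_SECONDS = 35     # iperf3 + ping duration per attenuation step
SETTLE_SECONDS = 5   # excluded from each run's result
STEP_DB = 3          # attenuation increment between runs


@dataclass
class RvrStep:
    attenuation_db: int
    rotation: str  # "ccw" (counter-clockwise) or "cw" (clockwise)


def plan_rvr_steps(limit_db: int, step_db: int = STEP_DB) -> list:
    """Hypothetical schedule: 0 dB counter-clockwise first, then +step_db per run,
    reversing the rotation direction each time, up to and including limit_db."""
    return [
        RvrStep(atten, "ccw" if i % 2 == 0 else "cw")
        for i, atten in enumerate(range(0, limit_db + 1, step_db))
    ]


# A full 360 degree turn at 2 RPM vs. the part of each run that is kept.
seconds_per_turn = 60.0 / ROTATION_RPM
measured_window = RUN_SECONDS - SETTLE_SECONDS
print(f"one turn: {seconds_per_turn:.0f} s, measured window: {measured_window} s")

for step in plan_rvr_steps(limit_db=9):  # hypothetical limit, for illustration only
    print(step)
```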

The above sequence is repeated downlink and uplink in each band to yield throughput for each STA. The test sequence is typically run once. The test will be repeated if unusual results are found and the best of the runs will be entered into the Charts database.

NOTE: Moving the DUT through an entire 360° while the iperf3 test is running removes orientation bias from the test and eliminates the need to determine the "best" run out of multiple fixed-position tests. Because throughput typically varies during the test run, the averaged result can be lower than a fixed position test. Rotation at lower signal levels, particularly in 5 GHz, may cause connection to be dropped sooner than if the DUT were stationary.

A word on "Range"

Although there is no specific benchmark for range, it's an important factor in determining ranking. As has been SNB's past practice, range is a throughput value taken from the throughput vs. attenuation test for each band and direction. For Revision 11, the range values are 57 dB for 2.4 GHz, 45 dB for 5 GHz and 54 dB for 6 GHz. These values are intended to be pretty far out on the RvR (rate vs. range) curve while still representing usable throughput. They do not represent the point of disconnection.
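Because attenuation steps start at 0 dB and increase in 3 dB increments, each of the three range points (57, 45 and 54 dB) lands exactly on a measured step. Extracting the range value is then a lookup into the RvR results. The sketch below assumes the curve is stored as a mapping from attenuation to average throughput; that data structure and the curve values are hypothetical. A missing entry means the link had already dropped.

```python
from typing import Dict, Optional

# Range points (attenuation in dB) quoted for each band.
RANGE_POINT_DB = {"2.4 GHz": 57, "5 GHz": 45, "6 GHz": 54}


def range_value(rvr_curve: Dict[int, float], band: str) -> Optional[float]:
    """Throughput (Mbps) at the band's range point, or None if the STA
    had already disconnected before that attenuation was reached."""
    return rvr_curve.get(RANGE_POINT_DB[band])


# Every range point is a multiple of the 3 dB step, so it coincides with a measured step.
assert all(db % 3 == 0 for db in RANGE_POINT_DB.values())

# Hypothetical tail of a 5 GHz downlink curve: attenuation (dB) -> average Mbps.
rvr_5ghz_downlink = {39: 420.0, 42: 330.0, 45: 210.0, 48: 95.0}
print(range_value(rvr_5ghz_downlink, "5 GHz"))
```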

Multiband Test

This benchmark measures the throughput and latency of two STApals configured as two-stream Wi-Fi 6 devices, with simultaneous traffic on 2.4 and 5 GHz radios. For mesh systems, this test is run on each node, so it provides insight into backhaul bandwidth and latency through the mesh.

The test is run at 40 MHz channel bandwidth for 2.4 GHz, and 80 MHz channel bandwidth for 5 GHz. It is not run on 6 GHz at this time. These conditions should produce maximum link rates of 574 Mbps @ 2.4 GHz and 1201 Mbps @ 5 GHz with Wi-Fi 6 DUTs.
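The 574 and 1201 Mbps figures follow from standard 802.11ax numerology: data subcarriers x bits per subcarrier x coding rate x spatial streams, divided by the OFDM symbol time. The sketch below assumes HE-MCS 11 (1024-QAM, rate 5/6) with a 0.8 µs guard interval. These are maximum PHY link rates, not TCP throughput. The same formula gives about 2402 Mbps for a two-stream STA at 160 MHz, which is why 6 GHz testing can exceed a 1 Gbps port.

```python
# 802.11ax (HE) data subcarriers per channel width, in MHz.
HE_DATA_SUBCARRIERS = {20: 234, 40: 468, 80: 980, 160: 1960}
HE_SYMBOL_US = 12.8 + 0.8  # 12.8 us OFDM symbol plus 0.8 us guard interval


def he_link_rate_mbps(width_mhz: int, streams: int = 2,
                      bits_per_subcarrier: int = 10, coding_rate: float = 5 / 6) -> float:
    """Maximum PHY link rate; defaults are HE-MCS 11 (1024-QAM, rate 5/6)."""
    bits_per_symbol = HE_DATA_SUBCARRIERS[width_mhz] * bits_per_subcarrier * coding_rate * streams
    return bits_per_symbol / HE_SYMBOL_US  # bits per microsecond == Mbps


for width in (40, 80, 160):
    print(f"{width} MHz, 2 streams: {he_link_rate_mbps(width):.0f} Mbps")
```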

Two streams of traffic are run to each STA:

- iperf3, unlimited TCP/IP, 5 parallel connections.

- ping (200 ms interval). Ping direction is from the STA through the DUT to the WAN-side traffic generator.

The test sequence is:

- Set the attenuators to 0 dB.

- Associate the STAs to each DUT radio.

- Run traffic for 300 seconds (5 minutes).

- Discard first 5 seconds of iperf3 data, calculate average of remaining data.

TCP/IP traffic is run downlink; ping traffic is run uplink.
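The "discard the first 5 seconds, then average" step can be sketched as below. This assumes iperf3's default one-second reporting interval and that samples start at t = 0; the sample values are hypothetical. It also shows that a 300-second run with a 200 ms ping interval yields 1500 latency samples per STA for the percentile calculation.

```python
from statistics import mean

TEST_SECONDS = 300
SETTLE_SECONDS = 5
PING_INTERVAL_MS = 200

# Pings sent per STA over the full run at a 200 ms interval.
PING_COUNT = TEST_SECONDS * 1000 // PING_INTERVAL_MS


def settled_average(samples_mbps, interval_s: float = 1.0,
                    settle_s: float = SETTLE_SECONDS) -> float:
    """Average iperf3 throughput after discarding samples from the settling period.
    Assumes one sample per interval_s seconds, starting at t = 0."""
    skip = round(settle_s / interval_s)
    kept = samples_mbps[skip:]
    if not kept:
        raise ValueError("run is shorter than the settling time")
    return mean(kept)


# Hypothetical per-second downlink samples: a ramp-up, then a steady 750 Mbps.
samples = [50.0, 300.0, 600.0, 700.0, 720.0] + [750.0] * (TEST_SECONDS - 5)
print(f"{PING_COUNT} pings, settled average {settled_average(samples):.1f} Mbps")
```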

The following benchmarks are produced by this test for each band tested:

- Multiband Throughput

- Multiband Latency Score (90th percentile)

Multiband Latency Score = (1 / Measured delay in ms) x 1000, with higher values being better. This inverts and scales latency values so that they may be properly ranked, since the ranker always sorts values from high to low. To convert back to milliseconds, calculate 1 / (score / 1000).

The default chart view shows the average of values recorded for each band tested. Values for each band can also be viewed and compared.

Mesh System Capacity

The test connects a single STA to each mesh node and runs simultaneous TCP/IP traffic to all connected STAs. It measures how well overall system throughput is managed in a simple loading scenario. It is similar to the Multiband test above, but connects one 5 GHz, two-stream AX STA per mesh node instead of one STA per radio (band) on a single mesh node.

Traffic is limited to 250 Mbps per STA. Since two-stream AX STAs can produce around 900 Mbps of throughput, this load does not penalize three-node systems. Two-node, tri-radio systems should be able to handle this test with no problem and produce 500 Mbps of total throughput. Three-node systems could be challenged, depending on the backhaul configuration and bandwidth.
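The load arithmetic above can be sketched as follows: one 250 Mbps STA per node offers 500 Mbps in total on a two-node system and 750 Mbps on a three-node system. The `delivered_fraction` helper is a hypothetical way to express how much of that offered load a system actually carried; it is not one of the published benchmarks, and the measured values are invented for illustration.

```python
PER_STA_LIMIT_MBPS = 250  # iperf3 rate limit for the one 5 GHz STA on each node


def offered_load_mbps(nodes: int) -> int:
    """Total traffic offered when one rate-limited STA is attached to each node."""
    return nodes * PER_STA_LIMIT_MBPS


def delivered_fraction(measured_per_sta_mbps) -> float:
    """Hypothetical helper: share of the offered load actually delivered
    (1.0 means every STA received its full 250 Mbps)."""
    return sum(measured_per_sta_mbps) / offered_load_mbps(len(measured_per_sta_mbps))


for nodes in (2, 3):
    print(f"{nodes} nodes: {offered_load_mbps(nodes)} Mbps offered")

# Hypothetical three-node result where one node's STA is held back by its backhaul.
print(f"delivered: {delivered_fraction([250.0, 248.0, 180.0]):.0%}")
```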

Two streams of traffic are run to each STA:

- iperf3, 250 Mbps TCP/IP, 1 connection.

- ping (200 ms interval). Ping direction is from the STA through the DUT to the WAN-side traffic generator.

The test sequence is:

- Set the attenuators to 0 dB.

- Associate the STAs to each mesh node.

- Run traffic for 300 seconds (5 minutes).

- Discard first 5 seconds of iperf3 data, calculate average of remaining data.

TCP/IP traffic is run downlink; ping traffic is run uplink.

The following benchmarks are produced by this test for each mesh node tested:

- Mesh Capacity Throughput

- Mesh Capacity Score (90th percentile)

The default chart view shows the average of values recorded for each mesh node tested. Values for each node can also be viewed and compared.