Introduction

In our last installment, I found that the combination of Windows Home Server as a NAS and Vista SP1 as a client could achieve read performance of almost 80 MB/s. But that impressive speed was reached only with file sizes smaller than the amount of RAM in both the client and NAS systems. Once the caches were exceeded, performance fell to slightly under 60 MB/s, limited by the speed of a single SATA drive, which is WHS’ key weakness.

I then made a detour into the low end to see how much performance could be squeezed from Intel’s Atom mini-ITX board. I used both FreeNAS and Ubuntu Server as OSes and found the latter able to deliver read speeds just shy of 60 MB/s with a RAID 0 array.

So I had high hopes of achieving significantly better performance when I installed the Ubuntu Server, mdadm and Webmin combination on the former WHS test bed. But try as I might, despite days of testing, I’m still far from the 100 MB/s read / write goal.

The problem isn’t due to any limitation on the machine running iozone. It’s a Core 2 Duo machine with a PCIe gigabit Ethernet connection that has been tested to read and write data at over 100 MB/s. And iozone doesn’t access the system hard drive during testing, so that’s not what is holding me back.

So I asked iozone’s creator and filesystem expert Don Capps for any light he could shed on the subject. His response was illuminating, as usual:

RAID 0 can improve performance under some circumstances, specifically, sequential reads, with an operating system that does plenty of read-ahead. For this case, the read-ahead permits drives to overlap their seeks and pre-stage the data into the operating system’s cache.

RAID 0 can also improve sequential write performance, with an application and an operating system that permits plenty of write-behind. In this case, the application is doing simple sequential writes and the operating system is completing the writes when the data is in its cache. The operating system can then issue multiple writes to the array and again overlap the seeks. The operating system may also combine small writes into larger writes.

The controller and disks may also support command tag queuing. This would permit multiple requests to be issued to the controller or disks and then the controller, or disks, could re-order the operations such that it reduces seek latencies.

These mechanisms can also occur in other RAID levels, but may be hampered by slowness in the XOR engine, controller or bus limitations, stripe wrap, parity disk nodalization, command tag queue depth, array cache algorithms or several dozen other factors…

Many of these clever optimizations may also have less effect as a function of file size. For example, the write-behind effect might decrease as the file size exceeds the size of RAM in the operating system. Once this happens, the operating system’s cache is full, and the array cannot drain the cache as fast as the writer is trying to fill it.

In this case, performance deteriorates to a one-in / one-out series, or may deteriorate to a "sawtooth" function, if the operating system detects full cache and starts flushing in multi-block chunks. This permits the seek overlap to re-engage, but the full condition rapidly returns (over and over) as the writer can remain faster than the drives can receive the data.

Read-ahead effects can also fade. If one application is performing simple sequential reads, then read-ahead can overlap the disk seeks. But as more applications come online, the array can become saturated with read-ahead requests, causing high seek activity.

Finally, as the number of requesting applications rises, the aggregate data set can exceed the operating system’s cache. When this happens, read-aheads complete into the operating system’s cache. But before the application can use the read-ahead blocks, the cache might be reused by another application. In this case, the read-ahead performed by the disks was never used by the application, but it did create extra work for the disks. Once this threshold has been reached, the aggregate throughput for the array can be lower than for a single disk!

So it’s important to understand that application workload can have a very significant impact on RAID performance. It’s easy to experience a performance win in some circumstances. But it’s also not so easy to prevent side effects or to keep the performance gain from fading away.
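To make the striping behind these read-ahead effects concrete, here is a minimal sketch that maps a logical byte offset on a RAID 0 array to a disk and an on-disk offset. It is a simplified model, not mdadm's implementation: it assumes equal-size disks, a fixed 64K chunk (matching the baseline configuration used in the tests below) and no metadata area. The function names are hypothetical.

```python
# Simplified RAID 0 address mapping, for illustration only.
# Assumes equal-size disks, a fixed chunk size and no on-disk metadata;
# real md arrays handle details that are omitted here.

CHUNK_SIZE = 64 * 1024  # bytes per chunk (assumed, matches a 64K chunk)


def raid0_locate(offset: int, num_disks: int, chunk_size: int = CHUNK_SIZE) -> tuple[int, int]:
    """Map a logical byte offset on the array to (disk index, byte offset on that disk)."""
    chunk_index, within_chunk = divmod(offset, chunk_size)
    disk = chunk_index % num_disks       # consecutive chunks rotate across the disks
    stripe = chunk_index // num_disks    # which row of chunks (stripe) the offset falls in
    return disk, stripe * chunk_size + within_chunk


def disks_touched(offset: int, length: int, num_disks: int, chunk_size: int = CHUNK_SIZE) -> set[int]:
    """Return the set of disks that a sequential request of `length` bytes would hit."""
    first_chunk = offset // chunk_size
    last_chunk = (offset + length - 1) // chunk_size
    return {c % num_disks for c in range(first_chunk, last_chunk + 1)}


if __name__ == "__main__":
    # A small 4K read stays on a single disk...
    print(disks_touched(0, 4 * 1024, num_disks=2))    # {0}
    # ...but a 256K read-ahead spans both disks, so their seeks can overlap.
    print(disks_touched(0, 256 * 1024, num_disks=2))  # {0, 1}
    print(raid0_locate(200 * 1024, num_disks=2))      # (1, 73728)
```

The point of the model is the second call: only requests larger than one chunk, such as operating-system read-ahead, keep both drives busy at once.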

Don also provided some insight into how other RAID modes can affect filesystem performance.

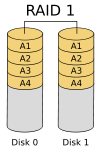

RAID 1

RAID 1 is a "mirrored" set of disks, with no parity. A reader can go to disk 1 or disk 2 and it is up to the RAID controller to load balance the traffic. A writer must place the same data on both disks.

Write performance is the same or lower than that of a single drive. However, read performance might be improved, depending on how well the controller overlaps seeks.
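A toy model shows why the mirror helps reads but not writes. This is a sketch under a stated assumption: reads are handed out round-robin, which is simply the easiest policy to show. Real controllers and Linux md may choose a disk by head position or queue depth instead. The `Mirror` class is hypothetical.

```python
# Toy model of a RAID 1 mirror. The round-robin read policy is an
# assumption for illustration; real controllers may balance reads
# using head position or pending-request counts instead.


class Mirror:
    def __init__(self, num_disks: int = 2) -> None:
        # Each "disk" is a mapping of block number -> data.
        self.disks: list[dict[int, bytes]] = [{} for _ in range(num_disks)]
        self.next_reader = 0  # round-robin cursor for reads

    def write(self, block: int, data: bytes) -> None:
        # Every write must land on every member, so a write can be
        # no faster than the slowest disk in the set.
        for disk in self.disks:
            disk[block] = data

    def read(self, block: int) -> tuple[int, bytes]:
        # Any copy will do, so successive reads can be spread across disks.
        disk_index = self.next_reader
        self.next_reader = (self.next_reader + 1) % len(self.disks)
        return disk_index, self.disks[disk_index][block]


m = Mirror()
m.write(7, b"hello")
print(m.read(7))  # (0, b'hello')
print(m.read(7))  # (1, b'hello')
```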

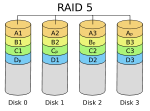

RAID 5

In RAID 5, a reader goes to disk 1, then disk 2. A writer goes to disk 1, then disk 2, and parity is written to disk 3. Read and write performance can be lower than that of a single disk.

Performance can be limited by disk 1, disk 2 or disk 3. Parity is generated by doing an XOR of block 1 and block 2. This permits the reconstruction of either block 1 or block 2 by doing an XOR with the remaining good disk and the parity disk.

However, the XOR operation takes time and CPU resources. It can easily become a bottleneck unless there is a hardware assist for the XOR. Performance is also greatly improved by having multiple parity engines and NVRAM to permit asynchronous writes of the data blocks and the parity blocks.
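The parity arithmetic described above fits in a few lines. This minimal sketch follows the three-disk example (two data blocks, one parity block). It assumes equal-length blocks and ignores the fact that real RAID 5 rotates the parity block across all members. The helper names are hypothetical.

```python
# XOR parity for a three-disk stripe: two data blocks plus one parity block.
# Real RAID 5 rotates parity across all disks; that detail is omitted here.


def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(block1: bytes, block2: bytes) -> bytes:
    # Parity = block 1 XOR block 2 (the block written to disk 3).
    return xor_blocks(block1, block2)


def rebuild(surviving: bytes, parity: bytes) -> bytes:
    # Because x ^ y ^ y == x, XORing the surviving data block with
    # the parity block recovers the missing data block.
    return xor_blocks(surviving, parity)


block1 = b"NAS data"
block2 = b"12345678"
parity = make_parity(block1, block2)
print(rebuild(block2, parity) == block1)  # True: disk 1 lost, rebuilt from disks 2 and 3
print(rebuild(block1, parity) == block2)  # True: disk 2 lost, rebuilt from disks 1 and 3
```

The per-byte loop in `xor_blocks` is exactly the kind of work a hardware XOR engine offloads from the CPU. Small writes add further cost: updating one data block generally means reading the old data and old parity and computing new parity = old parity XOR old data XOR new data.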

RAID 10

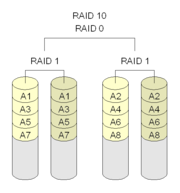

RAID 10 (or RAID 1+0) is a nested-RAID array that combines striping and mirroring. It can provide improved data integrity (via mirroring), and improved read performance. But it requires additional disk space (four drives, minimum) and more sophistication in the RAID controller.

A reader can read block 1 from either disk 1 or disk 2, which permits load balancing. The striping permits more than 2 disks to participate in the mirroring.
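A short sketch of where each chunk lives in a simple RAID 1+0 layout shows both effects. It assumes disks are grouped into mirrored pairs of adjacent disks (0+1, 2+3, ...) with chunks striped across the pairs. That arrangement is an illustrative assumption; Linux md's raid10 driver supports other layouts as well. The function name is hypothetical.

```python
# Simple RAID 1+0 layout: mirror within pairs of adjacent disks,
# stripe chunks across the pairs. The pairing scheme is assumed.


def raid10_copies(chunk_index: int, num_disks: int = 4) -> tuple[int, int]:
    """Return the two disks that hold a given logical chunk."""
    if num_disks < 4 or num_disks % 2:
        raise ValueError("this sketch needs an even number of disks, at least 4")
    num_pairs = num_disks // 2
    pair = chunk_index % num_pairs   # striping across the mirrored pairs
    return 2 * pair, 2 * pair + 1    # mirroring within the pair


for chunk in range(4):
    # Chunks alternate between pair (0, 1) and pair (2, 3); a reader
    # may fetch each chunk from either disk of its pair.
    print(chunk, raid10_copies(chunk))
```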

Examples

Let’s look at some actual RAID 0 data to see how all of this translates into real life. Table 1 summarizes the test bed system used. It is the same system used in the Windows Home Server testing, but with only 1 GB of RAM and WD VelociRaptor drives. It was running Ubuntu Server 8.04.1 and using mdadm for RAID.

| Component | Details |
|---|---|
| Case | Foxconn TLM776-CN300C-02 |
| CPU | Intel Core 2 Duo E7200 |
| Motherboard | ASUS P5E-VM DO |
| RAM | 1 GB Corsair XMS2 DDR2 800 |
| Power Supply | ISO-400 |
| Ethernet | Onboard 10/100/1000 Intel 82566DM |
| Hard Drives | Western Digital VelociRaptor WD3000HLFS 300 GB, 3 Gb/s, 16 MB Cache, 10,000 RPM (x2) |
| CPU Cooler | ASUS P5A2-8SB4W 80mm Sleeve CPU Cooler |

Table 1: RAID 0 Test Bed

Figure 1 contains iozone write test results with the following test configurations:

1. RAID 0 XP – Baseline RAID 0 run: 4K block size, 64K chunk, iozone machine running XP SP2
2. RAID 0 XP stride32 chnk64 – Same as #1, but with a stride of 32
3. RAID 0 XP stride64 chnk128 – Same as #2, but with a stride of 64 and a 128K chunk
4. RAID 0 Vista stride32 chnk64 – Same as #2, but with the iozone machine running Vista SP1
5. Single Drive XP – Test with a single ext3-formatted drive
6. RAID 0 XP tweaks – Same as #1, but with all tweaks suggested in this Forum post

Figure 1: RAID 0 Write comparison

As Don predicted, it’s hard to see a difference among the RAID 0 write results. The only run that is drastically different is the one with the "tweaks" that were supposed to enhance performance!

The stride experimentation resulted from this Forum suggestion. According to this HowTo, stride is derived from the chunk and block sizes and is supposed to direct the mkfs command to allocate block and inode bitmaps so that they don’t all end up on the same physical drive. This, in turn, is supposed to improve performance. But it’s hard to see any significant difference in write performance.
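The mke2fs documentation defines stride as the number of filesystem blocks in one RAID chunk, so stride = chunk size / block size. This small helper applies that rule and builds a matching `mkfs.ext3` command line using the real `-b` (block size) and `-E stride=` options. It assumes the 4K block size in the test list refers to the filesystem block size, and the device name `/dev/md0` is a hypothetical example.

```python
# Derive the ext2/ext3 stride from the RAID chunk size and filesystem
# block size (stride = chunk size / block size, per the mke2fs docs).
# The default device name is a hypothetical example.


def ext_stride(chunk_kib: int, block_kib: int) -> int:
    """Number of filesystem blocks per RAID chunk."""
    if chunk_kib % block_kib:
        raise ValueError("chunk size should be a multiple of the block size")
    return chunk_kib // block_kib


def mkfs_command(chunk_kib: int, block_kib: int, device: str = "/dev/md0") -> str:
    """Build an mkfs.ext3 command line with a matching stride setting."""
    stride = ext_stride(chunk_kib, block_kib)
    return f"mkfs.ext3 -b {block_kib * 1024} -E stride={stride} {device}"


print(mkfs_command(64, 4))   # mkfs.ext3 -b 4096 -E stride=16 /dev/md0
print(mkfs_command(128, 4))  # mkfs.ext3 -b 4096 -E stride=32 /dev/md0
```

With a 4K block, the rule gives a stride of 16 for a 64K chunk and 32 for a 128K chunk.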

We do see a performance gain between the single drive and the RAID 0 runs. But using Vista SP1 instead of XP SP2 on the iozone machine doesn’t seem to affect the results much. In all the runs I did, the best non-cached performance was always under 68 MB/s.

Figure 2 shows the read results for the same tests. This time Vista running on the iozone machine provides a noticeable improvement and the "tweaks" run is still the worst performer.

Figure 2: RAID 0 Read comparison

But you have to look pretty closely, and beyond the 1 GB file size (where OS and NAS caching is no longer in effect), to see a difference in the other tests. There is a definite difference between the single drive and all the RAID 0 runs. And while the best RAID 0 run with iozone running on XP is the one with the larger stride and chunk size, it’s not a huge improvement.

It does appear, however, that some of the RAID 0 mechanisms that Don described are working to bump read performance up to 74 MB/s. But that’s only when file sizes were well below the RAM sizes in both the NAS and iozone machine. Once both RAM sizes are exceeded, performance falls to around 53 MB/s.

Closing Thoughts

If the "Fast NAS" series is teaching me anything, it’s that the whole subject of networked file system performance is pretty complex. Hell, just the simple act of copying a file from one machine to another is more complicated than I ever imagined!

I have a new appreciation for the legions of techies who toil away anonymously, trying to improve the performance and reliability of this fundamental building block of computing.

At this point I need to regroup a bit to determine what the next step will be. I have a hardware RAID controller arriving any day now. So, maybe that’s a logical next step. I also might see what a Vista-based "NAS" might do for performance with a Vista client. In the meantime, keep the suggestions and feedback coming in the Forums!