Introduction

Updated 5 April 2018: Zyxel retested

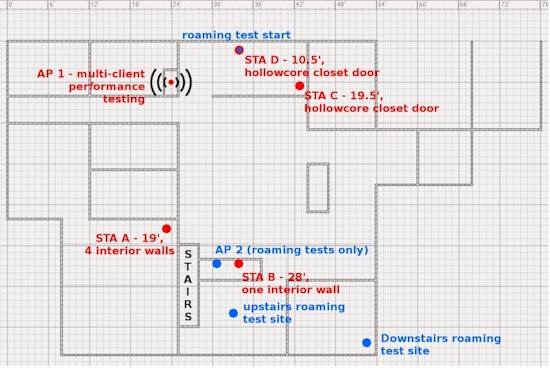

Test floorplan

In our first round of testing, each access point was thoroughly disassembled, dissected, and put through its paces in SmallNetBuilder’s octoScope test chamber. My approach is different: I set up each access point in a real physical environment designed to resemble real-world deployments, and hit it with workloads modeled on real-world protocols. My goal is to expose the strengths and weaknesses of each access point under more realistic conditions.

For performance tests, one AP is set up in the location shown on the floorplan above. Four devices (STAs A, B, C, and D) are then sited as shown, with the distances and obstructions listed.

Station D is intended to have the highest throughput, sitting 10.5′ away with no significant obstructions. Stations B and C are progressively farther away, with progressively less clear shots at the access point; and then there’s Station A. Station A sits 19′, four interior walls, and some miscellaneous cabinetry away from the AP. This is farther than you should deliberately plan to support with any access point in a multi-AP deployment. But despite your best planning, you always end up with a station like this, so we test it.

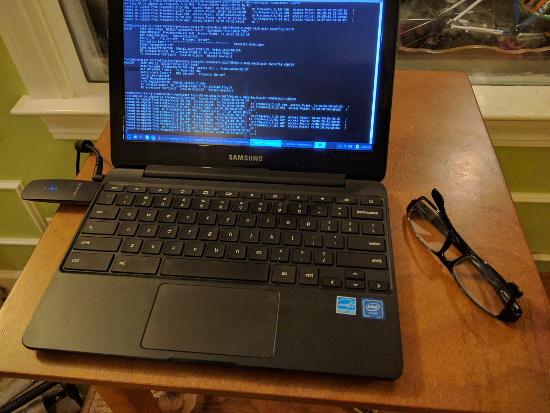

Samsung Chromebook on its test stand at Station D

The stations themselves are four identical Samsung Chromebooks running GalliumOS, each equipped with a built-in Intel Dual Band Wireless-AC 7265 and a Linksys WUSB-6300 external wireless adapter. The WUSB-6300 is my reference adapter and is used for most performance testing, because there’s less variation among the four WUSB-6300s than among the Chromebooks’ internal NICs, and because the WUSB-6300 has exceptional TX performance.

The current Linux driver for the WUSB-6300 does not support 802.11k or v, though, so we shift back to the onboard Intel 7265 for testing roaming and band steering behavior. I have not been able to find definitive information from Intel regarding the technologies supported in its Linux driver. However, Microsoft says Windows 10 supports 802.11k, v, and r, and Intel says the Wireless-AC 7265 (Rev. D) also supports all three roaming assistance standards. Empirically, AP-assisted roaming clearly works on the AC 7265 under Linux, given the dramatic differences in behavior when it is connected to different models of AP.

Performance Tests

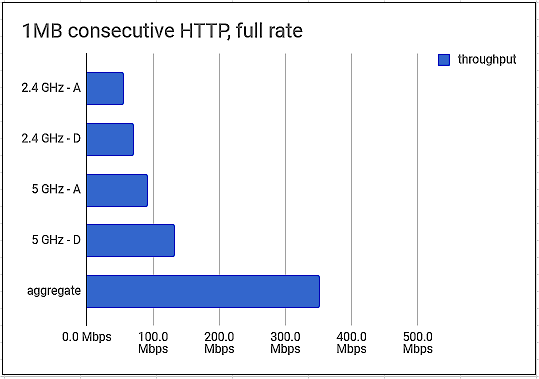

Performance testing is done in two phases, both using netburn to load the stations with HTTP traffic. The single-station phase downloads a 1 MB file repeatedly, with no other traffic on the network. This is done for 2.4 GHz and 5 GHz, with separate test runs using Station D (the nearest and best sited) and Station A (the farthest and worst sited). This single-client testing is roughly similar to an iperf3 run, but it’s a little more heavily affected by the station’s TX performance, because the station must repeatedly issue HTTP requests.
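netburn itself is a Perl tool and its code isn’t reproduced here. As a rough illustration of why this phase depends on the station’s TX side, the Python sketch below repeatedly issues an HTTP GET for one file and reports average goodput; every iteration pays the cost of sending a fresh request. The function names, and any test URL you pass in, are hypothetical.

```python
import time
import urllib.request
from typing import Callable


def fetch_url(url: str) -> int:
    """Download url once and return the number of body bytes received."""
    with urllib.request.urlopen(url, timeout=60) as resp:
        return len(resp.read())


def single_client_mbps(fetch: Callable[[], int], duration_s: float,
                       clock: Callable[[], float] = time.monotonic) -> float:
    """Fetch repeatedly for duration_s seconds and return mean goodput in Mbps."""
    start = clock()
    total_bytes = 0
    while clock() - start < duration_s:
        # Each pass sends a brand-new HTTP request, so the station's TX
        # performance is part of every measurement, unlike a bulk iperf3 stream.
        total_bytes += fetch()
    elapsed = clock() - start
    return total_bytes * 8 / elapsed / 1_000_000
```

For example, `single_client_mbps(lambda: fetch_url("http://192.0.2.10/1mb.bin"), 30)` would repeatedly download a hypothetical 1 MB test file for 30 seconds. The `clock` parameter exists only so the loop can be exercised without a network.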

What is netburn?

netburn is part of a suite of open source tools written in Perl, used for testing network performance by using HTTP to generate network traffic. By using different file sizes and concurrent downloads, netburn can simulate real-world network traffic more realistically than single- or even multi-stream TCP/IP or UDP transfers using tools like IxChariot or iperf.

netburn can measure not only raw throughput, but also how a Wi-Fi system responds under multi-client load, by measuring the time between issuing a set of HTTP requests and the completion of the last of them. This method measures application latency, which in turn reveals weaknesses in how well a wireless router or access point schedules airtime.

If the AP does a poor job of managing airtime, stations will have to wait longer to issue their HTTP requests, which in turn delays request completion. The real-world effect of HTTP delay can range from slow webpage loading to poor video streaming performance. Raw throughput also plays a part, since a faster AP can deliver the same 2 MB total of “page” data in less airtime than a slower one.

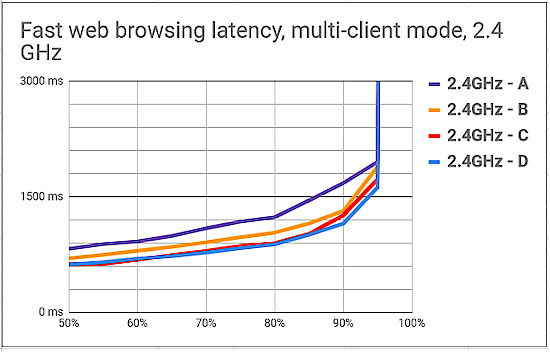

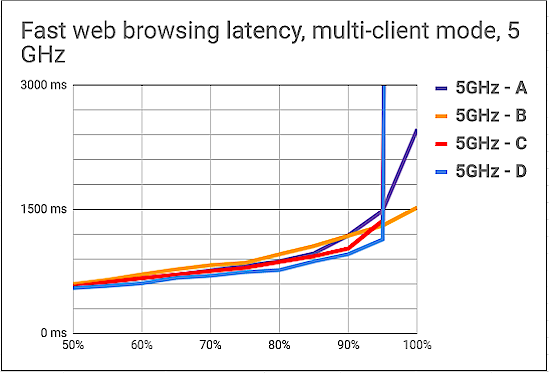

The second phase is multi-client testing, in which all four stations are active simultaneously. The net-hydra controller kicks off a netburn session on each station at the same time. netburn is configured to fetch sixteen 128 KB files in parallel via HTTP, representing a “page load”, and is throttled to 8 Mbps per station overall by sleeping between “page” fetches. Up to 500 ms of random jitter is injected to avoid pathological pattern interactions between stations. The test runs for 5 minutes, after which application latency is plotted and analyzed.
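The Python sketch below approximates one station’s side of this phase under stated assumptions: it is not netburn or net-hydra, the `fetch` callable (which downloads file *i* of a page) is hypothetical, “128 KB” is read as 128 KiB, and timeouts are not handled. Sixteen 128 KiB files make a 2 MiB page, or 16,777,216 bits, so holding a station to 8 Mbps means budgeting about 2.1 seconds per page and sleeping for whatever part of that budget the fetch didn’t use, plus up to 500 ms of random jitter.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

FILES_PER_PAGE = 16           # sixteen files per simulated "page"
FILE_BYTES = 128 * 1024       # assumption: "128 KB" read as 128 KiB
TARGET_BPS = 8_000_000        # per-station throttle of 8 Mbps
MAX_JITTER_S = 0.5            # up to 500 ms of random jitter


def page_load_ms(fetch: Callable[[int], int], pool: ThreadPoolExecutor,
                 clock: Callable[[], float] = time.monotonic) -> float:
    """Start all of a page's fetches in parallel; return ms until the last finishes."""
    start = clock()
    list(pool.map(fetch, range(FILES_PER_PAGE)))  # waits for every fetch
    return (clock() - start) * 1000.0


def throttle_sleep_s(elapsed_s: float, rng: random.Random) -> float:
    """Idle time after a page that keeps the average near TARGET_BPS, plus jitter."""
    page_bits = FILES_PER_PAGE * FILE_BYTES * 8   # 16,777,216 bits per page
    budget_s = page_bits / TARGET_BPS             # about 2.1 s per page
    return max(0.0, budget_s - elapsed_s) + rng.uniform(0.0, MAX_JITTER_S)


def run_station(fetch: Callable[[int], int], duration_s: float = 300.0,
                seed: int = 0) -> List[float]:
    """Simulate one station for duration_s seconds; return per-page latencies in ms."""
    rng = random.Random(seed)
    latencies: List[float] = []
    deadline = time.monotonic() + duration_s
    with ThreadPoolExecutor(max_workers=FILES_PER_PAGE) as pool:
        while time.monotonic() < deadline:
            ms = page_load_ms(fetch, pool)
            latencies.append(ms)
            time.sleep(throttle_sleep_s(ms / 1000.0, rng))
    return latencies
```

Giving each station a different `seed` keeps their jitter patterns independent, which is the point of injecting jitter in the first place.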

I have found that netburn-based multi-client testing is a much more reliable indicator of the actual experience of using a Wi-Fi system than a simpler iperf3 test, or even netburn’s own single-client maximum throughput tests.

Instead of just looking at an arithmetic mean of the results returned by our 5-minute test run, I prefer to look across the entire dataset. (Actually, that’s not quite true: I focus on the worst half of the dataset.) Each individual HTTP fetch operation yields an application latency in milliseconds; for this particular test payload, it’s the time between sixteen separate HTTP GETs for a 128 KB file going out in parallel and the last of the sixteen being delivered.

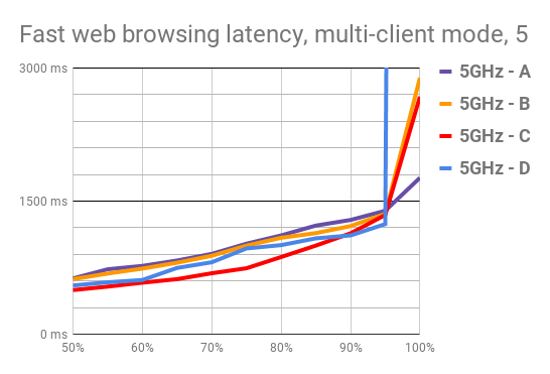

Wi-Fi, at least omnidirectional Wi-Fi, is very much a best-effort service. You cannot expect the sort of well-behaved, tightly clustered results you’d get from a wired Ethernet network. So we take a large set of datapoints, order them from best (fastest) to worst (slowest), and organize them into percentiles. We can then make a line graph displaying each STA’s results, like the sample graph shown here. The 50% mark represents the median: half of the latencies measured for the STA were better than the value at the 50% mark, and half were worse. Similarly, at the 75th percentile, 75% were better and 25% were worse.

Sample set of 5 GHz multi-client test results
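Turning raw latencies into these curves takes only a sort and a percentile lookup. The Python sketch below uses the nearest-rank percentile definition; the article doesn’t say which definition its graphs use, so treat that choice, and the 5% step between plotted points, as assumptions.

```python
import math
from typing import Dict, Iterable, Sequence


def percentile(sorted_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not sorted_ms:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_ms) / 100))
    return sorted_ms[rank - 1]


def latency_curve(latencies_ms: Iterable[float],
                  points: Iterable[int] = range(5, 101, 5)) -> Dict[int, float]:
    """Order samples from best (fastest) to worst, then read off each percentile."""
    ordered = sorted(latencies_ms)
    return {p: percentile(ordered, p) for p in points}
```

Plotting `latency_curve(samples)` for each STA, with percentile on the X axis and milliseconds on the Y axis, gives one line per station; the entries at 50 and above are the “worst half” of the dataset.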

It’s important to evaluate the performance of a network across the range of results, because that’s how humans using it will actually perceive it. Nobody wants a network that’s 25% faster on average, if it’s also completely broken for 10% of all requests!

In our example above, we see an access point doing a pretty good job of servicing all STAs. The lines are pretty tightly clustered, and they stay below 1500 ms all the way to the 95th percentile mark on the X axis. This means that all four STAs will get their “webpage” in less than 1500 ms in 19 out of 20 attempts. The 1500 ms (1.5 second) threshold is a relatively arbitrary value that we’re declaring “decent results” for this test.

We can see things go off the rails for STA D after the 95th percentile; its line has a “knee” going almost purely vertical at the 95th percentile mark. This means that at least one page load during the test run did not complete and timed out at 60 seconds. A few (fewer than 5%) page loads going astray in a relatively challenging test like this is not really a death sentence. But it does indicate that every now and again, when the network is really busy, users will need to click “refresh” in a browser.
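Both readings of the graph can be computed directly from one station’s samples. In this hypothetical helper, a timed-out page load is assumed to be recorded as 60,000 ms, and the 1500 ms “decent results” line is treated as a strict upper bound; the knee is the percentile at which the timed-out samples begin once the samples are sorted.

```python
from typing import Dict, Optional, Sequence

THRESHOLD_MS = 1500.0   # the "decent results" line used in this test
TIMEOUT_MS = 60_000.0   # assumption: a timed-out page load is recorded as 60 s


def summarize(latencies_ms: Sequence[float]) -> Dict[str, Optional[float]]:
    """Share of page loads under the threshold, timeout count, and where the knee starts."""
    n = len(latencies_ms)
    under = sum(1 for ms in latencies_ms if ms < THRESHOLD_MS)
    timeouts = sum(1 for ms in latencies_ms if ms >= TIMEOUT_MS)
    # Sorted best to worst, timed-out samples sit at the very end, so the curve
    # jumps straight up to 60 s at this percentile.
    knee = 100.0 * (n - timeouts) / n if timeouts else None
    return {"pct_under_threshold": 100.0 * under / n,
            "timeouts": timeouts,
            "knee_percentile": knee}
```

A station with 95 fast page loads and 5 timeouts comes out at 95% under the threshold with a knee at the 95th percentile, which is the shape described for STA D.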

Roaming Tests

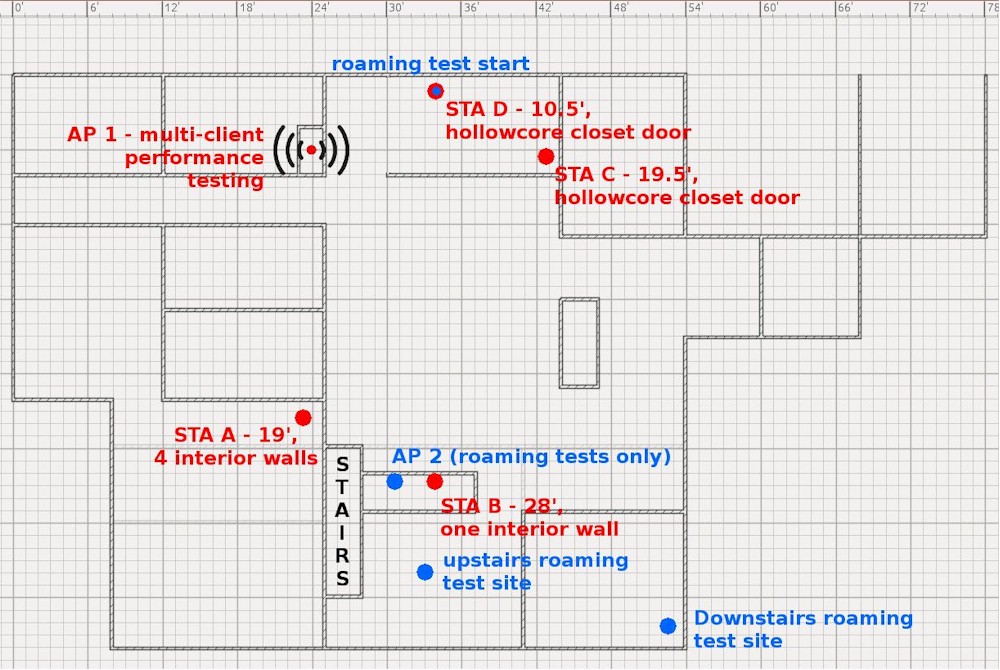

Roaming is the process of moving a wireless device’s (STA’s) established Wi-Fi association from one access point to another without losing connection. To test roaming, I set up a second AP (in controller, cluster, or managed mode, if available) as shown in the floorplan below. I connect the Chromebook’s onboard Intel AC 7265 to the WLAN and start a script that makes it loudly go “BING!” each time the connected BSSID or frequency changes.
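The author’s “BING!” script isn’t published here. A minimal Python equivalent, assuming a Linux STA with the `iw` utility installed and a wireless interface named `wlan0` (both assumptions), could poll `iw dev <iface> link`, which prints a `Connected to <BSSID>` line and a `freq:` line while associated, and ring the terminal bell whenever either value changes.

```python
import re
import subprocess
import time
from typing import Optional, Tuple

BSSID_RE = re.compile(r"^Connected to ([0-9A-Fa-f:]{17})", re.MULTILINE)
FREQ_RE = re.compile(r"^\s*freq:\s*([0-9.]+)", re.MULTILINE)


def parse_link(text: str) -> Optional[Tuple[str, int]]:
    """Extract (bssid, freq_mhz) from `iw dev <iface> link` output; None if unassociated."""
    bssid = BSSID_RE.search(text)
    freq = FREQ_RE.search(text)
    if not bssid or not freq:
        return None  # e.g. iw printed "Not connected."
    return bssid.group(1).lower(), int(float(freq.group(1)))


def watch(iface: str = "wlan0", interval_s: float = 0.5) -> None:
    """Poll the link forever and ring the terminal bell when BSSID or frequency changes."""
    last: Optional[Tuple[str, int]] = None
    while True:
        out = subprocess.run(["iw", "dev", iface, "link"],
                             capture_output=True, text=True).stdout
        current = parse_link(out)
        if current != last:
            print("\aBING!", time.strftime("%H:%M:%S"),
                  current if current else "not associated", flush=True)
            last = current
        time.sleep(interval_s)
```

Running `watch("wlan0")` in a terminal while carrying the laptop along the walk path gives one bell per change of BSSID, band, or association state, with a timestamp to line up against the walk.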

I then walk a predetermined path through the house. Starting from Station D, 10.5′ from the first access point, I walk around the living room island to the farthest wall, about 10′ behind Station B. At this point, the second access point is a good twenty feet closer than the first. If a roaming event doesn’t occur within ten seconds or so, I’ll start doing some iperf3 runs—sometimes access points don’t trigger roaming events on idle stations.

Test floorplan—larger view

After the STA does (or doesn’t) move from AP1 to AP2, I walk downstairs to the farthest corner of the basement. This should ideally trigger a band-steering event from 5 GHz to 2.4 GHz on AP2, since that spot is far enough from both access points that 2.4 GHz is substantially more effective. After giving the access points time (and prompting) to switch APs and/or bands at both stops, I walk back in reverse order and see how quickly the STA switches first back to 5 GHz on AP2 at the top of the stairs, and then to 5 GHz on AP1 as I return to Station D.

This roaming evaluation isn’t a perfect science, since I have yet to figure out how to capture an 802.11k neighbor report or an 802.11v BSS transition management frame in flight. And not all APs (or STAs) use 802.11k, v, or r to roam anyway. That said, it’s extremely clear that the APs themselves make a difference: roaming is extremely “sticky” and slow with some kits, and so rapid and hyperactive as to be annoying with others.

While I have multiple APs set up, I also evaluate ease of deployment and management, as well as the quality of roaming, band-steering, and how well the system distributes stations between available access points.

To the tests!

D-Link DAP-2610

| At a glance | |
|---|---|
| Product | D-Link Wireless AC1300 Wave 2 Dual-Band PoE Access Point (DAP-2610) |
| Summary | Qualcomm-based 2×2 AC1300 class “Wave 2” access point with PoE and support |
| Pros | • Many management options including built-in AP cluster controller • Lots of client bandwidth management options |
| Cons | • Web admin can be confusing • No roam assist features • Poor 5 GHz performance in open air testing |

Typical Price: $136

D-Link’s DAP-2610 is a very neutral, smallish oval, with a 12 VDC jack and one PoE RJ-45 jack in a recessed bay on its back side. There’s a small steel loop bracket at its side; I’m not sure what it’s for, but it may be for mounting on a junction box.

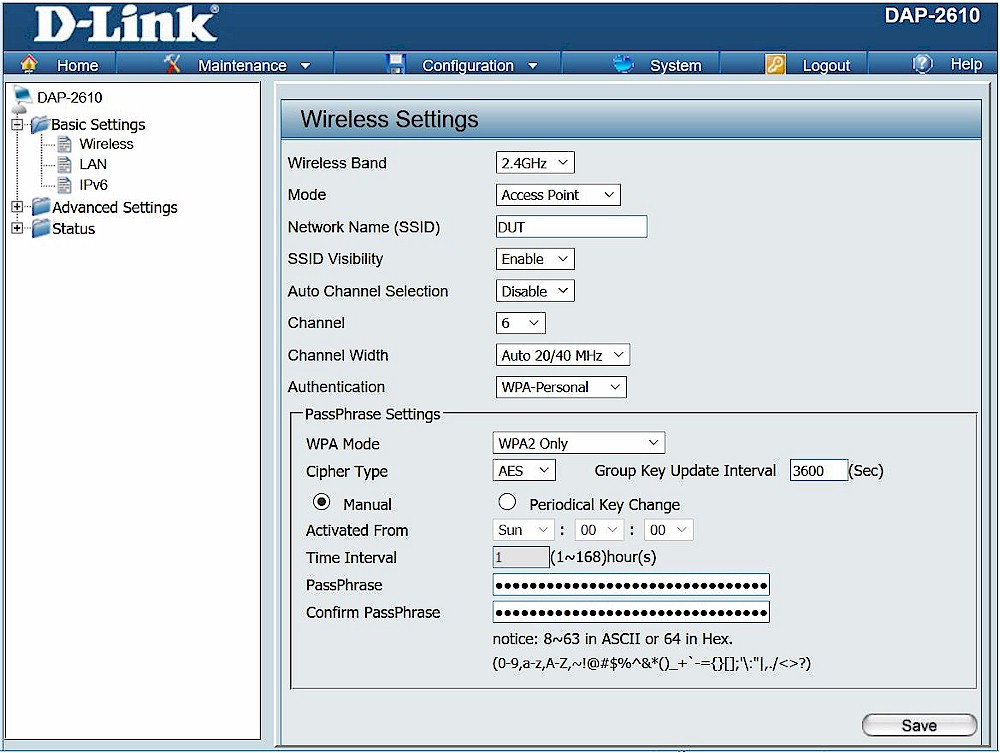

The DAP-2610 is statically addressed from the factory at 192.168.0.50. Unlike Zyxel’s NWA1123-AC, it just plain works at that static address: you can connect to it from either of its factory SSIDs, or from a LAN it’s plugged into, with no odd hitches or glitches. The UI is usable, if a little more primitive-looking than I’d prefer; it uses default browser folder icons and the simplest possible controls. In short, it looks like it was hacked together by engineers, with no input from web developers or a UI focus group.

D-Link DAP-2610 web admin

My biggest complaint was that nothing tells you that you need to go elsewhere to apply your changes after making them, but you do. The “Apply changes” command is hidden under the top “Configuration” menu, and clicking it reboots your AP immediately. If you forget that step, your changes will be gone after the AP reboots, even changes to the admin password. (On the plus side, this AP reboots remarkably quickly.) Primitive UI or no, it was easy enough to set up all three of my test SSIDs (one 2.4 GHz, one 5 GHz, and one dual-band).

The firmware upgrade section uses the familiar old-school “I’ll apply it if you have it” method: you must go looking for a download on your own, download a ZIP file, extract a BIN from it, and then browse to it from the DAP-2610’s UI. Nothing tells you whether your firmware is current or outdated.

I had a lot of difficulty getting the DAP-2610’s multi-client testing started on the 5 GHz band. Both STAs A and B were extremely balky about passing the SSH keypair authentication that’s part of the net-hydra multi-STA synchronization controller. SSH keypair authentication is a very chatty protocol that just plain doesn’t like slow, unstable networks.

Poking around at the setup, I discovered ping times frequently shooting into the 200 ms range at these stations, despite a reported link quality in the 80s (out of 100). iperf3 runs tended to fail with completely hung TCP threads, showing 40+ seconds of 0 Mbps throughput after more typical ten-second periods producing 7-24 Mbps. I eventually managed to get successful authentication from both STAs A and B, allowing me to complete 5 GHz testing.

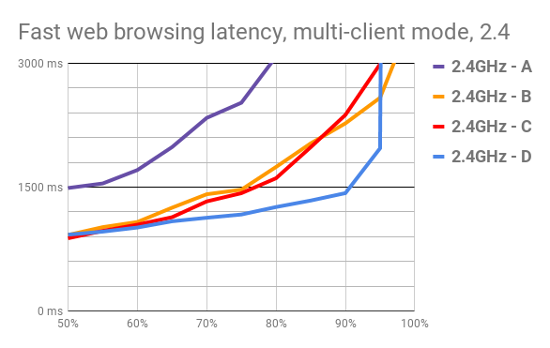

The 2.4 GHz application latency curves for the DAP-2610 are pretty reminiscent of the NWA1123-AC’s: they’re widely separated, with much steeper slopes than we’d like to see. The wide separation of STA A from STAs B and C, and of STAs B and C from the very short-range STA D, strongly implies range issues with the AP.

D-Link DAP-2610’s 2.4 GHz application latency curves

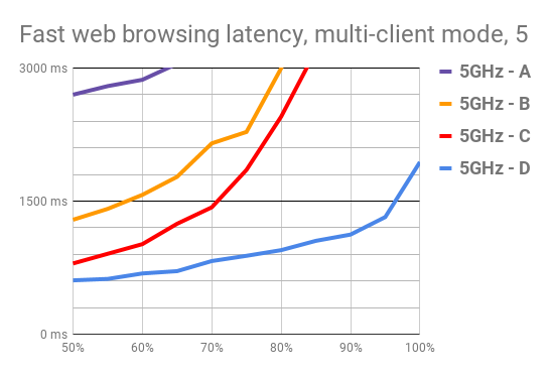

The DAP-2610 performed significantly worse on 5 GHz than it did on 2.4 GHz, which was not a surprise after the SSH keypair authentication problems I had on this band. Again we see wide separation of the extreme range STA A from the moderate-range STAs B and C, which are themselves widely separated from the very short-range STA D. This is not an access point that performs well at any significant—or even not-so-significant—range.

D-Link DAP-2610’s 5 GHz application latency curves

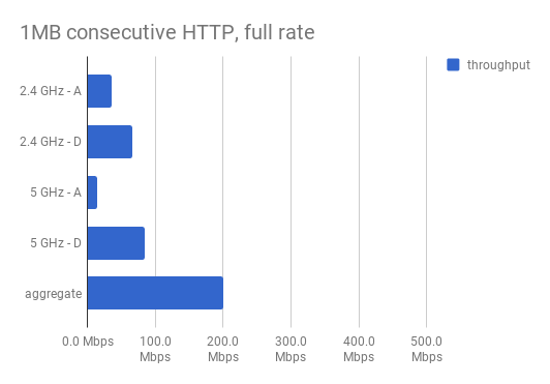

The DAP-2610 posted the worst overall 5 GHz throughput numbers of any access point in the round-up. Although its overall 2.4 GHz throughput was much better, ranking 3rd of 8 just behind the Edimax CAP1200, its poor 2.4 GHz performance at the long-range STA A, 6th of 8, is more informative. This is not an access point you want to get very far away from.

D-Link DAP-2610’s 2.4 GHz single-client throughput

Because the DAP-2610’s 5 GHz connectivity was so flaky, I powered down the first AP, set up a second, and began the test procedures again with it. I immediately saw the same issues with difficulty authenticating using keypairs in SSH, so I did not complete the full test run on the second DAP-2610 AP; instead, I powered the first AP back on and began testing roaming.

I did not realize at the time that D-Link had a Central WiFiManager software controller available, so I did not test it. With both APs set up using identical settings, I began my standard walkthrough path.

The DAP-2610 does not support 802.11k, v, or r. But roaming behavior while connected to the DAP-2610s was excellent, with prompt roaming to AP2/5GHz as I walked behind AP2, and roaming to AP2/2.4 GHz as I reached the bottom of the stairwell. The DAP-2610s didn’t seem happy with the connection when I got to the far corner downstairs, and I got several unprompted roaming events between AP1/2.4 GHz and AP2/2.4 GHz while standing in the corner. Signal in the corner while connected to AP2/2.4 GHz was -63 dBm, quality 43/70; iperf3 gave a reliable 50 Mbps down / 20 Mbps up. Moving back upstairs, the station roamed back to AP1/5GHz as it was placed back on the test stand near AP1.

Further poking around at my earlier issues with SSH keypair authentication showed that as long as the two devices communicating were connected to different bands—or different APs!—they could get through the handshake fine. The only problems occurred when both client and server were connected to the same 5 GHz radio on the same access point.

Edimax CAP1200

| At a glance | |
|---|---|
| Product | Edimax 2 x 2 AC Dual-Band Ceiling-Mount PoE Access Point (CAP1200) |
| Summary | Business class indoor ceiling mount AC1200 class AP supporting fast roaming and built-in RADIUS server |
| Pros | • Built-in RADIUS server • Good AP placement tool • Traffic shaping for Guest networks • Well-behaved roaming |
| Cons | • Web admin takes a while to save changes • Terrible 2.4 GHz performance (Revision 10 retest) |

Typical Price: $90

Edimax’s CAP1200 is a medium-sized, flat, off-white disc with dark grey branding in the center, a single LED of medium size and brightness at the bottom, and its RJ-45 Ethernet jack hidden in the back. There is no setup URL (like NETGEAR’s routerlogin.net or TP-Link’s tplinkeap.net), so you’ll have to find the AP on your LAN using an “attached devices” feature in your router, or maybe an nmap scan.
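If nmap isn’t handy, a crude substitute is to try TCP connections to the web ports of every address on your subnet. The Python sketch below does that; the subnet, port list, and timeout are assumptions to adjust for your LAN, and it should only be pointed at networks you administer.

```python
import ipaddress
import socket
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def port_open(host: str, port: int, timeout_s: float = 0.5) -> bool:
    """True if a TCP connection to host:port succeeds within timeout_s."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def find_web_uis(cidr: str, ports: Sequence[int] = (80, 443),
                 timeout_s: float = 0.5) -> List[str]:
    """List 'host:port' for every address in cidr that accepts TCP on one of ports."""
    targets = [(str(ip), port)
               for ip in ipaddress.ip_network(cidr).hosts() for port in ports]
    with ThreadPoolExecutor(max_workers=64) as pool:
        results = list(pool.map(lambda t: port_open(t[0], t[1], timeout_s), targets))
    return [f"{host}:{port}" for (host, port), ok in zip(targets, results) if ok]
```

On a typical home LAN, `find_web_uis("192.168.1.0/24")` lists every device with a web server, so you would still need to browse to each hit (or compare against your router’s client list) to pick out the AP.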

Once you’ve found the CAP1200’s IP address, there are no shenanigans involved in getting it set up—when you browse to its IP address, you’ll be HTTP 302’d to HTTPS, you accept the snakeoil certificate warning, and you’re in; no fuss, no muss.

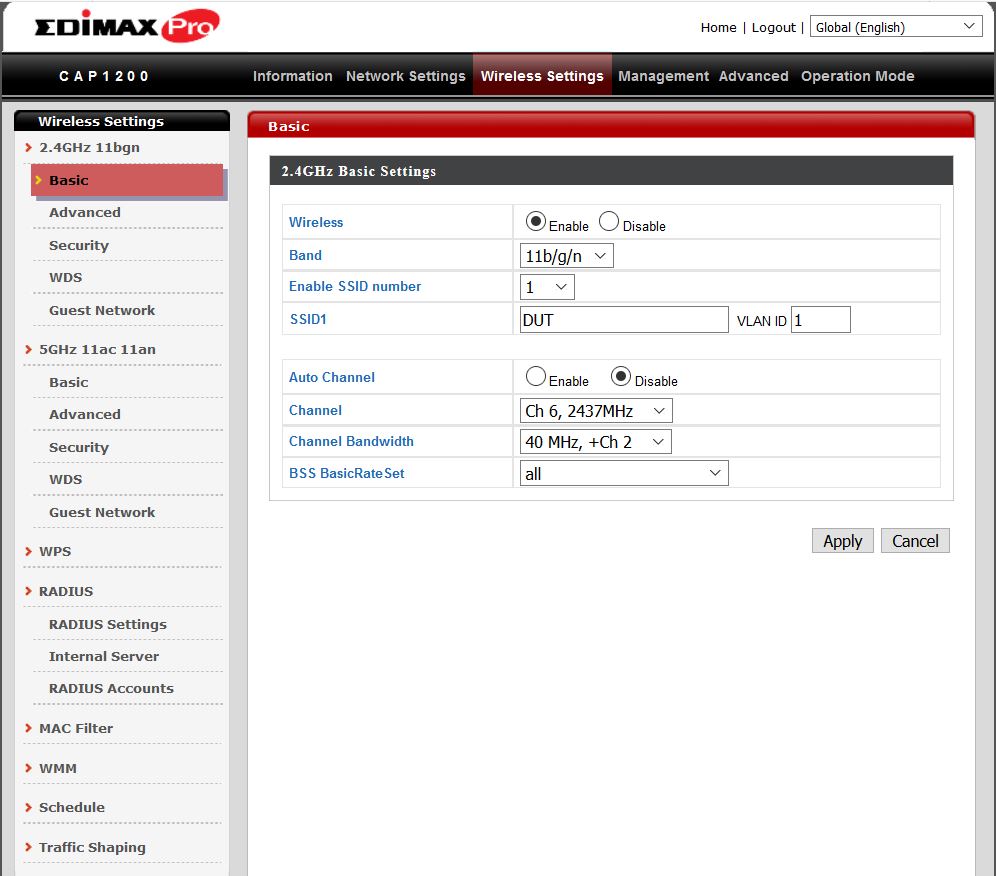

The interface itself is clean and well organized; most admins should have no trouble figuring out where to go and what to do. Setting up multiple SSIDs is a little quirky: the CAP1200, like many dual-band access points, has you configure its radios separately. Each radio has an “Enable SSID” option with a number next to it (1 by default), and if you change it to 2, 3, etc., more text boxes appear for setting up additional SSIDs.

Edimax CAP1200 web admin

There’s also a section for setting up 802.11r roaming assistance that’s a bit quirky. If you enable 802.11r, it asks you for a “mobility domain” and an “encryption key”, which no other router or access point has asked me to configure. Googling got me to an Edimax FAQ in pretty short order: the “mobility domain” is basically whatever you’d like it to be, as long as it’s precisely four alphanumeric characters, and the encryption key is essentially like an old WEP key, 32 hexadecimal characters, no more, no less. These values must be present and identical on every CAP1200 access point you want to help client devices roam between.
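Because every CAP1200 in the roaming group needs identical values, it’s easiest to generate them once and paste them into each AP. The Python sketch below does that using the constraints described in the Edimax FAQ (four alphanumeric characters for the mobility domain, 32 hexadecimal characters for the key); it draws the mobility domain from hex digits, which satisfies the four-alphanumeric rule. The helper names are hypothetical and not part of any Edimax tool.

```python
import re
import secrets
from typing import Dict


def new_ft_settings() -> Dict[str, str]:
    """Generate one shared 802.11r mobility domain and key for every AP in the group."""
    return {
        "mobility_domain": secrets.token_hex(2),   # 4 hex characters (all alphanumeric)
        "encryption_key": secrets.token_hex(16),   # 32 hex characters, i.e. 128 bits
    }


def valid_ft_settings(mobility_domain: str, key: str) -> bool:
    """Check the length and character rules described in the Edimax FAQ."""
    return (re.fullmatch(r"[0-9A-Za-z]{4}", mobility_domain) is not None
            and re.fullmatch(r"[0-9A-Fa-f]{32}", key) is not None)
```

Using `secrets` rather than typing a memorable string keeps the key unpredictable, which matters because it plays the same role an old WEP key did.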

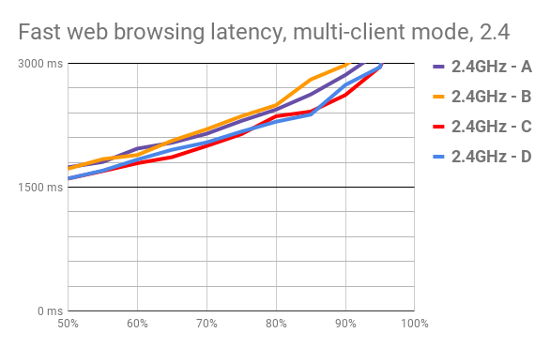

Although its 2.4 GHz radio struggled significantly, the CAP1200 managed to distribute airtime pretty fairly between the stations and produce a nice, clean, reliable latency curve. Under the torturous conditions we gave it (roughly equivalent to 15-30 devices with humans actively browsing websites), nobody’s session would have felt fast—but nobody’s would have felt horrifically slow or unreliable, either.

Edimax CAP1200’s 2.4 GHz application latency curves

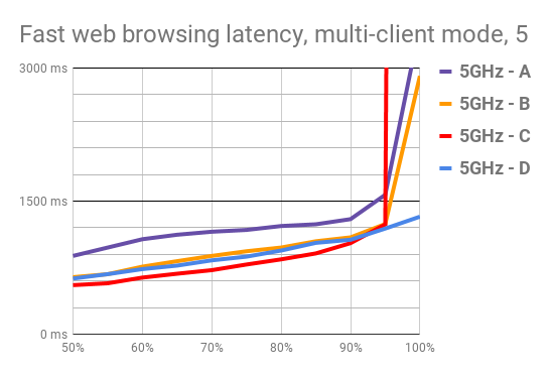

The CAP1200’s 5 GHz performance was quite good overall, although it failed to load seven total pages on Station C. Those failed page loads are why you see a sharp near-vertical “knee” at 95%. Its 5 GHz response curve overall is still on par with the better access points tested, such as Ubiquiti’s UAP-AC-Lite, TP-Link’s EAP-225 and OpenMesh’s A60.

Edimax CAP1200’s 5 GHz application latency curves

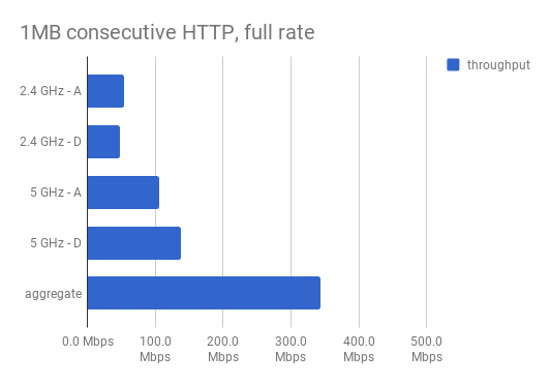

The Edimax’s single-client throughput was extremely good on both frequencies, though oddly lower on 2.4 GHz at STA D (the close-range STA) than STA A (the one behind four interior walls). Despite this odd hiccup, the CAP1200’s 2.4 GHz throughput was third highest in the roundup and its 5 GHz the absolute highest.

Edimax CAP1200’s single-client throughput

However, you really shouldn’t get too excited about single-client throughput scores by themselves. Although we’re all used to them, they are not a reliable indicator of multi-client performance; to borrow a phrase from a colleague, “just one or two devices is literally zero peoples’ use case.”

After putting the first AP through its paces, I changed its “Operation Mode” from Access Point to “AP Controller.” This disabled its radios entirely, but made it ready to, you guessed it, act as a controller and host for my other two CAP1200s. This was a relatively straightforward process; it automatically adopted the two other APs once they were visible on its network, without any further intervention on my part required. With the third AP acting as controller, the system distributed my four STAs fairly evenly between the two active (non-controller) APs.

Once adopted, new APs automatically inherit whatever settings you’ve applied to the “default” WLAN group—but the whole process is decidedly pokey. It took roughly ten minutes for my two new APs to finish being adopted, get their SSID and radio settings, and come back online.

The CAP1200 controller offers a nice, full set of options controlling how frequently (if at all) to scan for channel congestion and hop APs around to avoid it. There’s also a pretty nice “Zone Plan” page that appeared to have already placed my APs within it, in roughly the same relation to one another as in real life. All in all, if you can stand the pokey waiting for configuration changes to apply, this is a pretty nice controller to manage a bunch of access points from—and using an extra $100 AP to run it isn’t such a bad thing either.

The CAP1200 supports 802.11k and r. As I roamed along my testing path through the house, the Intel AC 7265 NIC on my Chromebook moved crisply and decisively, hopping to AP2/5 GHz within ten seconds of my arrival behind Station B, and from 5 GHz to 2.4 GHz less than five seconds after I arrived at the far corner downstairs. Retracing my steps, it hopped back to AP2 on 5 GHz within a few seconds of my reaching the top of the stairs, and went to AP1/2.4 GHz within a few seconds of my return to the test stand at Station D. It remained on 2.4 GHz there, likely because the APs were configured for “balanced” band steering rather than specifically favoring 5 GHz.

It was very clear that assisted roaming, despite being a little odd to configure, was quick and logical for the CAP1200.

Linksys LAPC-1200

| At a glance | |
|---|---|
| Product | Linksys AC1200 Dual Band Access Point (LAPAC1200) |
| Summary | Qualcomm-based AC1200 class 2×2 access point with PoE |
| Pros | • Built-in cluster controller |
| Cons | • Physically large • Relatively expensive • No roam assistance • Very poor 2.4 GHz multi-client test performance |


The LAPC-1200 is an unusually large hexagon with obnoxiously bold LINKSYS branding in the center. It comes with a printed paper drilling template, which is pretty nice.

There’s no configuration URL for an LAPC-1200; you’ll need to find the AP’s IP address by way of an nmap scan, DHCP logs, the “attached devices” interface in your router, Fing, or what have you in order to configure it. You also need to avoid connecting to the 2.4 GHz or 5 GHz open SSIDs it provides in its factory condition. The manual makes no mention of this, but while a STA connected to one of these factory SSIDs can reach your LAN just fine, it can’t reach the web UI for the AP itself; for that, you’ll need to be connected directly to the LAN, upstream of the AP.

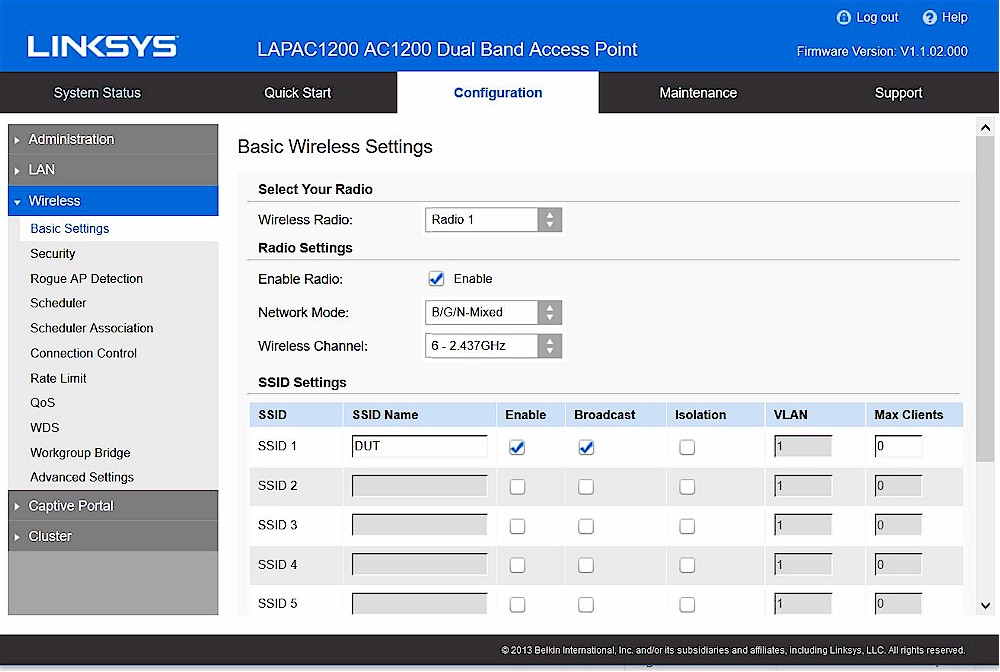

Linksys LAPAC1200 admin GUI

Once you’ve connected to the LAPC from the LAN, you can log into it using either HTTP or HTTPS with no issues. First stop is a firmware update. The LAPC1200 can be fed an upgrade package from a local file, from TFTP, or from the internet with an easy “Check for Upgrades” button. I updated mine from the “Check for Upgrades” button, with no problems.

The initial configuration, via the Quick Setup wizard, was fairly painless. The radios are labeled “Radio 1” and “Radio 2” with no indication of band, but the factory default SSIDs are named “SMB24” and “SMB5” or similar, so it’s easy to figure out that Radio 2 is the 5 GHz one. You can set up to four SSIDs per radio, via a primitive here’s-your-four-text-boxes interface. There is no obvious way to actually disable any of your four SSIDs; all you can do is leave their text boxes blank. This didn’t give me much confidence that no accidental back door is left into the system. VLAN configuration per SSID is available.

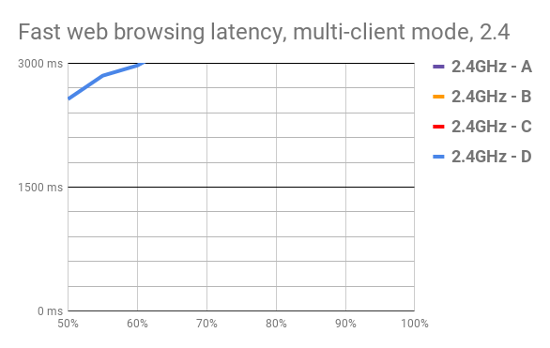

The less said about the LAPC-1200’s 2.4 GHz performance, the better. Only a single STA even made it onto our standard graphing space, which ends the Y axis at 3000 ms, and even then it stayed there only briefly. Anyone attempting to make significant use of these APs on 2.4 GHz is going to have a Very Bad Time indeed.

Linksys LAPC-1200’s 2.4 GHz application latency curves

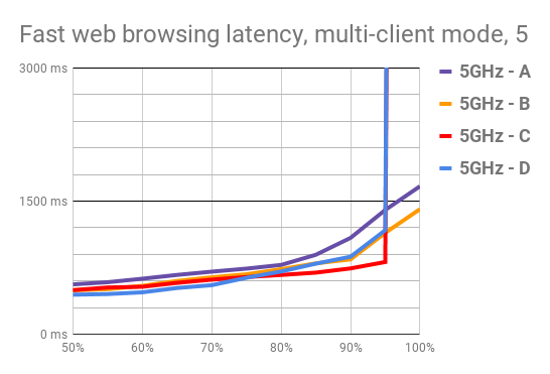

The LAPC-1200 did significantly better on 5 GHz, with low, tightly grouped STA lines that didn’t really begin to diverge until around the 82% mark. Two of the four STAs did fail on a number of attempts, which produced the sharp knees at the 95th percentile you see for STAs C and D.

Linksys LAPC-1200’s 5 GHz application latency curves

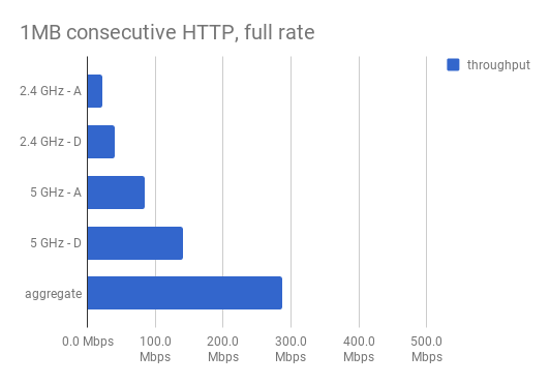

The LAPC-1200 performed very poorly on 2.4 GHz in single-client, maximum-throughput testing. Its 22.0 Mbps score for 2.4 GHz at STA A is particularly bad, and both STAs scored lower on this band than with any other AP tested. Its 5 GHz numbers were markedly better, placing it in the top four at the difficult-to-reach STA A and earning it top honors at 140.3 Mbps at the extreme close-range STA D.

Linksys LAPC-1200’s single-client throughput

Moving on to testing with multiple APs, the LAPC-1200 takes a different approach from most kits: instead of a controller, it opts for “cluster mode”. Cluster mode is quite simple to set up; you just set your first AP’s operation mode to Master, set any others as Slave, and you’re done. The configurations you set on the Master AP will be automatically replicated to all of the others. An auto channel configuration feature allows you to select how frequently the APs will scan their RF surroundings to look for the least congested channel, which is a nice touch.

Despite my manually enabling band steering, none of the eight connections across the four STAs went to 2.4 GHz, not even at STA A, where the 5 GHz connection is quite dicey for these APs. All eight of my NICs (one WUSB-6300 and one Intel 7265 in each STA) went to 5 GHz, although they did spread evenly across the available APs. The channels the APs auto-selected did not overlap: AP1 took Ch1/Ch44 and AP2 took Ch6/Ch157.
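Why Ch1 and Ch6 count as non-overlapping comes down to center-frequency spacing: 2.4 GHz channel numbers sit 5 MHz apart, so two 20 MHz channels only stay clear of each other when their numbers differ by at least four. The Python sketch below checks that, assuming plain 20 MHz channels throughout; it compares primary channel centers only, so it does not model 40 or 80 MHz bonded channels, and legacy 802.11b’s wider 22 MHz mask is why 1, 6, and 11 remain the usual 2.4 GHz picks.

```python
CHANNEL_WIDTH_MHZ = 20  # assumption: every channel is a plain 20 MHz channel


def center_mhz(channel: int) -> int:
    """Center frequency in MHz of a 2.4 GHz or 5 GHz Wi-Fi channel number."""
    if channel == 14:
        return 2484                      # Japan-only 2.4 GHz channel, off the 5 MHz grid
    if 1 <= channel <= 13:
        return 2407 + 5 * channel        # 2.4 GHz band: channel 1 = 2412 MHz
    if 32 <= channel <= 177:
        return 5000 + 5 * channel        # 5 GHz band: channel 36 = 5180 MHz
    raise ValueError(f"unsupported channel number: {channel}")


def overlaps(ch_a: int, ch_b: int) -> bool:
    """Two 20 MHz channels overlap when their centers are less than 20 MHz apart."""
    return abs(center_mhz(ch_a) - center_mhz(ch_b)) < CHANNEL_WIDTH_MHZ
```

Under these assumptions, `overlaps(1, 6)` and `overlaps(44, 157)` are both `False`, matching the channel plan the LAPC-1200s picked on their own.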

The LAPC1200 does not support 802.11k, v, or r. On leaving the test stand near AP1, my laptop roamed to AP2/5GHz before I even reached the top of the stairs, let alone walked around behind AP2. Upon reaching the far corner downstairs, it hopped from AP2/5GHz to “not associated” to AP1/5GHz to “not associated” and back to AP2/5GHz: six roaming events in six seconds. It really, really did not want to try 2.4 GHz at all, even after a manual disconnect and reconnect. It finally hopped to AP2/2.4 GHz about ten seconds after being manually reconnected, and seemed content to stay connected once there.

When I moved back up to the top of the stairs near AP2, the laptop did not roam back to 5 GHz easily, even after a thirty-second wait followed by a complete and very sad 3.5 Mbps iperf3 run. Some time after that iperf3 run, it finally hopped back to AP2/5 GHz, on which connection an iperf3 run produced 65 Mbps. Finally, it roamed back to AP1/5GHz after ten seconds on the test stand at STA D.

There was no doubt that the LAPC-1200s were “assisting” my test laptop in roaming events—but while they did a good job of steering between APs, they did an absolutely awful job steering between bands. To be fair, the LAPC-1200’s horrible 2.4 GHz performance probably doesn’t help that any; I’m not sure when you’d actually want to be connected to one on 2.4 GHz in the first place.

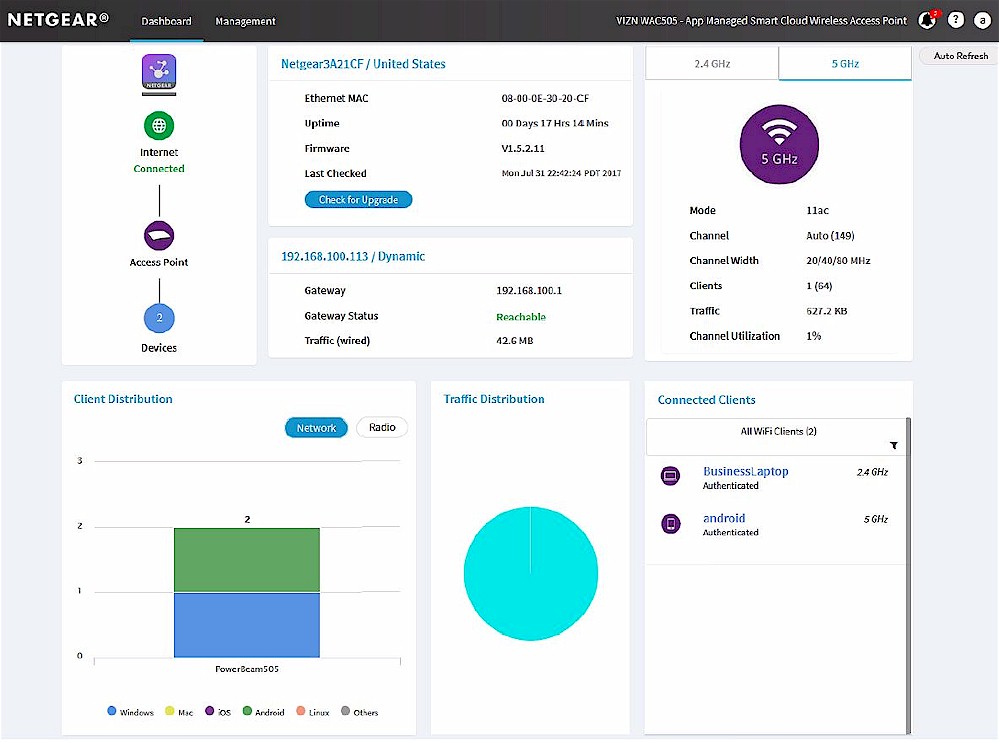

NETGEAR WAC-505

| At a glance | |
|---|---|
| Product | NETGEAR Insight Managed Smart Cloud Wireless Access Point (WAC505) |
| Summary | AC1200 class dual-stream PoE-powered access point with MU-MIMO support |
| Pros | • Supports AP load balancing and band steering |
| Cons | • Not all settings available via app • Smartphone app is not suitable for managing multiple APs • No MU-MIMO disable |


The NETGEAR WAC-505 is a small, unusually lightweight off-white rectangular lozenge. Its Ethernet jack is hidden behind the back, and the front sports an unusual five LEDs—LAN, 2.4 GHz, 5 GHz, power… and something odd, a bit like a recycling logo (three little arrows twisted to make a clockwise-pointing circle). It booted very quickly, noticeably faster than most APs.

Once booted, the WAC-505 produces 2.4 and 5 GHz WLANs. The SSIDs themselves are “SSID” plus a phrase unique to each AP, and are “secured” with the PSK “sharedsecret”. The usual NETGEAR setup URL, routerlogin.net, works to connect to a new WAC505 for configuration.

Also as typical for NETGEAR, the web UI is very well laid out, easy to navigate and attractive. My only complaint is an interminable list of alphabetically ordered time zones in the “Day Zero” wizard for initial configuration—am I going to be in Eastern, or New York, or US/New York, or US/Eastern, or…? You have no choice but to scroll through a hundred-plus possibilities in the drop-down box to find out.

Once you get through that, the “real” configuration UI is easy to navigate; I had no difficulty setting up my standard three test SSIDs (one 2.4 GHz, one 5 GHz, and one dual-band) and enabling 802.11k and 802.11r for them. The “firmware upgrade” button is also prominently placed at the top of the dashboard home, and the dashboard warns you that newer firmware is available, and that you should upgrade, without your needing to click anything.

NETGEAR WAC505 Web GUI

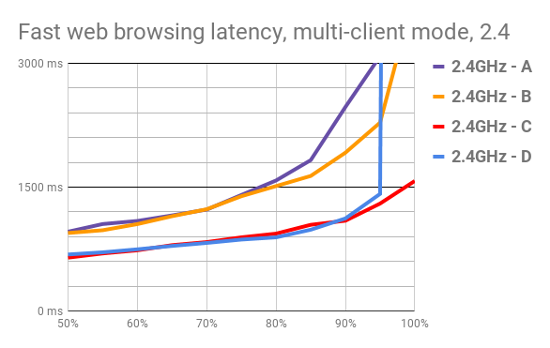

The WAC505 did quite well on 2.4 GHz, aside from dropping a page or two at Station D. About four out of five page loads completed in 1500 ms or less at all stations, which is better than it sounds when you realize just how much traffic and congestion we’re throwing at an AP here: our four stations’ combined 32 Mbps of traffic is roughly equivalent to 15-30 stations with actual humans behind them animatedly browsing websites.

NETGEAR WAC505’s 2.4 GHz application latency curves

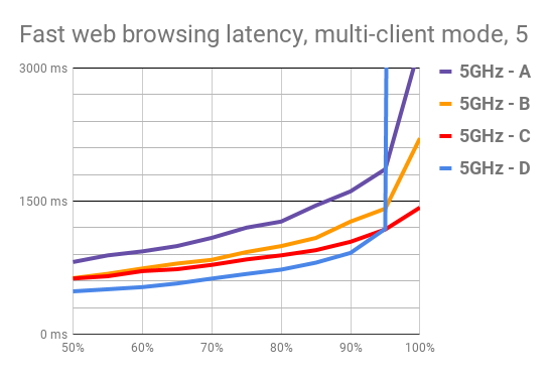

The WAC505’s application latency curves at 5 GHz are still tightly grouped, and do a slightly better job bringing home the data, completing page loads in 1500 ms or less in 9 of every 10 attempts. The close grouping of the curves for the four STAs is also a good sign: when the curves match closely, as they do here, it indicates good airtime management by the AP.

NETGEAR WAC505’s 5 GHz application latency curves

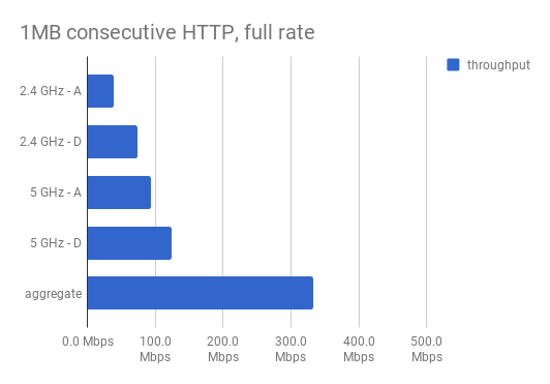

When testing with a single client, the WAC-505 produced some of the best throughput of the round-up. Only the Edimax CAP1200 outperformed it in overall raw throughput, with slightly lower 2.4 GHz scores and slightly higher 5 GHz scores.

NETGEAR WAC-505’s single-client throughput

With the performance testing on a single AP done, it was time to shift to multiple access points and a controller. For the WAC505, this means NETGEAR’s new Insight cloud controller: the APs report to a NETGEAR-managed controller running in a datacenter somewhere. NETGEAR’s pretty proud of Insight; it currently offers free “Basic” management for up to two APs (additional APs cost $5/year apiece) or “Premium” management at $1 per AP per month. Basic management only allows use of the mobile app; you need Premium if you want to use the web UI. I didn’t want to junk up my phone with another app, so I took the free seven-day trial of Premium.

Philosophical objections aside, I didn’t enjoy this interface much. There was no network discovery of APs; you have to type each AP’s serial number into a text box, after which the cloud controller eventually “finds” it. (There’s a QR code on the back of each AP, which does at least heavily imply that I could’ve just scanned it, if I’d been willing to use the mobile app.)

Adding a new AP was obnoxious and slow; mine reported “Disconnected” with no apparent reason why for a good ten minutes after I’d added it. Hitting that AP’s web interface directly on the LAN similarly showed its status as “Cloud Disconnected” with no reason given. Eventually, I tried to upgrade its firmware from the local web interface, only to be told “An upgrade is already in progress”—which was apparently triggered from the cloud controller. After that invisible upgrade process finished and the AP rebooted, it showed up on the Insight controller.

Changing my existing, standalone AP to Insight-managed mode worked the same way—type its serial number into the Insight controller and wait. Annoyingly, Insight was perfectly willing to add my standalone AP and report on its status, even though it was in standalone mode and already configured, not in Insight-managed mode. I’m suspicious of unforeseen infosec pitfalls in that area, since it means anyone with an Insight account can get some degree of monitoring of any arbitrary NETGEAR access point just by serial number shopping.

When I went back to my standalone AP’s local interface and switched it to Insight-managed mode, it warned me that it would drop its SSIDs, and then disappeared for reconfiguration. In the meantime, I configured my usual three test SSIDs and enabled “Fast Roaming” and band steering in the Insight controller. It took another ten-plus minutes before the formerly standalone AP finished reconfiguring itself and showed up as “Connected” in Insight.

Every page load or refresh in Insight tended to take ten to twenty seconds of staring at a CSS-rendered “spinning circle of wait” icon waiting for lists of devices, APs, or what have you to render. I really did not enjoy Insight at all.

When I stepped away from Insight and recycled the connections on my four stations, they distributed themselves between the two APs, all staying on 5 GHz.

The WAC505 supports 802.11k and r. As I walked my path through the house from Station D, the “Fast Roaming” feature I’d enabled in Insight was very clearly present and functioning. Roaming to AP2/5 GHz occurred just before I arrived behind Station B upstairs, and the hop from 5 GHz to 2.4 GHz occurred before I’d quite reached the far corner downstairs. Tracing my steps back, I was back on 5 GHz at AP2 at the top of the stairs. When I arrived back at Station D, where I’d started, the laptop roamed back from AP2 to AP1 unprompted after about ten seconds. This was easily the best roaming experience of all the access points tested.

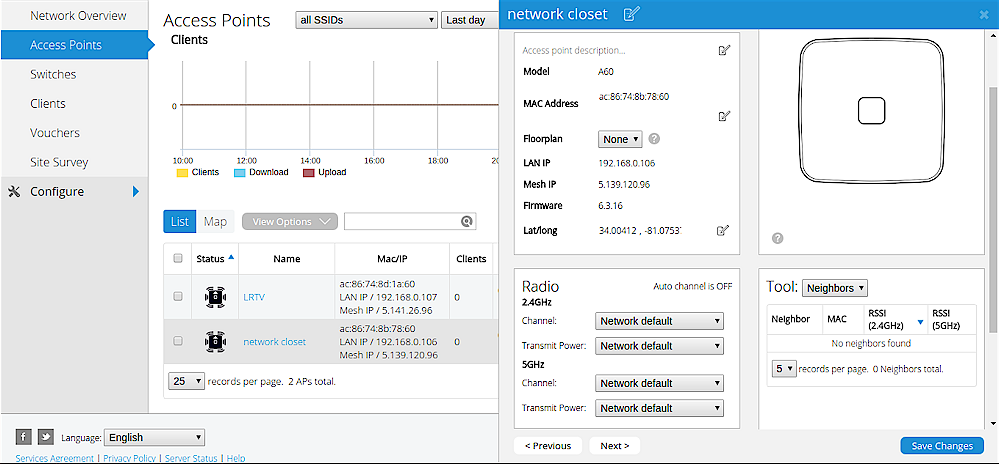

OpenMesh A60

| At a glance | |
|---|---|
| Product | Open Mesh Universal 802.11ac Access Point (A60) |
| Summary | 3×3 AC1750 class PoE-powered cloud-managed access point. Supports Wi-Fi backhaul |
| Pros | • PoE powered • Supports mesh backhaul • Cloud-based portal supports multiple sites • Good for indoor and outdoor use |
| Cons | • No local admin option • Iffy, inconsistent roaming in open air tests |


Technically speaking, I shouldn’t be testing the OpenMesh A60 here at all—the A60 is a 3×3 access point, and this is supposed to be a 2×2 AP round-up. But while Tim got A40s, I got A60s, so I tested what I had on hand. I do not believe that the extra MIMO stream really gave the A60 any advantage with my strictly 2×2 test stations.

The A60 is a pleasant-looking slim rectangle, with an unusual yellow activity LED. Unlike the rest of the APs tested here, it’s a moisture-resistant indoor/outdoor design with a floppy rubber gasket securing the cable access bay in its back. The gasket also grips one or two Ethernet cables entering from the bottom—yes, two Ethernet cables. Also unlike the other APs in the roundup, the A60 (and its smaller brother, the A40, which Tim tested) features two RJ-45 jacks, which can be a significant benefit for more loosely-managed smallbiz or home infrastructures.

That extra Ethernet jack means that you can power the A60/A40 from a central Power over Ethernet switch and extend that connection to non-powered wired devices plugged into its second jack. This might not be a big deal for fully and well-planned sites, but it could be a lifesaver for “I found it this way” infrastructure that tends not to have enough in-wall cabling available.

The A60/A40 are also different in that they have no standalone mode whatsoever. You’ll need a working internet connection to set up and manage OpenMesh access points, since they’re designed specifically for management by OpenMesh’s “CloudTrax” cloud controller, with no local alternative possible. Philosophically, this is a downside. If OpenMesh goes out of business or stops operating CloudTrax, all these A60s and A40s turn into paperweights unless somebody designs new standalone firmware for them. But it’s potentially pretty appealing to the “don’t care, I don’t have to fiddle with it” set, since it Just Works without needing to set up any apps, controllers, or what have you.

CloudTrax UI

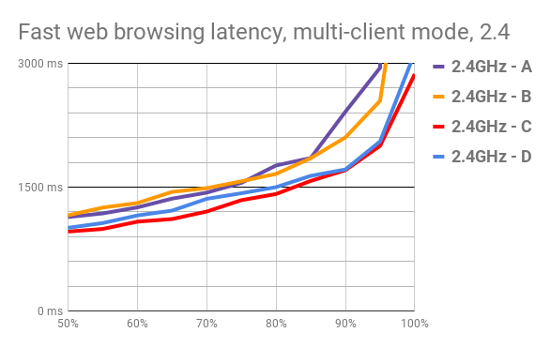

The A60 did a solid, workmanlike job on 2.4 GHz. It did not take top honors here, but all four stations showed very similar response curves, and three of every four page loads completed in under 1500 ms.

OpenMesh A60’s 2.4 GHz application latency curves

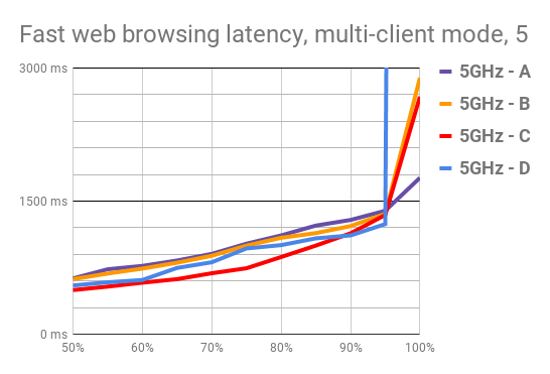

On 5 GHz, the A60 presents a pretty solid profile. All four stations managed sub-1500ms page loads for 19 of every 20 attempts here, with an unfortunate “knee” at 95% for Station D, which failed to load a few pages. It’s interesting how frequently this pattern repeats across the various models of APs we tested, since Station D has by far the best site placement of the four.

OpenMesh A60’s 5 GHz application latency curves

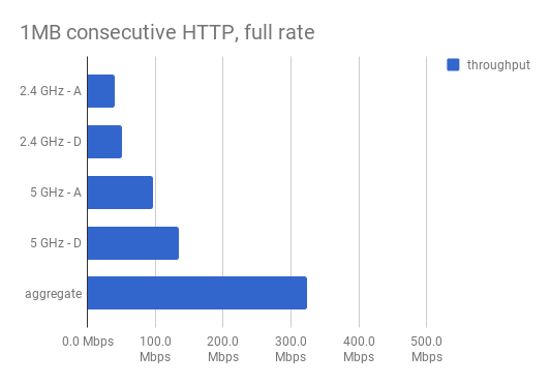

The A60 posted very good single-client throughput numbers. Its 2.4 GHz performance was unexciting but solid, and its 5 GHz performance was outstanding, second only to the Edimax CAP-1200.

OpenMesh A60’s single-client throughput

Unlike NETGEAR’s Insight cloud controller, OpenMesh’s Cloudtrax is “free as in beer”. There is no cost to set up a single AP at a single site… or a thousand APs at a hundred sites. However many APs you buy, the right to cloud-manage them comes along at no additional charge. Cloudtrax is absolutely awash with retail-friendly options, like the ability to charge for access via PayPal vouchers, log in with Facebook, etc.

It also offers a greater ability to separate clients from infrastructure than most APs, going so far as to run private DHCP servers for each SSID within the network, unless that SSID has been “bridged” to the LAN within Cloudtrax. This is where it starts to get sysadmin-unfriendly, though; you can only bridge a single SSID to the LAN, which means that if you need multiple SSIDs, you’re stuck with multiple private subnets whether you want them or not. Worse, I couldn’t even find a way to configure which private subnets are allocated. So far as I could tell, you get whatever randomly chosen /24 in the 10.0.0.0/8 range (a “10-dot class C”) it feels like deploying for whichever non-bridged SSID you’re on, and that’s that.
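Cloudtrax picks the private subnet for each non-bridged SSID itself, so it's worth checking that the /24 it hands out doesn't collide with anything already routed on your LAN or VPN. Below is a minimal sketch using Python's standard `ipaddress` module. The subnets shown are hypothetical examples, not values Cloudtrax actually assigned:

```python
import ipaddress

def conflicts(ssid_subnet, lan_subnets):
    """Return every LAN subnet that overlaps the subnet handed to a non-bridged SSID."""
    ssid_net = ipaddress.ip_network(ssid_subnet)
    return [net for net in (ipaddress.ip_network(s) for s in lan_subnets)
            if ssid_net.overlaps(net)]

# Hypothetical values for illustration only.
assigned = "10.73.12.0/24"                                  # /24 given to an un-bridged SSID
site = ["192.168.1.0/24", "10.73.0.0/16", "10.200.5.0/24"]  # networks already in use

print(conflicts(assigned, site))
# [IPv4Network('10.73.0.0/16')]
print(ipaddress.ip_network(assigned).subnet_of(ipaddress.ip_network("10.0.0.0/8")))
# True
```

Any non-empty result means clients on that SSID would have trouble reaching the overlapping network. Because the subnet isn't configurable, the only recourse is to change the conflicting network on your side.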

Cloudtrax, like Insight, was decidedly pokey about applying configuration changes and adopting new APs, taking as much as ten minutes before a change propagated to the APs. The firmware upgrade process—which was automatic, no intervention required—was extremely slow; it took more than half an hour before the activity LEDs stopped being an angry red and the APs showed as available within Cloudtrax. The SSIDs were available and the APs active during most of that process, though.

The actual performance tests here were done with the appropriate SSID bridged to the LAN for the duration of the test. I did a little exploratory testing of the performance of un-bridged SSIDs, and I was decidedly unimpressed—I only saw about 40 Mbps up / 60 Mbps down on the Intel AC 7265 onboard NIC when standing ten feet in clear line of sight from an access point. I’m not sure if this is a NAT issue, or traffic shaping buried somewhere in the Cloudtrax interface that I didn’t find, or what.

802.11r and band steering were both marked BETA in the Cloudtrax interface and disabled by default, so I left them that way. The A60s produced iffy, inconsistent roaming. The Intel AC 7265 generally would not roam until I’d stood at a new spot for thirty-plus seconds and run iperf3 several times to prod it. It did not shift to 2.4 GHz in the downstairs far corner until after several extremely shaky ~10 Mbps iperf3 runs, although it hopped back to 5 GHz willingly enough when I walked back up the stairs. As I’ve mentioned before, interpreting roaming behavior is unfortunately as much art as science, but I’m pretty sure the Intel AC 7265 was receiving no assistance at all from the A60s.
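One way to take some of the art out of reading roaming behavior is to log the station's association as you walk. The article doesn't say how roams were recorded for these tests, so this is not that method. It is a sketch that assumes a Linux station with the `iw` utility, and it parses the text of `iw dev <iface> link`, whose exact format can vary between `iw` versions:

```python
import re
import subprocess
from typing import List, Optional, Tuple

Link = Tuple[str, Optional[float], Optional[int]]  # (bssid, freq_mhz, signal_dbm)

def band_for(freq_mhz: Optional[float]) -> str:
    """Map a centre frequency in MHz to a coarse band label."""
    if freq_mhz is None:
        return "unknown"
    if 2400 <= freq_mhz < 2500:
        return "2.4 GHz"
    if 5150 <= freq_mhz < 5900:
        return "5 GHz"
    return "other"

def parse_iw_link(text: str) -> Optional[Link]:
    """Parse `iw dev <iface> link` output; returns None when not connected."""
    bssid = re.search(r"Connected to ([0-9a-fA-F:]{17})", text)
    if bssid is None:
        return None
    freq = re.search(r"freq:\s*([\d.]+)", text)
    signal = re.search(r"signal:\s*(-?\d+)\s*dBm", text)
    return (bssid.group(1).lower(),
            float(freq.group(1)) if freq else None,
            int(signal.group(1)) if signal else None)

def roam_events(snapshots: List[Optional[Link]]):
    """List (index, before, after) wherever the (BSSID, band) pair changes.

    A None snapshot means the station was not associated at that moment.
    """
    keys = [None if s is None else (s[0], band_for(s[1])) for s in snapshots]
    return [(i, keys[i - 1], keys[i])
            for i in range(1, len(keys)) if keys[i] != keys[i - 1]]

def poll_link(iface: str = "wlan0") -> Optional[Link]:
    """Run `iw` once and parse it (Linux only; the interface name is an assumption)."""
    out = subprocess.run(["iw", "dev", iface, "link"],
                         capture_output=True, text=True, check=True).stdout
    return parse_iw_link(out)
```

To use it, call `poll_link()` every second or so while walking the route, then pass the collected snapshots to `roam_events`. The returned indices line up with however you timestamp each poll, so you get a record of when each AP or band change happened rather than relying on watching a status display.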

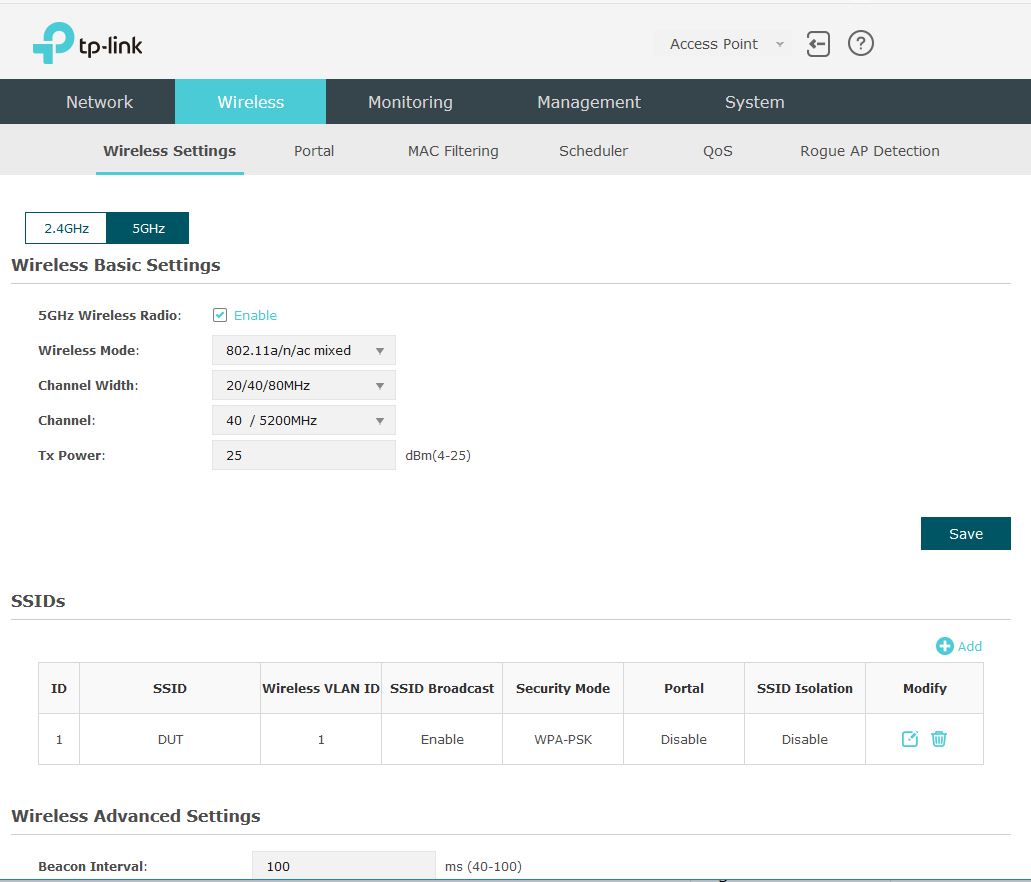

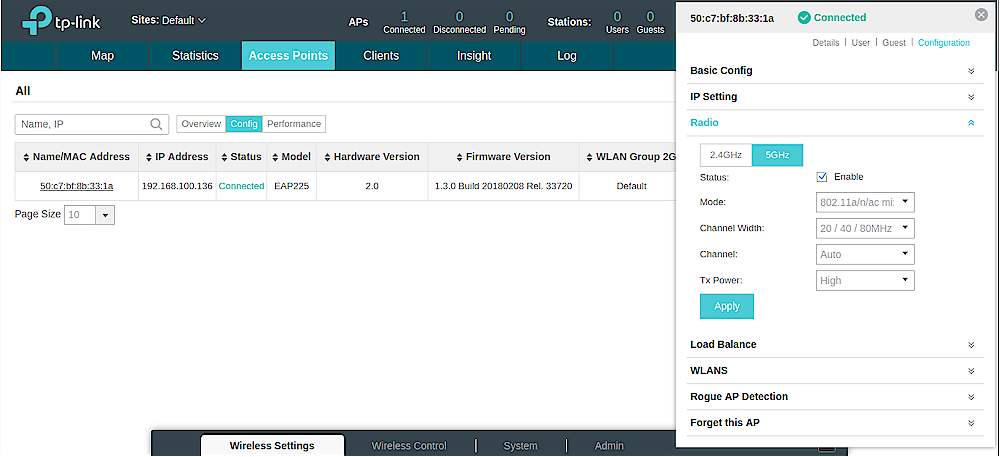

TP-Link EAP-225 v2

| At a glance | |
|---|---|
| Product | TP-LINK AC1200 Wireless Dual Band Gigabit Ceiling Mount Access Point (EAP225 v2) |
| Summary | Qualcomm-based AC1200 class 2×2 PoE-powered access point. |
| Pros | • Inexpensive • Great performance • Excellent multi-client performance |
| Cons | • No roaming assistance • No client bandwidth controls |

Typical Price: $65

The EAP-225 is an inoffensive, chunky rectangle. It’s neither particularly attractive nor particularly ugly; just kind of a bland off-white box on the wall. Instead of the more typical RJ-45 access ports on the back, the EAP-225 places them on its bottom edge—which could be a boon in a chaotic SMB environment (easy to get to!) or a curse in a more tightly-managed enterprise environment (easy to get to).

The first thing I noticed about the EAP-225 was how complete, functional, and usable its standalone web interface is. I’m most familiar with Ubiquiti’s UAP line—and with those, you really need to set up their Unifi controller to access more than a tiny fraction of the functionality of the access points. Not so with the EAP-225. Logging into a single EAP’s web UI presents you with everything from multiple SSIDs to VLANs (with rudimentary QoS!) to a working captive portal—all with no controller required. All the functionality was well laid-out and easy to find, and the UI was quite responsive.

TP-Link EAP225 web GUI

The only place I could really ding the EAP-225’s interface is in trying to identify the hardware version. This model has three hardware revs—v1, v2, and v3—and they have separate firmware lines. I eventually had to give up and go look at the physical label on the access point. The upgrade process itself is the old-school “go hit the internet in another tab, see if there’s a download available, download it, extract the .BIN from the .ZIP you just downloaded, then go back to the firmware UI and click browse and find the .BIN” fandango. Painful. Most vendors really need to do a better job here, and TP-Link is unfortunately no exception.

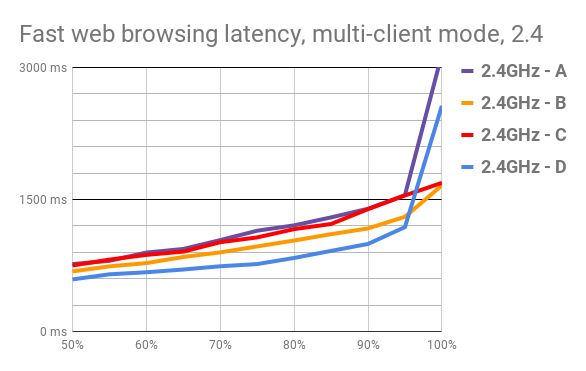

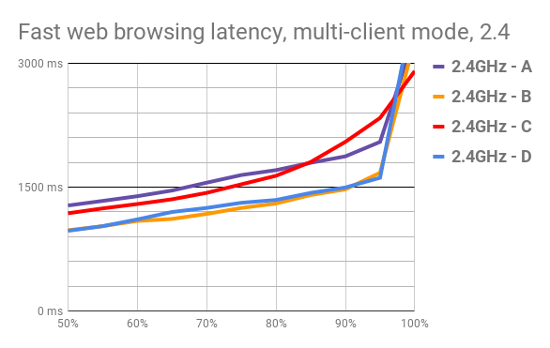

The EAP-225 did a flawless job on 2.4 GHz. Spoiler alert, this is as good as it gets for this round-up; do not expect to find a better set of 2.4 GHz curves for any other kit. Our STA lines here are tightly grouped, well below 1500ms for most of the x axis, and increase smoothly and incrementally instead of suddenly and drastically. This represents a very predictable usage experience that makes users comfortable. While STAs A and D did both take a noticeable sharp uptick at the 99th percentile, neither failed to load a page, with a worst case return of 3150ms.

TP-Link EAP-225 v2’s 2.4 GHz application latency curves

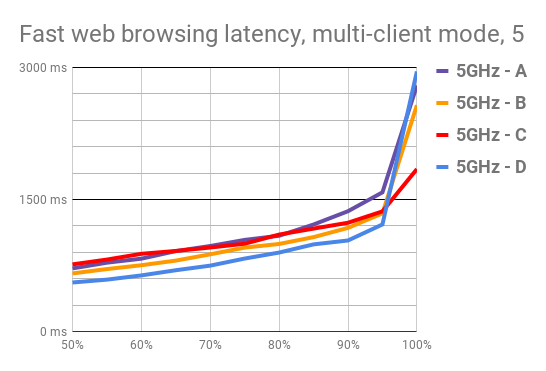

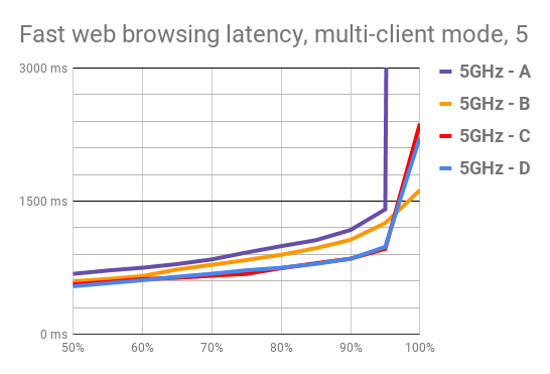

Things don’t look much different on the 5 GHz band. We’ve still got four tightly grouped STA lines, smooth, incremental increases in latency across the x axis, and worst-case results in the 3000ms range. This is a really predictable, consistent set of results—once again, the best set of results in the round-up.

TP-Link EAP-225 v2’s 5 GHz application latency curves

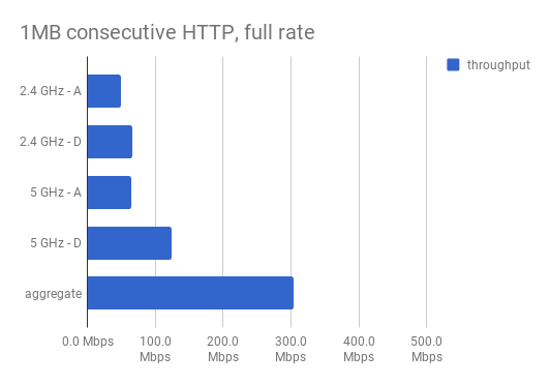

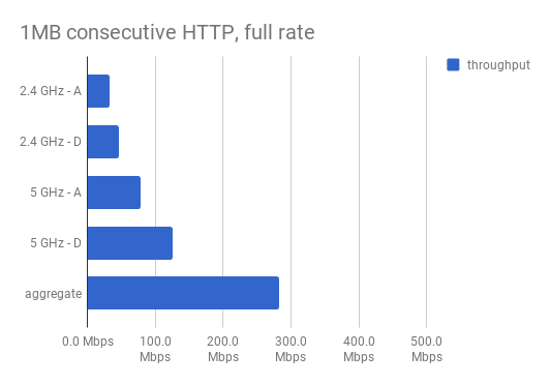

The EAP-225 posted excellent scores for 2.4 GHz throughput, neck-and-neck with NETGEAR’s top-scoring WAC-505. Its 5 GHz maximum throughput scores were middling, roughly on par with Ubiquiti’s UAP-AC-Lite. Environments that expect to actively use the 2.4 GHz band as well as 5 GHz would have a tough time finding a better-suited AP.

TP-Link EAP-225 v2’s single-client throughput

Moving on to the management and roaming tests, I downloaded and installed TP-Link’s free “AuraNet” controller that comes in Windows, Linux, and MacOS flavors. I installed it on a crappy little six-year-old Windows laptop I had lying around and had a pretty seamless experience. The controller, which calls itself Omada more frequently than Auranet, needs Java and prompted me for a Windows Firewall exception for the same, which I granted.

TP-Link Auranet controller

Once inside the Auranet/Omada controller, everything went pretty smoothly. I had a little trouble figuring out WLAN grouping, but I got there eventually. It’s just a little confusing figuring out when to hit the APs from the controller’s center dialog, vs. when to expand the system dialog, which lurks along the bottom of the screen. Adopting APs was quick and seamless. There is a single button marked “Forget All APs” that does exactly what it says on the tin… so don’t click it unless you mean it. It does leave the APs in functional standalone configuration once forgotten, thankfully.

Firmware upgrades are still the EAP-225’s Achilles’ heel, even when managed from a controller. You can batch apply firmware upgrades to multiple APs, but you still have to do the find-it-download-it-extract-it-browse-to-it fandango from the controller first—a sharp contrast to Ubiquiti’s model, which automatically discovers and notifies you of firmware upgrades, and optionally allows them to be automatically applied without any admin intervention as well.

With the second AP adopted, recycling the WLAN on the four test STAs distributed them evenly between the two APs, but all on 5 GHz, despite attaching to a dual-band SSID. I’m not sure if a greater number of STAs would ever result in any being automatically attached on 2.4 GHz. The channels did not overlap on either 2.4 GHz or 5 GHz between the two APs.
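On 2.4 GHz, “overlap” comes down to arithmetic. Channels 1 through 13 are centred at 2407 + 5 × n MHz, only 5 MHz apart, while a 20 MHz-wide transmission spans far more than that. The sketch below treats two equal-width channels as overlapping when their centres are closer than one channel width. That rule is a simplifying assumption that ignores spectral-mask skirts, but with 20 MHz channels it reproduces the familiar 1/6/11 plan:

```python
def center_mhz_24(channel):
    """Centre frequency (MHz) of a 2.4 GHz Wi-Fi channel, 1-14."""
    if channel == 14:
        return 2484  # channel 14 is a special case, 12 MHz above channel 13
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    raise ValueError(f"not a 2.4 GHz channel: {channel}")

def overlaps_24(ch_a, ch_b, width_mhz=20):
    """True if two equal-width channels' centres are closer than one channel width.

    Simplified model: ignores spectral-mask skirts and adjacent-channel leakage.
    """
    return abs(center_mhz_24(ch_a) - center_mhz_24(ch_b)) < width_mhz

print(overlaps_24(1, 6))   # False: centres 25 MHz apart
print(overlaps_24(1, 4))   # True: centres 15 MHz apart
print(overlaps_24(6, 11))  # False
```

Passing `width_mhz=22` models the older 802.11b DSSS channel width, under which channels 1 and 5 also collide. On 5 GHz, 20 MHz channels (36, 40, 44, …) are already spaced 20 MHz apart, so overlap there comes mainly from bonding channels into 40 or 80 MHz widths.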

The EAP-225 doesn’t support 802.11k, v, or r. I found roaming with the EAP-225s passable, but not world class. The Intel AC 7265 stubbornly maintained its association with AP1 while I walked behind AP2 and on to the farthest corner of the house on the bottom floor. It roamed to 5 GHz on AP2 shortly after I reached the far corner downstairs, and from there to 2.4 GHz on AP2 a few seconds later, without any network activity (read: without iperf3 runs) on the STA. The STA hopped back to 5 GHz on AP2 as I reached the top of the stairs, and stayed associated with AP2 after being returned to the test stand near AP1.

Ubiquiti UAP-AC-Lite

| At a glance | |
|---|---|
| Product | Ubiquiti 802.11ac Dual Radio Access Point (UAP-AC-LITE) |
| Summary | Inexpensive AC1200 class access point |
| Pros | • Many features including airtime fairness and band steering • Relatively inexpensive • Decent performance in multi-client load testing |
| Cons | • Meh performance • Can be hard to find features in Unifi GUI • Roaming speed not great unless signal levels are very low |

Typical Price: $100

Like a lot of small business integrators, I’ve considered Ubiquiti’s UAP access point line to be the gold standard of small-biz Wi-Fi for several years now. They’re small, cheap, attractive and get the job done well… assuming you’ve got the infrastructure available to set up their Unifi controller on a PC somewhere, back it up regularly, and recover it if something goes wrong.

Each model of UAP, including the Lite, is a smallish off-white disc with a “ring” activity LED on the front and a hidden access bay on the back, allowing for hidden cable installations. They’re pretty easy to mount, but a little persnickety to dismount and get to the cabling; this can be a feature or a bug, depending on your environment.

Relatively recently, Ubiquiti made it possible to configure UAPs in a standalone mode, using a tablet or mobile phone. The kicker is that, unlike most of the APs tested in this roundup, UAPs without their controller are dumbed down to the point of making a Linksys WRT54G look smart. When configured standalone, they only offer a single SSID and no frills. Worse, you can manage standalone UAPs only from an app on your smartphone or tablet—there’s no web UI at all for non-app configuration.

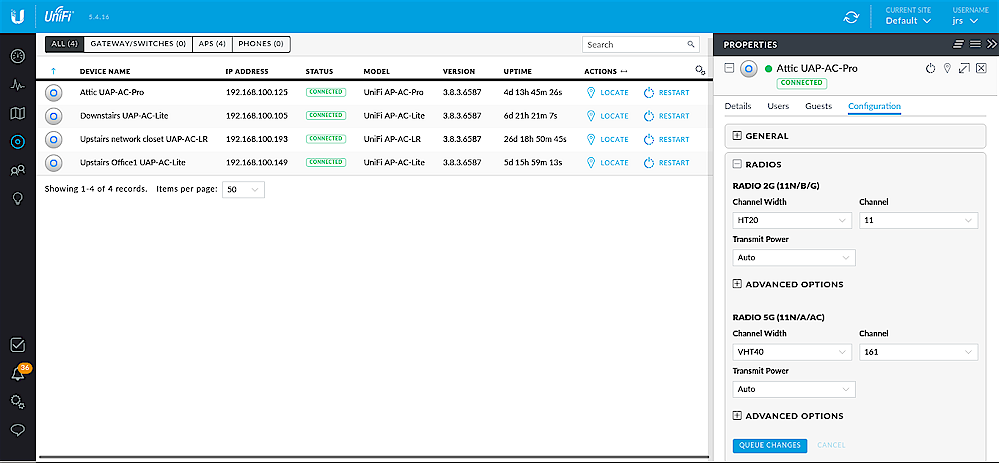

This is the only set of access points I did not actually test in standalone mode; I’ve had the experience of “managing” them with a phone already, and did not care to repeat it here. For these tests, I just disabled all but one of my house’s production UAP-AC-Lites from the Unifi Controller, and shifted it to a different WLAN group that offered the proper SSIDs and settings for testing.

The UAP-AC-Lite does a solid, workmanlike job on 2.4 GHz. Although the STA lines are higher than I’d prefer them to be, breaking 1500ms between the 80%-85% marks, they’re fairly tightly grouped and predictable, with only a moderate rise at the 99th percentile, and no flat-out failed page loads during the five-minute run at 2.4 GHz.

Ubiquiti UAP-AC-Lite’s 2.4 GHz application latency curves

The UAP-AC-Lites perform much better on 5 GHz than they did on 2.4 GHz, staying well below 1500ms clear to the 95th percentile. We do see a nasty knee in the data for STA A—our worst-sited STA—which failed entirely on several page loads during the run.

Ubiquiti UAP-AC-Lite’s 5 GHz application latency curves

The UAP-AC-Lite’s single-client, maximum throughput numbers are best described as “serviceable.” Although neither 2.4 GHz nor 5 GHz stands out as impressive, neither particularly disappoints, either. Its 5 GHz throughput ranks 4th out of 8 devices, and while its 2.4 GHz throughput ranks 7th overall, it does not perform much worse at the distant STA A than it does at the close-range STA D, which softens the blow considerably.

Ubiquiti UAP-AC-Lite’s single-client throughput

Managing a larger set of Unifi Access Points is easy, using Ubiquiti’s free Unifi Controller software, which can be downloaded for Windows, Mac, or Linux. The UAPs tested here were being controlled by an instance of Unifi running on a dedicated Ubuntu virtual machine; this is actually the production network for my house.

The controller is managed from a browser, and exposes pretty much all the functionality you could ask for—multiple SSIDs, WLAN groups, VLANs, captive portal, radio strengths, minimum RSSI, steering behavior, and so forth—reasonably cleanly. It is afflicted with a small dose of super-cool-Web-2.0 flash that can get in the way a little bit at times, but it’s easy enough to pick up as you go along.

Ubiquiti Unifi controller

If you don’t have a server available to run Unifi on, you can buy a Ubiquiti Cloud Key for about $80. The Cloud Key is a USB powered (you’ll need to provide your own power supply) dongle that hangs off your LAN switch and runs Unifi, saving its data on an 8 GB Micro SD card.

The problem here is that the Cloud Key uses a non-journaling filesystem and a non-crash-safe database, so if you lose power, you run about a 50% chance of corrupting your data. If you lose power and lunch the Cloud Key’s storage, you’d better hope you have a good backup. If not, your UAPs will continue to function as configured, but you won’t be able to view their status, manage, or reconfigure them without a hardware paperclip reset on each and every one. (Yes, I’ve been bitten by this issue personally.) Caveat emptor.

Ubiquiti UniFi Cloud Key controller

Another thing to be careful of with the UAP-AC-Lite is that it began supporting 802.3af PoE only recently. Older hardware revs of the UAP-AC-Lite supported 24V passive PoE only, meaning they cannot use power from a standard 802.3af PoE switch—only from their own injectors, or special 24V passive switches. It’s difficult to tell whether you’ve got an 802.3af-capable UAP even when looking at the box (there’s a pale gray “PoE” marking on the front), and, at least for now, your odds of getting one or the other from online vendors are pretty much a toss-up.

As mentioned in the first section of the review, it’s possible but emphatically not recommended (by me, at least) to run UAPs standalone—if you do, you’ll need to set up and manage each AP individually using a mobile app on a smartphone or tablet. No standalone management is possible from a standard browser. Should you decide to set up UAPs this way, you will be locked out of 90% of their functionality.

After completing the multi-client tests, I adopted and enabled a second UAP-AC-Lite and upgraded its firmware from within the Unifi controller. This is a relatively quick process; it’s a little slower than adopting APs in TP-Link’s Auranet/Omada controller but much, much quicker than dealing with the cloud-based offerings like OpenMesh Cloudtrax or NETGEAR Insight.

The AC Lite currently supports 802.11r. Once the AP was provisioned, I picked up STA D from its test stand near AP1 and walked across the house to the usual point behind AP2; approximately fifteen seconds later, the laptop roamed to AP2/5 GHz. The laptop did not shift to 2.4 GHz when I reached the farthest corner and waited thirty seconds, but did shift to AP2/2.4 GHz in the middle of the first iperf3 test run there.

Walking back upstairs, it hopped back to AP2/5 GHz within a few seconds of reaching AP2. The laptop remained on AP2/5GHz thereafter; even when placed on the test stand near AP1 and after thirty seconds’ wait and several iperf3 runs, it was not inclined to roam again. This is pretty typical roaming behavior when connected to UAPs—they roam readily when the connection is poor, but don’t hop around much when it’s good, even if there’s a theoretically better one available.

Zyxel NWA1123-ACv2

| At a glance | |
|---|---|
| Product | Zyxel 802.11ac Dual-Radio Ceiling Mount PoE Access Point (NWA1123-ACv2) |
| Summary | Qualcomm-based AC1200 2×2 PoE-powered access point. |
| Pros | • Very small footprint • Decent performance |
| Cons | • No band steering • Setup much more difficult than it should be • Poor performance in multi-client load test |


Updated 5 April 2018: Product retested due to defective AP

The NWA1123-AC is a chunky disc, smaller in radius but several times thicker than a Ubiquiti UAP-AC-Lite. It has a hidden bay in the back of the device providing access to its 12VDC and RJ-45 jacks; it’s also got a little “cable maze” on the way out of the bay which secures the weight of the device on the Ethernet cable, not on the jacks, should the device fall off a wall. I really liked that little touch.

I initially had an extremely poor experience with both setup and performance of the NWA1123ac, but this turned out to be due to a defective access point. While it’s not great that a defective AP made its way out the door, it would be unfair to judge the entire line based on one defective part’s performance. So we’ve updated the results (and the setup experience) to reflect the typical behavior. I paperclip reset the second of three NWA1123ac access points in my possession and set it up from scratch, then logged three complete new test runs under the same conditions to confirm a true, repeatable baseline. The updated results here are from the median of those three runs.

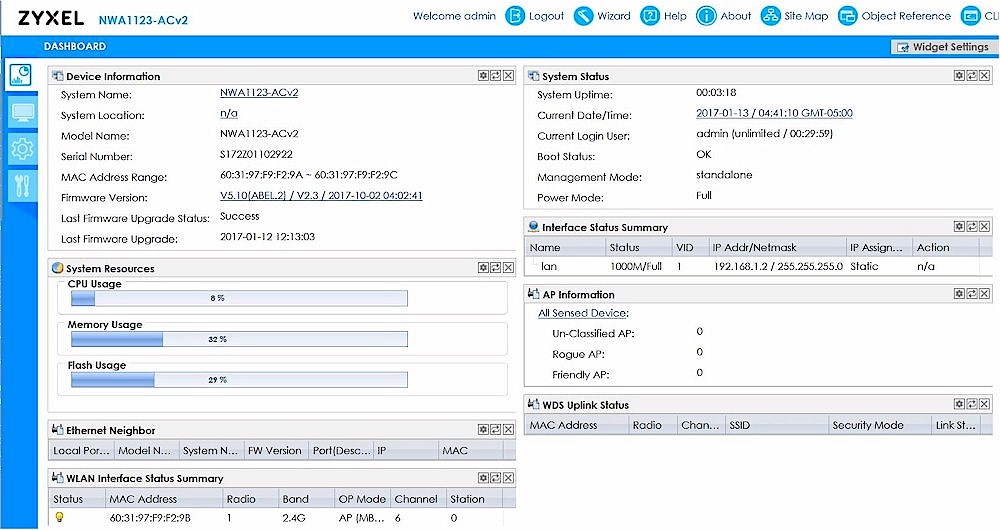

The NWA1123-AC will grab an IP address by DHCP from your existing LAN, or will statically configure itself to 192.168.1.2 if no DHCP server is available. You can connect to the NWA1123ac either over your LAN, or over the "Zyxel" setup SSID it presents in factory condition. The web UI for the NWA1123 was extremely slow. It spawned actual popup windows, which modern browsers will block automatically. Its help icon, when I tried it, took me to an external page. In a blocked pop-up. Which 404’ed, when I manually unblocked the pop-up.

I wanted that help because the setup wizard failed on the second AP I tried, and it took me a good half an hour puzzling my way through the regular interface just to configure an SSID. It’s not under "wireless"… it’s under "objects", and in several pieces. First you have to go to the SSIDs sub-tab under "objects", and configure those. Then go to the Security sub-tab of "objects", scroll down far enough to find the PSK section, and set up password and auth profiles. Then you can go into the actual "wireless" section of the interface, into APs beneath there, and apply both the SSID profiles and Security profiles to each radio individually. (For bonus points, the radios are labeled "Radio 1" and "Radio 2" with no hint whatsoever as to which is 2.4 GHz and which is 5 GHz.)

Zyxel NWA1123-ACv2 web GUI

I was not impressed with the NWA1123-AC setup experience.

The NWA1123-AC did a reasonably good job on 2.4 GHz. Its station curves are pretty tightly grouped with a predictable slope through the 80th percentile on all stations, and through the 90th percentile on all stations but the one behind four interior walls. Unfortunately, things go pretty badly haywire after that: the NWA1123-AC failed several page loads entirely on 2.4 GHz, resulting in throughput under 7 Mbps (of the targeted 8 Mbps) and a sharp knee off the chart at the 95th percentile on all stations. A user on this network would absolutely be clicking "reload" in their browser every now and then.

Zyxel NWA1123-AC’s 2.4 GHz application latency curves

The NWA1123-AC did quite well on 5 GHz. Although it’s still plagued with a failure to load a few pages on stations C and D, its curves are predictable and tightly grouped all the way to the 95th percentile here, with slightly faster returns than even the top-ranked TP-Link EAP225 v2. Despite the slightly lower (faster) returns across most of the X axis, I would still give the EAP the nod on this test, since it did not take more than 3000ms to load any pages.

Zyxel NWA1123-AC’s 5 GHz application latency curves

While my defective NWA1123-AC struggled with single-client throughput results, the second, properly functioning AP turned in outstanding numbers. These are the best single-client 2.4 GHz throughput numbers of the round-up, with 5 GHz throughput coming in third, just behind the OpenMesh A60.

Zyxel NWA1123-AC’s single-client throughput

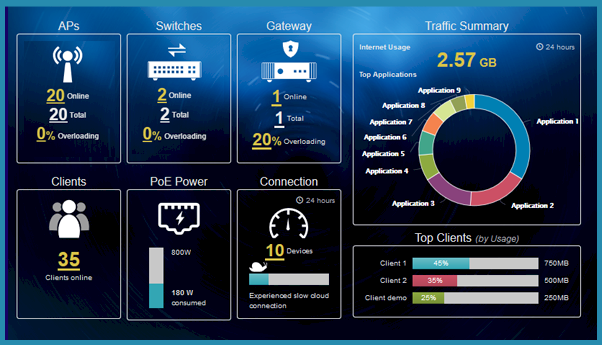

Zyxel offers free cloud management of these access points via their Nebula platform, at nebula.zyxel.com. The initial setup is a little clunky (it may take some confused poking before you figure out how to create your first "organization" and then a "site" within that organization), but once set up, Nebula is pretty attractive and easy to navigate, and offers plenty of information and graphs, along with event logs showing when client devices join and drop off the network.

Zyxel Nebula

In addition to management of access points—and Zyxel switches and gateways, if you have any—and logs of client device events, Nebula can serve as the back-end for WPA-Enterprise authentication. This is a pretty nice feature for SMBs or homes that would like to have individual Wi-Fi logins per user, but don’t want to deal with the significant hassle of running their own RADIUS server. Nebula is free for any number of sites and devices, and up to 200 WPA-Enterprise users. If you want to manage more user accounts than that, you’ll need to upgrade to and pay for a Pro subscription (which I didn’t price). Pro also offers additional log storage; the free version stores events and graphs for the past seven days only.

The NWA1123-AC does not support 802.11k, v, or r, but does offer RSSI-based roaming assistance. Initially, all devices and interfaces connected on 5 GHz when joining the dual-band SSID on the NWA1123-AC access points. STA D roamed freely to AP2 after about ten seconds standing behind it, but refused to switch to 2.4 GHz downstairs in the far corner even after several minutes, despite a -85 dBm signal strength there.

It did change to the 2.4 GHz band about 350ms into an iperf3 run, but switched right back to 5 GHz about 15 seconds after the run completed. At this farthest location, 2.4 GHz and 5 GHz both produced shaky 9-18 Mbps iperf3 runs, both TX and RX. It eventually shifted back to 2.4 GHz on AP2 as I played with iperf3 in the downstairs far corner, and roamed back to AP1 on 5 GHz as I set it on the test stand near AP1. There was no doubt that the Zyxel APs were assisting roaming; the Intel AC 7265 is not that prompt to roam on its own. While the AP steering was prompt, the band steering—if any—left much to be desired.

Performance Summary

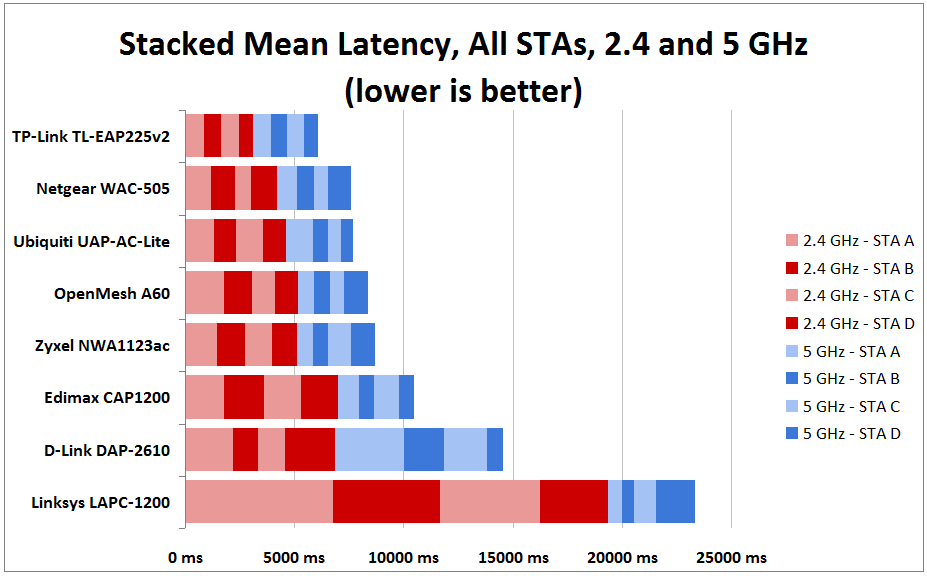

It can get a little overwhelming trying to figure out how the access points relate to one another performance-wise looking at the full dataset, so it’s handy to also have a simpler device ranking, based on the mean application latency.

While a device’s performance on its 2.4 GHz radio isn’t necessarily related to its performance on 5 GHz, many site admins will need to evaluate performance on both bands, both to accommodate legacy devices and to make maximum use of available spectrum. To that end, I like to use the stacked latency from both bands combined as an initial ranking of devices.
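The stacked ranking is easy to reproduce: add each device’s mean latency on 2.4 GHz to its mean on 5 GHz, then sort ascending. This minimal sketch assumes each band’s figure is already a single mean across the four stations. The device names and numbers are placeholders, not the measured results behind the charts:

```python
def rank_by_stacked_latency(mean_latency_ms):
    """Sort devices by the sum of their per-band mean latencies (lower is better).

    mean_latency_ms maps device name -> {"2.4 GHz": ms, "5 GHz": ms}.
    """
    stacked = {dev: sum(bands.values()) for dev, bands in mean_latency_ms.items()}
    return sorted(stacked.items(), key=lambda item: item[1])

# Hypothetical per-band means, in ms; NOT the values measured in this round-up.
example = {
    "AP-X": {"2.4 GHz": 900.0, "5 GHz": 600.0},
    "AP-Y": {"2.4 GHz": 700.0, "5 GHz": 750.0},
    "AP-Z": {"2.4 GHz": 1200.0, "5 GHz": 650.0},
}
for name, total in rank_by_stacked_latency(example):
    print(f"{name}: {total:.0f} ms")
# AP-Y: 1450 ms
# AP-X: 1500 ms
# AP-Z: 1850 ms
```

A plain sum weights both bands equally. A site that leans heavily on 5 GHz could weight that band more; the 5 GHz-only ranking further down is the extreme case of that weighting.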

True to expectations, TP-Link’s EAP-225 comes out clearly on top here, followed by NETGEAR’s WAC-505 and Ubiquiti’s UAP-AC-Lite, which are competing with one another neck-and-neck; OpenMesh’s A60 nips at both of their heels, and the rest of the pack falls significantly behind. Of course, what these performance graphs don’t show you is the management experience—which I found much better with the TP-Link and Ubiquiti locally-controlled access points than with NETGEAR’s or OpenMesh’s cloud-controlled schemes. Given the choice, I’d still pick the OpenMesh controller over NETGEAR’s—for both price and features.

Multi-client testing, stacked mean latency (both bands)

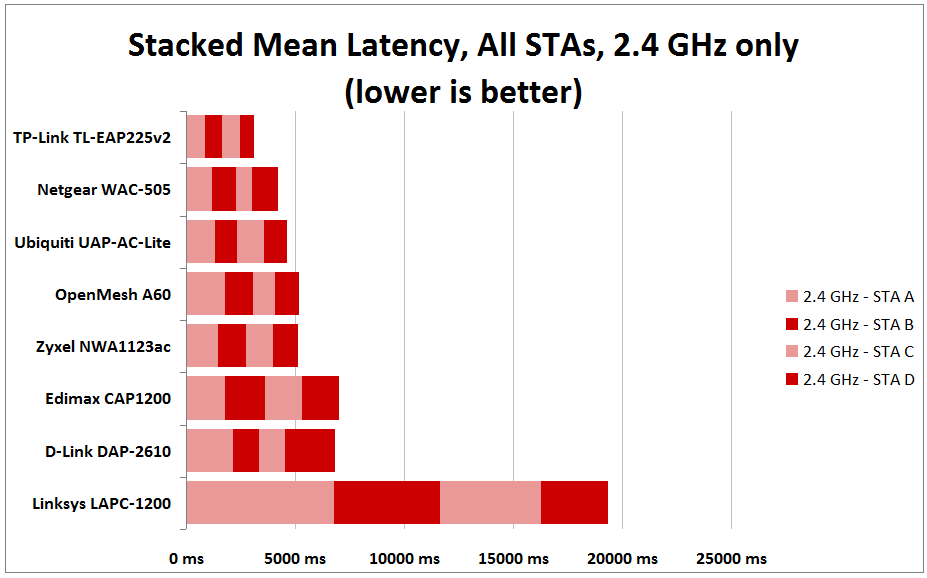

Eliminating the 5 GHz band and focusing solely on 2.4 GHz doesn’t change things significantly—the DAP-2610 now looks slightly better than the Edimax CAP1200, but not enough to write home about. The rest of the devices remain ranked as they were.

Multi-client testing, stacked mean latency (2.4 GHz only)

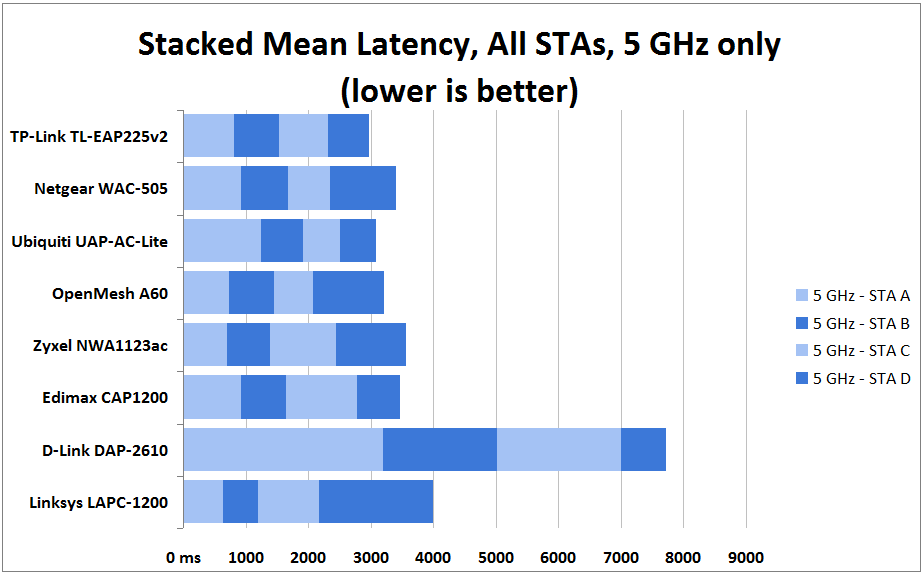

For most site admins, if there’s a single band to focus on, it’s not 2.4 GHz—it’s 5 GHz. Although things look a little closer with 2.4 GHz eliminated, TP-Link’s EAP-225 is still clearly on top, now followed by the Ubiquiti UAP-AC-Lite, then the OpenMesh A60, with Edimax’s CAP1200, NETGEAR’s WAC-505 and the retested Zyxel NWA1123-AC close behind. Linksys’s LAPC-1200 trails the WAC-505 and CAP1200 by about as much as they trail the top three, leaving D-Link’s DAP-2610 behind—far behind—in the weeds.

Multi-client testing, stacked mean latency (5 GHz only)

The table below brings all the roaming commentary into one place for easier comparison. As a rule of thumb, the less commentary a row needs, the better the roaming. On that basis, the NETGEAR WAC-505 and Edimax CAP1200 come out on top for fast, reliable roaming. However, since the Intel AC 7265 used for the roaming tests most likely supports at least 802.11k, your client devices may also need to support 11k to achieve similar results.

| Access point | Assistance (802.11k/r/v) | Start | D > B | B > Down | Down > B | B > D |
|---|---|---|---|---|---|---|
| D-Link DAP-2610 | None | AP1/5 GHz | AP2/5 GHz | Moved between AP1/2.4 GHz and AP2/2.4 GHz | [not recorded] | AP1/5 GHz |
| Edimax CAP1200 | k, r | AP1/5 GHz | AP2/5 GHz | AP2/2.4 GHz | AP2/5 GHz | AP1/2.4 GHz |
| Linksys LAPC-1200 | None | AP1/5 GHz | AP2/5 GHz | AP2/5 GHz, then not associated, then AP1/5 GHz, then not associated, then AP2/5 GHz. Moved to AP2/2.4 GHz about ten seconds after being manually reconnected | AP2/5 GHz after >30 sec of iperf | AP1/5 GHz after 10 sec |
| NETGEAR WAC-505 | k, r | AP1/5 GHz | AP2/5 GHz | AP2/2.4 GHz | AP2/5 GHz | AP1/5 GHz |
| Open Mesh A60 | r (not enabled) | AP1/5 GHz | Iffy, inconsistent roaming in all locations | | | |
| TP-Link EAP-225 v2 | None | AP1/5 GHz | No roam; stayed on AP1/5 GHz | AP2/5 GHz, then AP2/2.4 GHz a few seconds later | AP2/5 GHz | AP2/5 GHz |
| Ubiquiti UAP-AC-Lite | r, minimum RSSI setting | AP1/5 GHz | AP2/5 GHz after 15 seconds | AP2/2.4 GHz during iperf3 run | AP2/5 GHz | AP2/5 GHz |
| Zyxel NWA1123-ACv2 | Proprietary RSSI-based | AP1/5 GHz | AP2/5 GHz | AP2/2.4 GHz during iperf3 run; switched right back to 5 GHz ~15 seconds after the run completed | [not recorded] | AP1/5 GHz |

Summary of roaming behavior

Note that the information in the Assistance column above came from asking the vendors. Many of the APs have “minimum RSSI” settings that refuse connections from devices whose signal strength is below the setting, or deauthenticate (disconnect) associated devices when their RSSI falls below that level and the device’s own roaming logic hasn’t yet told it to move to a better AP. Because a minimum RSSI setting can push a stubborn client off a distant AP on its own, lack of 802.11k/v/r support shouldn’t automatically disqualify an AP from consideration. But as the results also show, it sure seems to help devices that support it.
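To make the minimum RSSI behavior concrete, here is a minimal sketch of the AP-side decision, assuming a single fixed threshold applied to the latest signal reading. The -75 dBm default and the `MinRssiPolicy` and `apply_policy` names are hypothetical, not any vendor’s setting or API; real implementations may differ (for example, by averaging several readings before acting).

```python
from dataclasses import dataclass


@dataclass
class MinRssiPolicy:
    # Hypothetical threshold in dBm; vendors name and default this differently.
    min_rssi_dbm: int = -75

    def allow_association(self, rssi_dbm: int) -> bool:
        """Refuse a new client whose signal is below the threshold."""
        return rssi_dbm >= self.min_rssi_dbm

    def should_deauthenticate(self, rssi_dbm: int) -> bool:
        """Disconnect an associated client once its signal drops below the threshold."""
        return rssi_dbm < self.min_rssi_dbm


def apply_policy(policy: MinRssiPolicy, clients: dict[str, int]) -> list[str]:
    """Return, sorted, the MACs of associated clients the AP would disconnect.

    `clients` maps a client MAC address to its latest RSSI reading in dBm.
    """
    return sorted(mac for mac, rssi in clients.items() if policy.should_deauthenticate(rssi))


if __name__ == "__main__":
    policy = MinRssiPolicy(min_rssi_dbm=-75)
    readings = {"aa:bb:cc:00:00:01": -62, "aa:bb:cc:00:00:02": -81}
    print(policy.allow_association(-80))   # False
    print(apply_policy(policy, readings))  # ['aa:bb:cc:00:00:02']
```

Note that the AP only forces the disconnect; the client still has to find a better AP on its own, which is why this is a blunter tool than the neighbor and transition hints that 802.11k/v provide.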

Closing Thoughts

I don’t think I can really pick a single winner from this round-up, because absolute top performance isn’t everything—different situations, sites, and applications call for different features. But it’s really easy to pick a top three, which are clearly TP-Link’s EAP-225, Ubiquiti’s UAP-AC-Lite, and OpenMesh’s A40/A60 line.

Ubiquiti’s UAP line has been the gold standard for inexpensive access points for a while, but it clearly has some real competition in the space now. While the UAPs still offer a great set of features and solid performance for the price, they’re no longer the absolute performance kings, and they could be a lot friendlier to homes and microbusinesses.

Their free-to-download UniFi controller is awesome, and I love that it’s fully cross-platform, available for Windows, Linux, or macOS. But you need a server to run it on, and a solid backup plan for the controller configuration. The Cloud Key isn’t a good replacement because it’s slow, balky, and severely prone to corruption after power outages. And without a controller of some kind, the UAPs are nearly worthless, crippled by a mobile-only management interface that hides nearly all of their functionality.

In many ways, TP-Link’s EAP-225 has displaced the UAP-AC-Lite as the gold standard in inexpensive access points: it costs less, performs better, and offers almost all of the same features. Better yet, while TP-Link also offers a great, free-to-download, fully cross-platform controller, small sites don’t absolutely need it. The EAP-225s have a fully functional, easy-to-use web interface that can be managed from any browser.

For upmarket applications, the EAP-225 falls down in two places: its downward-facing cable placement, and its lack of automatic firmware upgrades. The downward-facing cables mean an installation can’t be made completely free of visible cables. This may be a plus in some more chaotic small business environments, but visually it’s a minus for locked-down environments, whether locked down in security or just in aesthetics. Meanwhile, the need to manually check for and apply firmware should give pause to integrators who aren’t frequently on site. What won’t bother a net admin with only one network to worry about is a big hassle for somebody with 50+.

Challenging the UAP-AC-Lite from the other direction, OpenMesh’s A40/A60 line offers a highly tailored, retail-friendly experience that’s hard to beat for the right application. While I don’t generally like cloud dependency, the ability to manage one site or a hundred from a completely cloud-based infrastructure that requires no management and no extra expense is going to appeal strongly to a lot of people. In particular, having all of that advanced management capability without needing any local infrastructure is a big benefit for a lot of customers and integrators—and the extra network port makes controlled, protected power-over-Ethernet a lot easier to deploy in sites that don’t have separate AP-only cable runs.
