Introduction

In my last article, I discussed various technologies from companies including VMware, Virtual Iron, Citrix, and Microsoft. These software solutions enable virtualization, the technology that allows physical computing resources such as CPUs, memory, storage, and networks to be shared among multiple servers. Virtualization maximizes the utilization of computing investment, saves energy costs and data center floor space, and provides compelling technology options for both large and small networks.

In that previous discussion, I stepped through the creation of a virtual Host machine, which is a single physical computer that can run multiple servers simultaneously. I used a Windows XP Pro machine as my Host, and then created two virtual machines: one running Linux, the other running an open source NAS appliance. I used VMware’s free VMware Server as my Host virtualization platform, Ubuntu for my virtual Linux machine, and the BSD-based FreeNAS distribution for my virtual NAS appliance.

In this article, I’m going to examine some of the network performance aspects of virtualization as well as the underlying operations that enable a virtual machine to interact with a network. I’ll also go into some configuration options that can be used to optimize network performance for virtualization.

The "Virtual Layer"

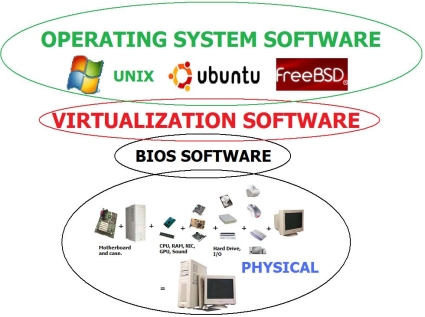

A key to understanding virtualization is the idea of layering. At the physical layer of any computer is the hardware, which includes the motherboard, CPU(s), RAM, hard drive(s), NIC(s) and various other physical components. As depicted in Figure 1, the physical computer hardware sits at the bottom layer, with multiple layers of software implemented to enable the desired computing functionality.

Figure 1: Multiple layers are involved in virtualization

Software is required to operate the physical layer. Within each motherboard is the BIOS, or Basic Input/Output System. The BIOS is the first software layer that controls the physical elements of a computer.

Traditionally, the second software layer is the operating system, such as Windows, UNIX, Linux, BSD, and so forth. In a typical computer, the operating system sits between applications and the BIOS, providing drivers and controlling the input and output of data to all the physical components of the computer. The limitation of this two-software-layer scenario is that only one operating system can use the physical components of the computer.

Between the first and second software layers is where virtualization plays. Virtualization takes over the control of all the hardware between the BIOS and the Guest operating systems, providing a consistent set of drivers to the virtual machines, regardless of the underlying hardware.

Virtualization can be achieved in multiple scenarios. In this article and the previous one, we’re using Windows XP Pro as the Host operating system residing in the layer above the BIOS. We’re running VMware Server software to provide a virtualization layer for Guest operating systems. This essentially creates four layers of software above the hardware: the BIOS, the Host operating system, the virtualization software, and the Guest operating systems.

Alternatively, virtualization can be achieved without a Host operating system using software known as a Hypervisor that resides right above the BIOS. This three-layer model is more efficient, as the Hypervisor is a very thin piece of software residing between the BIOS and the Guest operating systems. Hypervisor software such as VMware’s subscription-based ESX3.1 is only 32MB in size and comes preloaded in the firmware of servers from various companies, including Dell, Fujitsu Siemens, HP, IBM, and NEC.

In either virtualization scenario, Guest operating systems do not directly interact with the BIOS. Guest operating systems, or virtual machines, interact with the virtualization layer, which then controls the hardware for the Guest OS.

The point is that virtualization adds one to two software layers between the operating system and hardware, which brings us to the question of performance. Can virtual machines perform as well over networks as physical machines, considering the processing overhead of the added layers of software?

Virtual vs. Physical NAS

To explore this question, I used the virtualized FreeNAS server I set up in my last review and compared its performance to a physical FreeNAS server running on dedicated hardware. My virtualized FreeNAS server runs as a Guest OS allocated 512MB of RAM inside VMware Server on an XP Pro Host with an Intel 3.2GHz P4 CPU on an Intel chipset motherboard.

My physical FreeNAS server also has 512MB of RAM and runs on an AMD 3000+ CPU with an Nvidia chipset motherboard. Both platforms are x86-based systems with similar features and capabilities.

For my tests, both NAS machines were given 20 GB disks for storage and 100 Mbps NICs. The virtualized FreeNAS used a "bridged" network interface as outlined in my last article, meaning the virtualized FreeNAS was sharing the Host machine’s integrated physical NIC. The physical FreeNAS server used the integrated NIC on the Nvidia motherboard. Testing was performed using Iozone software with our standard methodology.
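The exact Iozone options behind the methodology aren’t listed here, so the following is only a sketch of how a comparable write and read run might be assembled. The helper name `build_iozone_command`, the test file path, the report name, and the file-size range are all assumptions for illustration. The flags themselves (`-a`, `-i`, `-n`, `-g`, `-f`, `-R`, `-b`) are standard Iozone options.

```python
from typing import List


def build_iozone_command(test_file: str,
                         min_size: str = "32m",
                         max_size: str = "1g",
                         report: str = "nas_results.xls") -> List[str]:
    """Assemble an Iozone command line for a write + read sweep.

    The size range and file names are illustrative assumptions,
    not the settings used for the graphs in this article.
    """
    return [
        "iozone",
        "-a",              # automatic mode: sweep file and record sizes
        "-i", "0",         # test 0: write / rewrite
        "-i", "1",         # test 1: read / reread
        "-n", min_size,    # smallest file size in the sweep
        "-g", max_size,    # largest file size in the sweep
        "-f", test_file,   # temporary test file, placed on the NAS share
        "-R",              # produce an Excel-compatible report
        "-b", report,      # write that report to this file
    ]


if __name__ == "__main__":
    # Z: is a hypothetical drive letter mapped to the NAS share.
    print(" ".join(build_iozone_command("Z:\\iozone.tmp")))
```

Pointing the test file at a mapped network share is what makes this a NAS test rather than a local disk test, so the same command can be run against the virtual and the physical server in turn.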

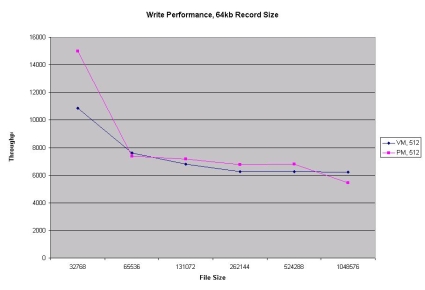

Figures 2 and 3 show the Write and Read throughput to the virtual and physical NAS devices, using a Windows Vista laptop running Iozone. (Throughput is in KBytes per second and file sizes are in KBytes for all graphs.)

Figure 2: Write throughput to the virtual and physical NAS devices

Figure 2 shows the physical FreeNAS system outperforming the virtual machine’s throughput on 32 MB file writes, with the remaining write performance very similar. The decline in throughput as file size increases is typical of what we usually see with Iozone tests and is due to write caching effects. It’s interesting that the effect is more pronounced in the physical machine than in the virtual.
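One way to spot caching in results like these is to compare a reading against what a 100 Mbps link can physically carry. The sketch below is a rough check only: it assumes Iozone’s KBytes are 1024 bytes, it ignores protocol overhead, and the function names are mine rather than anything from Iozone.

```python
LINK_MBPS = 100  # both NAS machines used 100 Mbps NICs


def link_ceiling_kbytes_per_sec(link_mbps: float = LINK_MBPS) -> float:
    """Raw capacity of the link in KBytes/s (1 KByte = 1024 bytes)."""
    bits_per_sec = link_mbps * 1_000_000
    return bits_per_sec / 8 / 1024


def exceeds_wire_speed(throughput_kbytes_per_sec: float,
                       link_mbps: float = LINK_MBPS) -> bool:
    """True if a reading is higher than the link itself could deliver."""
    return throughput_kbytes_per_sec > link_ceiling_kbytes_per_sec(link_mbps)


if __name__ == "__main__":
    print(f"100 Mbps ceiling: {link_ceiling_kbytes_per_sec():.0f} KBytes/s")
```

A 100 Mbps link tops out at about 12,207 KBytes per second, so any reading above that cannot have crossed the wire at that rate and most likely reflects caching rather than actual NAS throughput.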

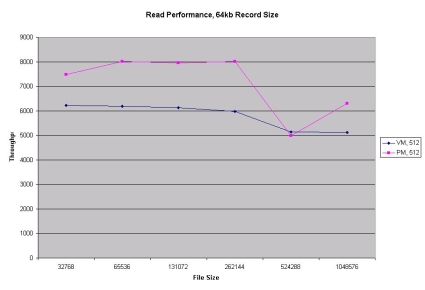

It is a different story for read performance, as shown in Figure 3, with the physical FreeNAS system clearly outperforming the virtual machine at nearly all file sizes. (The big downward drop in throughput at 512 MB for the Physical NAS is a measurement glitch. The correct value would result in a line with slope similar to the Virtual NAS.)

Figure 3: Read throughput to the virtual and physical NAS devices

Virtualization and Memory Considerations

The concept of virtualization is like carpooling. Most of our cars run each day with only one passenger, leaving a lot of excess passenger capacity. The same thing applies to servers. Servers running only one operating system and application typically have a lot of excess computing capacity. Virtualization utilizes excess computing capacity by enabling multiple operating systems and applications to run simultaneously on the same physical server.

Along the lines of the carpooling analogy, cars with more interior space, more seats, and bigger engines are better for carrying multiple passengers. Similarly, servers with more CPUs, RAM, NICs, and disk space are better for virtualization and running multiple virtual machines.

One of the neat things about virtualization is that by converting physical machines to virtual machines, hardware components from physical machines such as RAM, NICs, and disk space may be consolidated to create fewer, yet more powerful, physical computers better equipped to run multiple virtual machines. These more robust physical machines still consume significantly fewer physical resources, such as electricity, cooling, network ports, and floor space, while providing sufficient computing resources to run multiple operating systems and applications.

For many server applications, virtualization performance can benefit from additional RAM in the physical host machine. In the case of a NAS, RAM isn’t as big a concern as with, say, a database server, so I tried running the virtual NAS with only 256MB to see how much the performance would suffer.


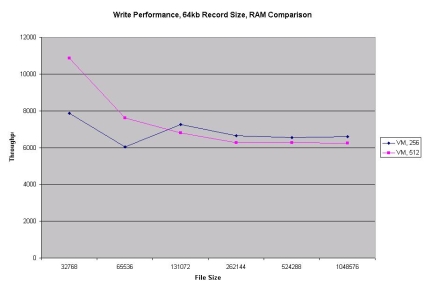

Figures 4 and 5 show Write and Read performance when the virtual machine has only 256MB of RAM as opposed to the virtual machine having 512MB of RAM as used in the head-to-head comparison with the physical machine.

In Figure 4, which shows Write performance, the virtual machine with 512MB RAM clearly outperformed the virtual machine with 256MB RAM at smaller file sizes, with nearly identical performance as file sizes increased. Again, this effect is primarily due to write caching, so with less memory allocated, the cache performance boost declines sooner, resulting in lower performance for the 32 MB file write.

Figure 4: Write performance of 256MB RAM virtual machine vs. 512MB RAM virtual machine

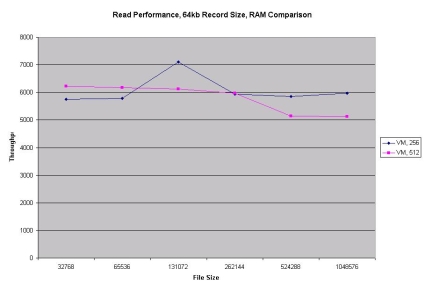

Read performance in Figure 5 between the 256MB virtual NAS and 512MB virtual NAS shows a tradeoff: the larger-memory virtual device performs better at smaller file sizes and worse at larger file sizes. (The 128 MB data point for the 256MB plot line is another measurement glitch. The real performance is more in line with the other data points.)

Figure 5: Read performance of 256MB RAM virtual machine vs. 512MB RAM virtual machine

Virtualization and Network Interface Considerations

Network performance is a key aspect of optimizing server functionality. For a server to provide value, it must provide high throughput to clients, data stores, and other servers over a high-speed network. In addition to adding RAM to a Host physical server, there are several other options for optimizing the performance of virtual machines.

Understanding that Guest operating systems interact with the computing hardware through the virtualization layer explains how optimized virtual drivers can improve the performance of a virtual machine. As discussed in my last article, VMware has packages of high performance drivers, called "VMware Tools," for Windows, Linux, FreeBSD, and NetWare Guest operating systems that improve the performance of network interfaces and disk I/O operations.

In addition to installing VMware Tools, performance may be

enhanced on a high network traffic server by installing additional physical

NICs on the Host machine. These additional NICs can then be dedicated to

specific virtual machines. I tested this functionality by adding another NIC to

my Host server, and configured the network settings to map each physical NIC to

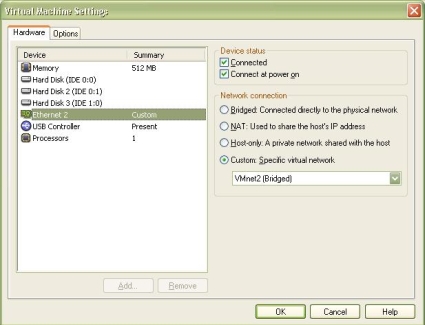

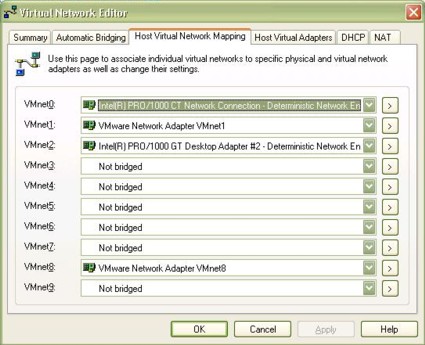

different virtual switches within VMware Server’s configurations, as shown in Figure 6.

Figure 6: Virtual network settings in VMware


The figure shows that the onboard NIC on my server is

VMnet0, and the additional NIC is VMnet2. I then uninstalled the virtual NIC

from my virtual FreeNAS machine and installed a new virtual NIC mapped to VMnet2

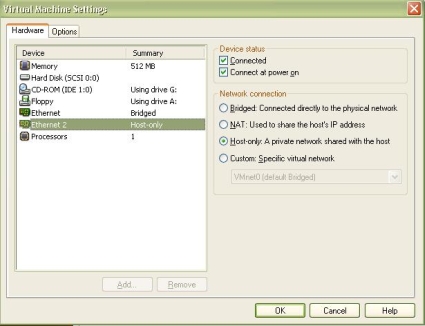

as per Figure 7. Notice the configuration requires selecting the custom option to designate the new interface.

Figure 7: Virtual NIC configuration in VMware

I didn’t expect to see any performance difference over the dedicated NIC in my case, since my test server wasn’t heavily loaded with network traffic. Indeed, reruns of Iozone tests on the virtual NAS with the dedicated NIC revealed performance similar to the shared NIC. In a high-powered server with heavy network traffic, however, network capacity can be increased by installing additional network interface cards on the host server and allocating them to the appropriate virtual machines.

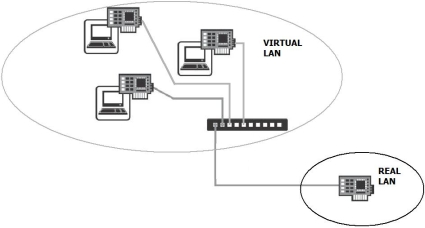

Virtual Networks

An interesting virtualization option to improve overall network performance is to put virtual server communication on a dedicated virtual network. Virtualized servers can run multiple NICs connected to both the physical world and the virtual world.

On a server running multiple virtual machines, each machine can be given a virtual interface connected to a virtual switch. These virtual interfaces can be given IP addresses statically or through the creation of a virtual DHCP server, creating a dedicated virtual network to pass real data between virtual servers.

Windows-based VMware Host machines can create ten virtual switches for networking virtual machines either to physical interfaces or for internal network communications, while Linux-based VMware Host machines can create up to 200 virtual switches. In either case, creating a virtual switch to connect the virtual machines provides one or more separate, dedicated networks between the servers, reducing traffic and potential congestion on the physical network.

To test this functionality, I added a second virtual NIC to my Ubuntu Linux server, using the Hardware Add tab in the VMware virtual machines menu, as shown in Figure 8. This NIC was configured in VMware as Host-only, meaning it does not have access to the physical network. Since Linux can support multiple network interfaces, and my Linux machine already has a working connection to the physical LAN, this second connection can be used for the internal virtual LAN.

Figure 8: Adding a NIC from the VMware Hardware Add tab

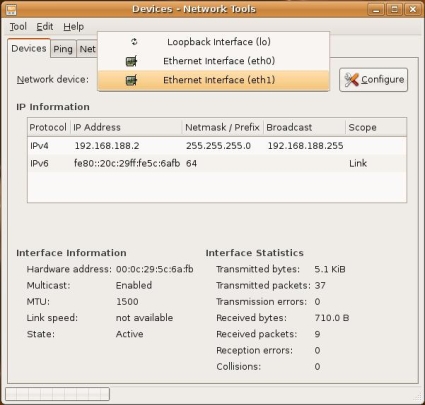

Figure 9 is a screenshot from Ubuntu’s network configuration page showing the presence of two network interfaces. The first Ethernet interface (eth0) is bridged to the physical network. The second Ethernet interface (eth1) is connected to the Host-only, or virtual, network. I’ve set up this second network interface with a static IP on the 192.168.188.0/24 LAN, which is the pre-assigned subnet for the VMware Host-only LAN. This can be changed, but since I wasn’t using this subnet elsewhere, there was no need to adjust it.

Figure 9: Network configuration page in Ubuntu

With my Host server on 192.168.188.1 and my virtual Ubuntu Linux server on 192.168.188.2, both machines are now connected to a virtual network and to a physical network. Additional virtual machines on the same physical server can be connected to this virtual network.
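When adding more virtual machines with static addresses, it helps to confirm that each address really falls inside the Host-only subnet and doesn’t collide with one already in use. The sketch below uses Python’s standard `ipaddress` module with the addresses from my setup. The function names are illustrative, and it doesn’t account for any range a virtual DHCP server might be handing out.

```python
import ipaddress

# Host-only subnet and the two addresses already assigned in this setup.
HOST_ONLY_NET = ipaddress.ip_network("192.168.188.0/24")
IN_USE = {
    ipaddress.ip_address("192.168.188.1"),  # Host server
    ipaddress.ip_address("192.168.188.2"),  # virtual Ubuntu server (eth1)
}


def on_host_only_lan(addr: str) -> bool:
    """True if addr belongs to the Host-only subnet."""
    return ipaddress.ip_address(addr) in HOST_ONLY_NET


def next_free_address(in_use=IN_USE):
    """First usable host address on the subnet that isn't taken yet."""
    for host in HOST_ONLY_NET.hosts():
        if host not in in_use:
            return host
    return None  # subnet is full


if __name__ == "__main__":
    print(on_host_only_lan("192.168.188.2"))
    print(next_free_address())
```

An address that fails the first check would put the new machine on a different subnet, where it could not reach the other virtual machines over the virtual switch without routing.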

Creating a virtual network like this is one of the cooler aspects of virtualization. This separate virtual network has none of the negatives of the physical network, such as physical points of failure and resource costs, yet provides all the necessary functionality.

The end result of using a virtual LAN to connect the virtual machines is a reduction in network traffic on the physical network. In an environment with large amounts of traffic between servers, creating virtual LANs can be a useful option for managing and optimizing the network.

Conclusion

While researching options for this article, I ran across some studies indicating that virtual machines can suffer significant issues both in processing and network performance. One study stated that "performance degradation by a factor of 2 to 3x for receive workloads, and a factor of 5x degradation for transmit workloads" could occur in some virtual servers.

Although this extreme level of degradation wasn’t my experience, my virtual FreeNAS appliance did not perform as well as my physical FreeNAS appliance, most glaringly with Read performance (Figure 3) running 15-20% slower on the virtual machine, even with significant amounts of RAM allocated to the virtual machine.
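To put "15-20% slower" on the same scale as the study’s "factor of 2 to 3x" figures, the percentage can be converted into a slowdown factor. This is a small arithmetic sketch; the function names are mine, and any throughput values passed to `percent_slower` would be read off the graphs rather than taken from this code.

```python
def percent_slower(physical_kbytes: float, virtual_kbytes: float) -> float:
    """How much slower the virtual machine is, as a percentage of physical."""
    return 100.0 * (physical_kbytes - virtual_kbytes) / physical_kbytes


def slowdown_factor(pct_slower: float) -> float:
    """Convert 'N% slower' into an 'Nx degradation' factor."""
    return 1.0 / (1.0 - pct_slower / 100.0)


if __name__ == "__main__":
    for pct in (15, 20):
        print(f"{pct}% slower is a factor of about {slowdown_factor(pct):.2f}x")
```

By this measure, 15-20% slower works out to a factor of roughly 1.18x to 1.25x, well short of the 2x to 5x degradation the study describes.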

Keep in mind that the virtualization I’ve created is through the free VMware Server software, which enables virtualization, but at the expense of adding two software layers to the normal BIOS and OS equation. I would expect that using VMware’s license-based Hypervisor would close, if not eliminate, the performance gaps experienced using VMware Server. The fact that there is some performance degradation is understandable, considering the virtual machine must go through additional processing to gain access to the physical layer.

Further, I ran my tests using different hardware platforms, with a 3.2 GHz CPU (Intel Prescott 3.2E) on my virtualization platform, as opposed to a 1.8 GHz CPU (AMD 3000+) on my physical platform. If anything, the virtualization platform should have had an edge in processing capability.

Nevertheless, free virtualization software merits strong consideration, despite its performance disadvantage. The reduction in power consumption, noise and heat generation, and space consumed by multiple physical boxes is certainly a positive outcome of virtualization. Further, the flexibility of creating multiple networks to support both virtual and physical traffic is a useful option for managing traffic flows and network capacity.

Overall, even with the slower performance of the virtual machine, I’m going to dismantle my physical FreeNAS appliance and re-install its RAM and hard drive in my Host server, free up the shelf space, and minimize the amount of cash I have to send to my electric company.