Introduction

Why is this man smiling?

It’s been vewy, vewy quiet around ol’ SmallNetBuilder since my last post. Since then, I’ve been focused on getting the Broadband Forum’s TR-398 test suite up and running on a new octoScope test platform. This work will soon be available as a turnkey product from octoScope.

TR-398 was unveiled in February at Mobile World Congress 2019 as the industry’s first Wi-Fi performance test standard. While it’s not as comprehensive as some might want, it’s a good start toward providing a set of benchmarks that can be used to compare product performance.

What Is The Broadband Forum?

The Broadband Forum (BBF) is a non-profit corporation organized to create guidelines for broadband network system development and deployment. They are perhaps best known for their TR-069 CPE WAN Management Protocol that is used by service providers to remotely manage broadband modems.

What Is TR-398?

Quoting from the TR-398 Wi-Fi In-Premises Performance Testing standard:

The primary goal of TR-398 is to provide a set of test cases and framework to verify the performance between Access Point (AP) (e.g., a CPE with Wi-Fi) and one or more Station (STA) (e.g., Personal Computer [PC], integrated testing equipment, etc.).

This is the first time an industry group has attempted to establish Wi-Fi performance test benchmarks. You might think the Wi-Fi Alliance had already done this long ago. But the WFA’s focus is on functional/interoperability certification, which they strongly encourage their members to obtain so that they can display a Wi-Fi Certification logo.

You need to be a WFA member to access their test plans. Membership ain’t cheap, so SmallNetBuilder is not a WFA member.

The BBF has no certification program for TR-398, so there are no logos to earn or display. You don’t need to be a BBF member to access their standards (aka Technical Reports / TRs), as evidenced by the links above.

The TR-398 suite is organized into eleven tests covering five performance aspects:

- RF capability
  - Receive sensitivity
- Baseline performance
  - Maximum connection
  - Maximum throughput
  - Airtime Fairness
- Coverage
  - Range vs. Rate
  - Spatial consistency
- Multiple STA performance
  - Multiple STA performance
  - Multiple Association/Disassociation Stability
  - Downlink MU-MIMO performance
- Stability/Robustness
  - Long Term Stability
  - AP Coexistence

In general, the TR-398 suite uses two-stream devices as the test stations (STAs). This makes sense, since that’s the configuration of most mobile Wi-Fi devices today. It also mandates that the router/AP device under test (DUT) be set to channel 6 @ 20 MHz channel bandwidth in 2.4 GHz and channel 36 @ 80 MHz channel bandwidth in 5 GHz. 2.4 GHz band tests are done with DUT and STA configured for 802.11n; 5 GHz band tests use 802.11ac.
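Test scripts need these band settings in one place. A minimal sketch, assuming a hypothetical `BandConfig` record (it is not an octoBox or TR-398 data structure), that captures the DUT/STA settings described above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BandConfig:
    """Radio settings TR-398 requires for one band (hypothetical structure)."""
    channel: int        # DUT operating channel
    bandwidth_mhz: int  # DUT channel bandwidth
    phy_mode: str       # mode used by both DUT and STAs
    sta_streams: int    # spatial streams per test STA


# Values taken from the TR-398 requirements summarized in the text.
TR398_BANDS = {
    "2.4GHz": BandConfig(channel=6, bandwidth_mhz=20, phy_mode="802.11n", sta_streams=2),
    "5GHz": BandConfig(channel=36, bandwidth_mhz=80, phy_mode="802.11ac", sta_streams=2),
}

for band, cfg in TR398_BANDS.items():
    print(f"{band}: channel {cfg.channel} @ {cfg.bandwidth_mhz} MHz, {cfg.phy_mode}, "
          f"{cfg.sta_streams}-stream STAs")
```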

Since you can download and read the standard, I’m not going to go into the details of each test. Instead, I’ll focus on some of the tests that address areas that the SmallNetBuilder Wi-Fi benchmarks have not tested and look at some test results.

The Testbed

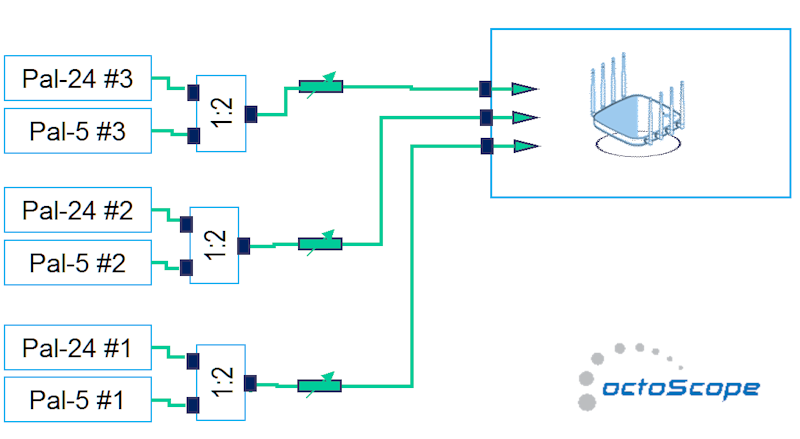

The TR-398 suite uses a new testbed configuration shown below. Although originally designed to support TR-398, this configuration can also support roaming, band steering and other tests not included in the spec.

octoScope TR-398 testbed

The testbed uses octoScope’s Pal partner devices that can function as a station (STA), virtual stations (vSTAs), access point (AP), traffic generator, load generator, sniffer and an expert monitor. The Pal-24 supports up to four-stream 802.11b/g/n operation in 2.4 GHz and the Pal-5 supports up to four-stream 802.11a/n/ac. There will also be a version of this testbed that uses octoScope’s Pal-6 smartBox subsystem that supports 802.11a/b/g/n/ac/ax testing.

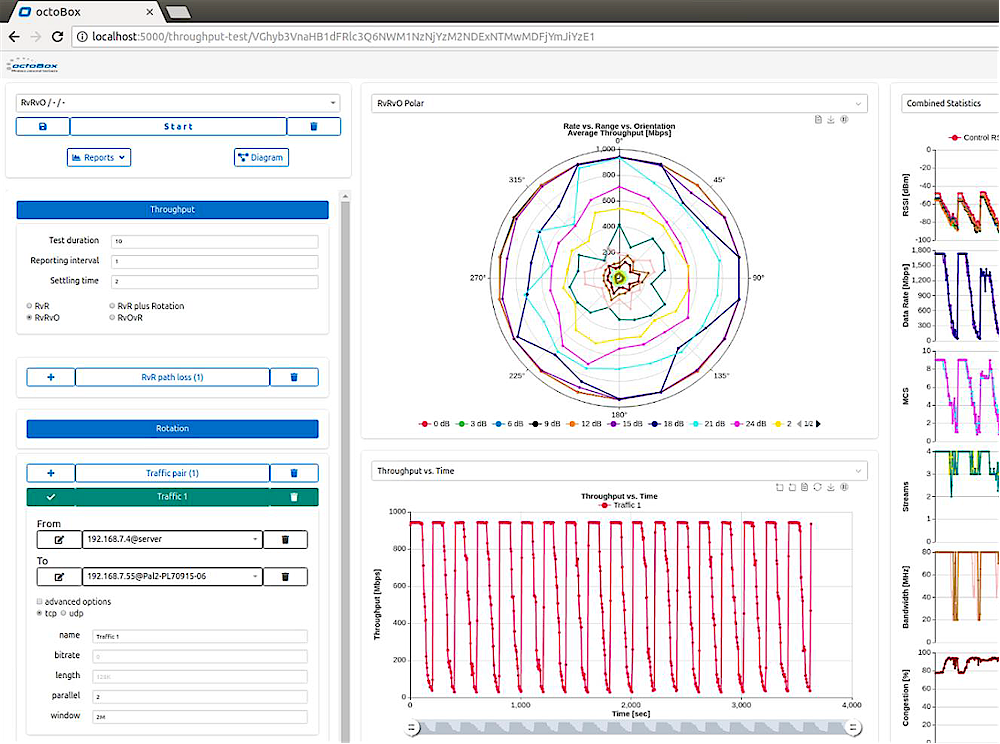

My implementation of the TR-398 suite uses octoScope’s octoBox software platform, automated via Python scripts. Test results are captured in CSV files and analyzed via Python scripts to test against limits specified in TR-398 and yield pass/fail results.

octoBox software screenshot
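The CSV-to-verdict step can be sketched in a few lines of Python. This is not the actual analysis script: the column names (`pair`, `throughput_mbps`, `loss_pct`), the sample rows and the limits passed in are all made up for illustration, and the real octoBox export format may differ.

```python
import csv
import io

# Made-up sample data in a hypothetical per-traffic-pair CSV layout.
SAMPLE_CSV = """pair,throughput_mbps,loss_pct
sta01,2.01,0.0
sta02,1.99,0.2
sta03,1.60,3.5
"""


def evaluate(rows, min_mbps, max_loss_pct):
    """Map each traffic pair to 'Pass' or 'Fail' against throughput and loss limits."""
    verdicts = {}
    for row in rows:
        ok = (float(row["throughput_mbps"]) >= min_mbps
              and float(row["loss_pct"]) < max_loss_pct)
        verdicts[row["pair"]] = "Pass" if ok else "Fail"
    return verdicts


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
print(evaluate(rows, min_mbps=1.98, max_loss_pct=1.0))
# {'sta01': 'Pass', 'sta02': 'Pass', 'sta03': 'Fail'}
```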

Traffic generation is done via a customized version of iperf3 that is controlled by the octoBox software and can be run in a multipoint configuration.
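The customized iperf3 and its octoBox control interface aren't shown here, but the multipoint idea maps onto stock iperf3: one server instance per port on the wired side of the DUT and one client per STA. The sketch below only builds command lines using standard iperf3 flags; the server address, port range and `build_clients` helper are hypothetical, and it assumes clients run on the STAs, so downlink traffic uses `-R` (reverse mode, server sends).

```python
def build_clients(server_ip, num_stas, rate_mbps, seconds, downlink, base_port=5201):
    """Return one stock-iperf3 UDP client command per STA.

    Each STA talks to its own server instance (started with `iperf3 -s -p PORT`),
    because a single iperf3 server handles only one test at a time.
    """
    cmds = []
    for i in range(num_stas):
        cmd = ["iperf3", "-c", server_ip, "-p", str(base_port + i),
               "-u", "-b", f"{rate_mbps}M",   # UDP at a fixed offered rate
               "-t", str(seconds), "-J"]      # test length; JSON results for parsing
        if downlink:
            cmd.append("-R")                  # reverse: server (wired side) transmits to STA
        cmds.append(cmd)
    return cmds


cmds = build_clients("192.168.1.10", num_stas=32, rate_mbps=2, seconds=120, downlink=True)
print(" ".join(cmds[0]))
# iperf3 -c 192.168.1.10 -p 5201 -u -b 2M -t 120 -J -R
```

Each command list could then be launched on its STA, for example with `subprocess.Popen`; binding each client to the right STA interface is left out.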

Now let’s look at a few of the tests and test results from three products:

- Linksys LAPAC1200 – two-stream AC1200 Wi-Fi 5 access point

- NETGEAR R7800 Nighthawk X4S – four-stream AC2600 Wi-Fi 5 router

- NETGEAR RAX80 Nighthawk AX8 – four-stream AX6000 Wi-Fi 6 router

Given the nature of these tests, I didn’t expect to see much difference between the two NETGEAR routers. Draft 11ax doesn’t really bring anything to the party over a four-stream 11ac router when used with 11ac STAs. But it will be interesting to see if these tests reveal any advantages of the more expensive four-stream routers over the two-stream Linksys.

Instead of the usual rate vs. range tests, I’m going to look at some of the TR-398 benchmarks that test things I haven’t tested in the past.

Maximum Connection

I’ve been on a quest to come up with test methods that can show how much of a Wi-Fi load a router, AP or Wi-Fi mesh system can handle. Since top-of-the-line routers now sell for $600, it would really be nice to show that those products deliver measurable value over less expensive alternatives. While that goal remains a work in progress, TR-398 has taken some steps in that direction.

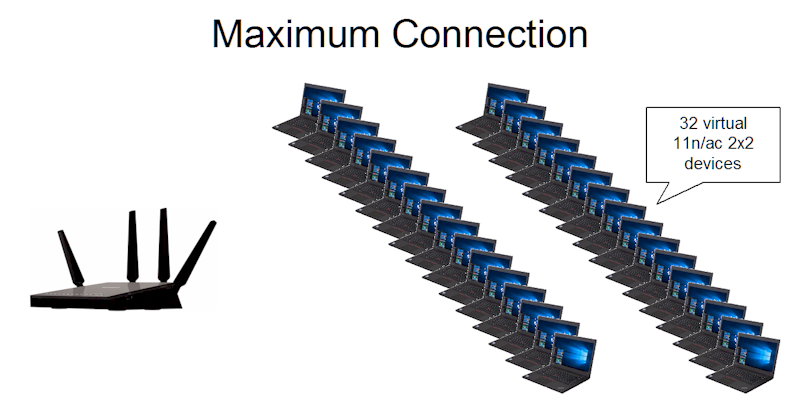

The Maximum Connection test (6.2.1) places a 32-device (STA) load on the router/AP under test (DUT), with each two-stream STA running a 2 Mbps UDP stream in the 2.4 GHz test or an 8 Mbps stream in the 5 GHz test, for two minutes. Each STA must have less than 1% packet loss, and total throughput for the 32 STAs must be at least 99% of the offered load: 64 Mbps × 99% (63.36 Mbps) for 2.4 GHz and 256 Mbps × 99% (253.44 Mbps) for 5 GHz. The test is run under best-case path-loss conditions (i.e. high signal level), in both downlink and uplink.

I use the octoScope Pal’s vSTA (virtual STA) capability for this test to produce the 32 STAs. This is easier than cramming 32 real devices into a box and much easier than controlling each device’s association/disassociation and reading back results from 32 iperf3 endpoints.

Maximum Connection Test
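The 6.2.1 pass criteria reduce to one throughput comparison and one per-STA loss check. A minimal sketch; the function and constant names are hypothetical, and the example input is the Linksys 2.4 GHz downlink result from Table 1.

```python
NUM_STAS = 32
PER_STA_MBPS = {"2.4GHz": 2, "5GHz": 8}  # offered UDP load per STA
MAX_LOSS_PCT = 1.0                        # each STA must lose less than 1%


def max_connection_verdict(band, total_mbps, per_sta_loss_pct):
    """Return (passed, throughput_limit) for one band and direction."""
    limit = NUM_STAS * PER_STA_MBPS[band] * 0.99  # 99% of 64 or 256 Mbps
    loss_ok = all(loss < MAX_LOSS_PCT for loss in per_sta_loss_pct)
    return loss_ok and total_mbps >= limit, limit


# Linksys LAPAC1200, 2.4 GHz downlink (no packet loss on any STA).
passed, limit = max_connection_verdict("2.4GHz", 82.5, [0.0] * NUM_STAS)
print(f"{'Pass' if passed else 'Fail'} (limit {limit:.2f} Mbps)")
# Pass (limit 63.36 Mbps)
```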

I’ve been using a similar approach in my load-testing quest, experimenting with different traffic rates and ramping the number of connections to find the break point. Based on those results, I’d say BBF has placed the bar relatively low for this test, at least for current-generation 11ac four-stream routers.

Table 1 shows the results from this test. All three products pass all tests. So as it’s currently designed, this test doesn’t look like it will be useful to assess router/AP capacity.

| | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|
| Maximum Connection Throughput (Mbps) | | | |
| 2.4 GHz Dn | Pass [82.5] | Pass [80.2] | Pass [82.2] |
| 2.4 GHz Up | Pass [80.5] | Pass [82.2] | Pass [80.5] |
| 5 GHz Dn | Pass [271.7] | Pass [272.8] | Pass [272.1] |
| 5 GHz Up | Pass [272] | Pass [270.8] | Pass [270.4] |
| Maximum Connection Packet Loss (%) | | | |
| 2.4 GHz Dn | Pass [No loss] | Pass [No loss] | Pass [No loss] |
| 2.4 GHz Up | Pass [No loss] | Pass [No loss] | Pass [No loss] |
| 5 GHz Dn | Pass [No loss] | Pass [No loss] | Pass [No loss] |
| 5 GHz Up | Pass [No loss] | Pass [No loss] | Pass [No loss] |

Table 1: Maximum Connection Throughput Test result summary

Airtime Fairness

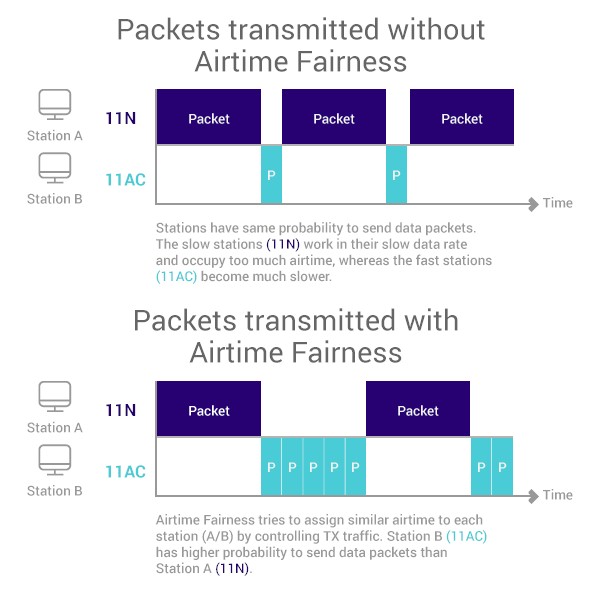

Airtime Fairness is a control you might come across buried deep in the Advanced Wireless settings of your router or, more likely, not. Here’s how TR-398 introduces the test:

Wi-Fi signal transmission can be seen as a multicast process since the STAs involved share the transmission medium. Air interface becomes a rare resource when dense connections or high throughput requests exist. Channel condition determines the MCS selection, therefore affecting the data throughput. In general, long distance to travel or obstacle penetration leads to larger attenuation, which makes the data rate in a low level. Occupying excessive air time of STA with small MCS will be unfair to the STAs with large MCS (here, assuming the QoS requirement is similar) when the air resources have already run out.

Written like only a committee could… To state it more clearly, Airtime Fairness generally describes techniques that try to ensure that slower devices don’t slow down faster devices. Implementations vary, but the general idea is to adjust an AP’s airtime scheduler so that faster devices get more airtime than they would otherwise. This is illustrated below.

Airtime Fairness explained

(graphic courtesy of TP-Link)
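A toy calculation shows why this matters. It assumes a simplified model with no contention or protocol overhead, and two hypothetical STAs that would get 100 and 10 Mbps alone on the air. If the scheduler gives each STA the same amount of data (frame fairness), the slow STA consumes most of the airtime; if it gives each STA the same share of airtime, one common Airtime Fairness policy, the fast STA keeps most of its speed.

```python
def frame_fair(rates_mbps):
    """Every STA moves the same amount of data, so slow STAs eat the airtime.

    Solves sum(T / r_i) = 1 for a common per-STA throughput T.
    """
    t = 1.0 / sum(1.0 / r for r in rates_mbps)
    return [t] * len(rates_mbps)


def airtime_fair(rates_mbps):
    """Every STA gets an equal share of airtime, so throughput scales with rate."""
    share = 1.0 / len(rates_mbps)
    return [share * r for r in rates_mbps]


rates = [100.0, 10.0]  # hypothetical fast and slow STAs (Mbps when alone on the air)
print([round(x, 1) for x in frame_fair(rates)])    # [9.1, 9.1]
print([round(x, 1) for x in airtime_fair(rates)])  # [50.0, 5.0]
```

In this model, total throughput rises from about 18 Mbps to 55 Mbps, at the cost of the slow STA dropping from about 9 to 5 Mbps.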

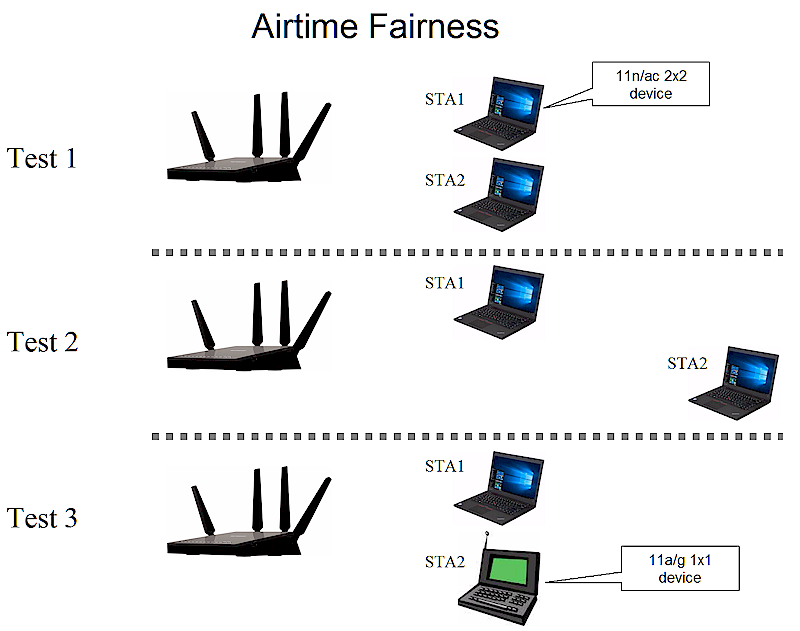

TR-398’s Airtime Fairness test (6.2.3) runs three scenarios shown below.

Test 1 has two two-stream STAs at equal strong-signal distance. Test 2 moves the second STA to a "medium" distance that BBF equates to adding 38 dB path loss in 2.4 GHz and 32 dB in 5 GHz. The STAs are configured as 802.11n devices for 2.4 GHz and 11ac devices for 5 GHz for these tests.

Test 3 puts both STAs back at strong signal distance, but changes the second STA to a single-stream "legacy" device (802.11g for 2.4 GHz, 802.11a for 5 GHz). Although TR-398 specs the "legacy" device as 802.11a/b/g, I configured the octoScope Pal for 11g for the 2.4 GHz test.

Each test runs traffic simultaneously to both STAs and measures throughput to each. As is TR-398’s standard method, tests are run separately on both bands.

Airtime Fairness test

The Airtime Fairness tests use Variation and Total Throughput benchmarks to judge performance. Basically, the Variation tests check how far the throughput of the reference STA (STA1) strays from the ideal case of two equal-capability STAs. In Test 1, each STA’s throughput may vary by +/- 5% from the mean of the two Test 1 throughputs, i.e. Mean(STA1_throughput_1, STA2_throughput_1). When the second STA is "moved" (Test 2) or replaced by a "legacy" STA (Test 3), STA1’s throughput may vary from that same mean by only +/- 15% in 2.4 GHz and +/- 25% in 5 GHz.

The Total Throughput tests check each test's combined throughput against minimums of 80, 54 and 50 Mbps for 2.4 GHz and 475, 280 and 230 Mbps for 5 GHz for Tests 1, 2 and 3, respectively.
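The Variation and Total Throughput checks reduce to a few comparisons. This sketch follows the limits described above; the function and dictionary names are hypothetical, not TR-398's. Feeding it the Linksys 2.4 GHz numbers from Table 2 (with STA2's Test 2 and 3 throughputs derived by subtracting STA1 from the table totals) reproduces that column's Pass, Pass, Fail, Fail variation results and three passing totals.

```python
from statistics import mean

# Allowed variation (%) from the Test 1 mean, and minimum totals for Tests 1-3 (Mbps).
VARIATION_PCT = {"2.4GHz": (5, 15), "5GHz": (5, 25)}  # (Test 1, Tests 2 and 3)
TOTAL_LIMITS = {"2.4GHz": (80, 54, 50), "5GHz": (475, 280, 230)}


def within(value, ref, pct):
    """True if value lies within +/- pct percent of ref."""
    return ref * (1 - pct / 100) <= value <= ref * (1 + pct / 100)


def airtime_fairness(band, sta1, sta2):
    """sta1, sta2: throughputs (Mbps) for Tests 1-3; sta2[2] is the 'legacy' STA."""
    ref = mean([sta1[0], sta2[0]])  # Mean(STA1_throughput_1, STA2_throughput_1)
    tight, loose = VARIATION_PCT[band]
    results = {
        "STA1_throughput_1": within(sta1[0], ref, tight),
        "STA2_throughput_1": within(sta2[0], ref, tight),
        "STA1_throughput_2": within(sta1[1], ref, loose),
        "STA1_throughput_3": within(sta1[2], ref, loose),
    }
    for test, limit in enumerate(TOTAL_LIMITS[band], start=1):
        results[f"total_{test}"] = sta1[test - 1] + sta2[test - 1] >= limit
    return results


linksys = airtime_fairness("2.4GHz", sta1=[53.7, 86.2, 93.0], sta2=[51.2, 10.9, 3.2])
for check, ok in linksys.items():
    print(check, "Pass" if ok else "Fail")
```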

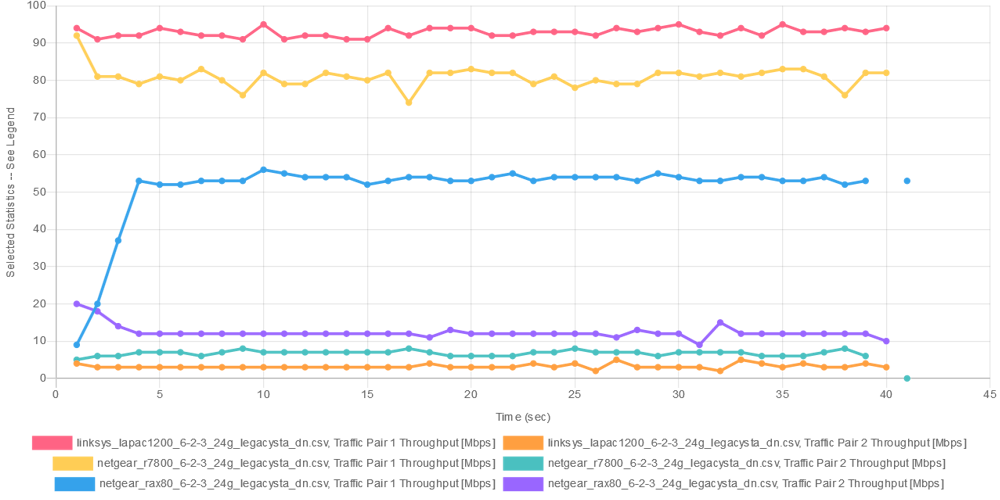

The 2.4 GHz results in Table 2 show the RAX80 failing both 5% limit tests and each product failing one or more of the 15% limit tests.

| | | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|---|
| +/- 5% limits (Mbps) | | 49.8 to 55.1 | 53.7 to 59.3 | 56.9 to 62.9 |
| +/- 15% limits (Mbps) | | 44.6 to 60.3 | 48 to 65 | 50.9 to 68.9 |
| Variation (Mbps) | STA1_throughput_1 | Pass [53.7] | Pass [56.5] | Fail [55.7] |
| | STA2_throughput_1 | Pass [51.2] | Pass [56.5] | Fail [64.2] |
| | STA1_throughput_2 | Fail [86.2] | Pass [57.7] | Fail [110.2] |
| | STA1_throughput_3 | Fail [93] | Fail [80.7] | Pass [51.8] |
| Totals (Mbps) | STA1_throughput_1 + STA2_throughput_1 | Pass [104.9] | Pass [113] | Pass [119.9] |
| | STA1_throughput_2 + STA2_throughput_2 | Pass [97.1] | Pass [113.5] | Pass [117.47] |
| | STA1_throughput_3 + STA3_throughput_3 | Pass [96.2] | Pass [87.23] | Pass [63.8] |

Table 2: Airtime Fairness Test result summary – 2.4 GHz

I used octoScope’s Expert Analysis tool to generate some plots to gain insight into the failures. Sharp-eyed readers will notice the 40-second test time instead of the 120 seconds TR-398 specifies for most of its tests. I’ve been using shorter test times while developing the scripts to speed things along.

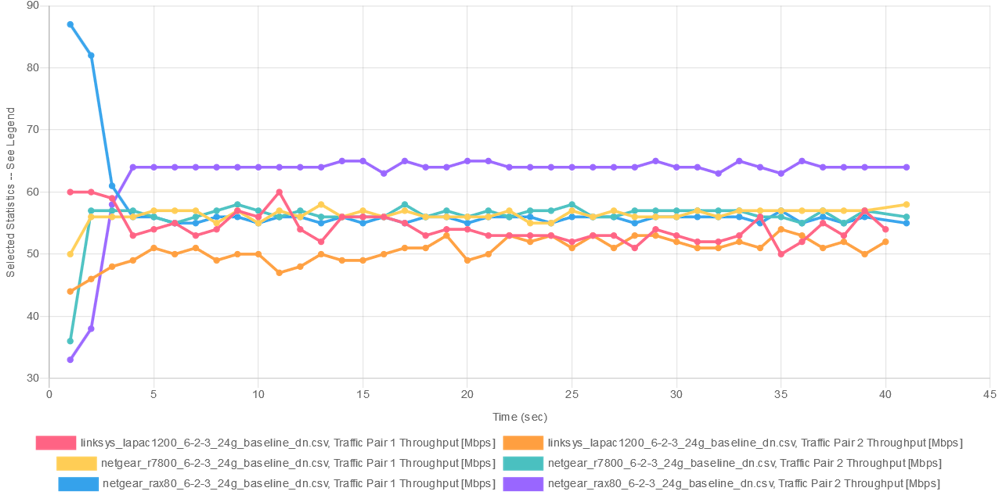

The Test 1 plot below shows a wider gap between the RAX80’s two throughput lines, which accounts for its failures on the first two +/- 5% limit tests. Note these are borderline failures, and +/- 5% variation is pretty tight given the nature of the Wi-Fi beast.

Airtime Fairness – 2.4 GHz "Test 1" reference

The 5 GHz results in Table 3 show all three products failing the 25% variation limit for STA1’s throughput in Test 3 (i.e. with the "legacy" STA sharing the channel), compared to the mean of STA1 and STA2’s Test 1 throughputs. The NETGEAR RAX80 also fails the total throughput test for the total of STA1 and "legacy" STA 3…by a lot.

| | | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|---|
| +/- 5% limits (Mbps) | | 271.2 to 299.8 | 319.6 to 353.3 | 299 to 330.4 |
| +/- 25% limits (Mbps) | | 214.1 to 356.9 | 252.3 to 420.6 | 236 to 393.4 |
| Variation (Mbps) | STA1_throughput_1 | Pass [289.9] | Pass [333.7] | Pass [309.4] |
| | STA2_throughput_1 | Pass [281.1] | Pass [339.2] | Pass [320.0] |
| | STA1_throughput_2 | Pass [331.4] | Pass [333.6] | Pass [314] |
| | STA1_throughput_3 | Fail [384.3] | Fail [539.67] | Fail [16.7] |
| Totals (Mbps) | STA1_throughput_1 + STA2_throughput_1 | Pass [571] | Pass [673.9] | Pass [629.4] |
| | STA1_throughput_2 + STA2_throughput_2 | Pass [534.5] | Pass [675.6] | Pass [612] |
| | STA1_throughput_3 + STA3_throughput_3 | Pass [391.4] | Pass [544.7] | Fail [37.9] |

Table 3: Airtime Fairness Test result summary – 5 GHz

Here’s the 5 GHz Test 1 reference plot for STA1 and STA2. All three products show a good amount of variation, but not enough to fail the 5% limit tests.

Airtime Fairness – 5 GHz "Test 1" reference

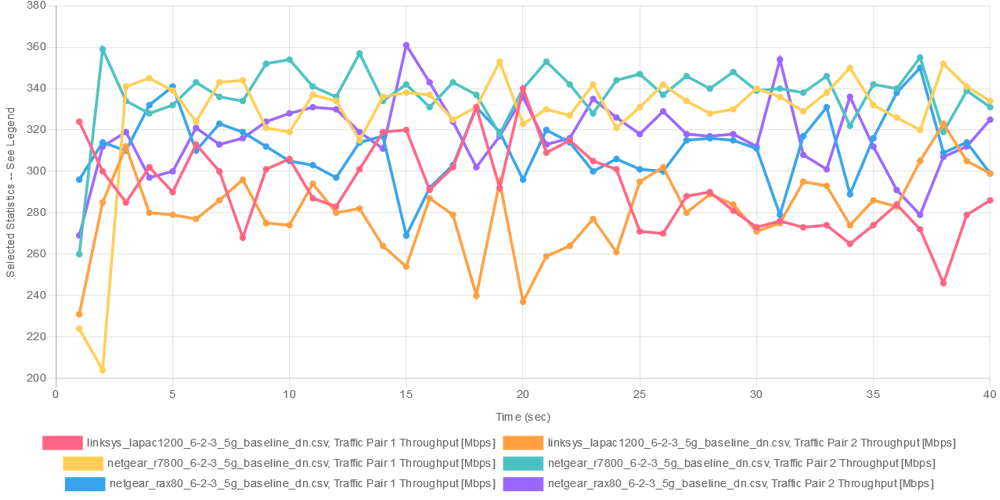

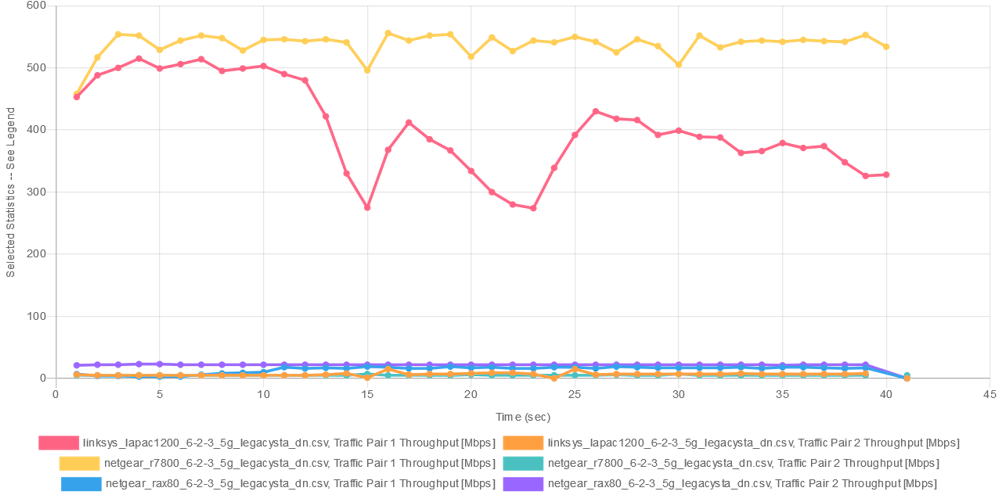

This plot shows the three products for the 2.4 GHz "legacy" test run. "Pair 2" is the "legacy" STA in each run and it appears the faster STAs are not being held back by the slower ones.

Airtime Fairness – 2.4 GHz reference + "legacy"

The 5 GHz "legacy" plot is different. The Linksys and NETGEAR R7800 properly allocate more bandwidth to the faster 802.11ac STAs, but the NETGEAR RAX80 does not. Both the 11ac and 11a STAs are down in the mud. This is why the RAX80 fails the STA1_throughput_3 variation and STA1_throughput_3 + STA3_throughput_3 total throughput tests.

Airtime Fairness – 5 GHz reference + "legacy"

One flaw I found in this benchmark is that there are no tests verifying that the second station in Tests 2 and 3 has non-zero throughput. The total throughput tests don’t catch this either, since STA1 alone can exceed their limits. During test debug, I found the second STA in these tests could become disassociated or have essentially zero throughput and the test could still pass.
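A simple guard would close that hole. This is a hypothetical addition, not part of TR-398, and the 1 Mbps floor is an arbitrary placeholder:

```python
def starved_tests(sta2_by_test, floor_mbps=1.0):
    """Return the tests (2 and/or 3) in which the second STA was effectively idle.

    sta2_by_test maps test number -> second STA's throughput in Mbps. A STA
    that disassociated may report nothing, so a missing entry counts as 0 Mbps.
    """
    return [test for test in (2, 3) if sta2_by_test.get(test, 0.0) < floor_mbps]


print(starved_tests({1: 51.2, 2: 0.0, 3: 3.2}))  # [2]
print(starved_tests({1: 51.2, 2: 10.9}))         # [3]
```

A run flagged this way would be reported as invalid even if its totals pass.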

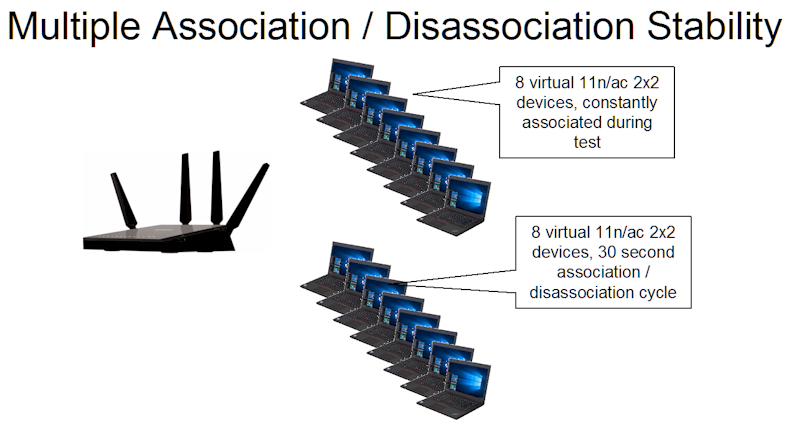

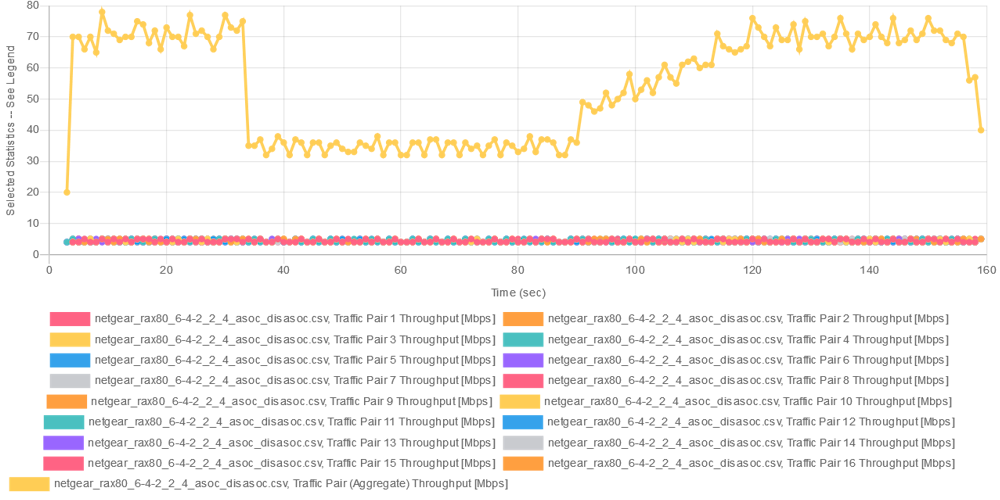

Multiple Association / Disassociation Stability

The Multiple Association / Disassociation Stability test (6.4.2) aims to see how well throughput on multiple STAs is maintained while other STAs connect and disconnect. Sixteen 2×2 vSTAs are first associated, with 8 of the 16 running 4 Mbps of UDP traffic in 2.4 GHz or 8 Mbps in 5 GHz. Traffic runs for 30 seconds, then the 8 vSTAs that are not running traffic are disassociated. After 30 seconds, those 8 vSTAs are reassociated and the test ends about 10 seconds later. Throughput and packet loss are measured for each vSTA throughout the test.

Multiple Association / Disassociation Stability test

For the test to pass, none of the traffic pairs can drop packets and each pair must maintain at least 99% of its programmed throughput for the duration of the test.
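Per pair, the pass rule is easy to express. In this sketch the input layout (per-interval throughput samples plus a lost-packet count for each vSTA) and the sample values are hypothetical. It judges each pair by its average throughput, as the histograms below do; replacing `mean` with `min` would give a stricter reading of "for the duration of the test".

```python
from statistics import mean


def assoc_stability_verdict(pairs, programmed_mbps):
    """pairs maps vSTA name -> (throughput samples in Mbps, lost packets).

    A pair passes if it lost no packets and averaged at least 99% of its
    programmed rate. Returns (all_passed, list_of_failing_pairs).
    """
    floor = 0.99 * programmed_mbps  # 3.96 Mbps for the 4 Mbps 2.4 GHz streams
    failing = [name for name, (samples, lost) in pairs.items()
               if lost > 0 or mean(samples) < floor]
    return not failing, failing


print(assoc_stability_verdict(
    {"vsta01": ([4.0, 4.0, 3.98], 0), "vsta02": ([4.0, 3.5, 3.9], 0)},
    programmed_mbps=4))
# (False, ['vsta02'])
```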

Table 4 shows all products pass the test. I’m not surprised, since the traffic loads of 32 Mbps @ 2.4 GHz and 64 Mbps @ 5 GHz are pretty light.

| | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|
| Throughput (Mbps) | | | |
| 2.4 GHz Dn | Pass | Pass | Pass |
| 5 GHz Dn | Pass | Pass | Pass |
| Packet Loss (%) | | | |
| 2.4 GHz Dn | Pass [No loss] | Pass [No loss] | Pass [No loss] |
| 5 GHz Dn | Pass [No loss] | Pass [No loss] | Pass [No loss] |

Table 4: Multiple Association / Disassociation Test summary

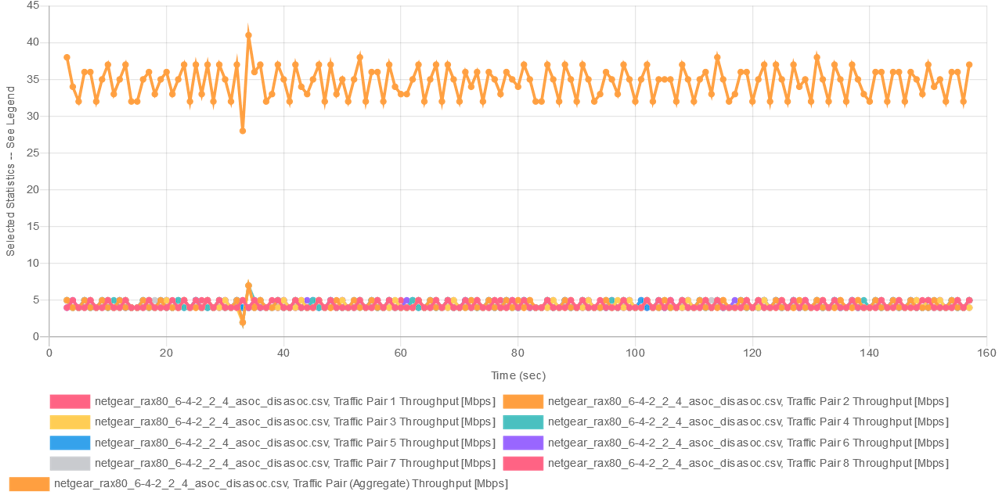

Here’s what the results from one of the tests look like. But you can’t really see whether each STA is meeting its test limit.

Multiple Association / Disassociation Stability test

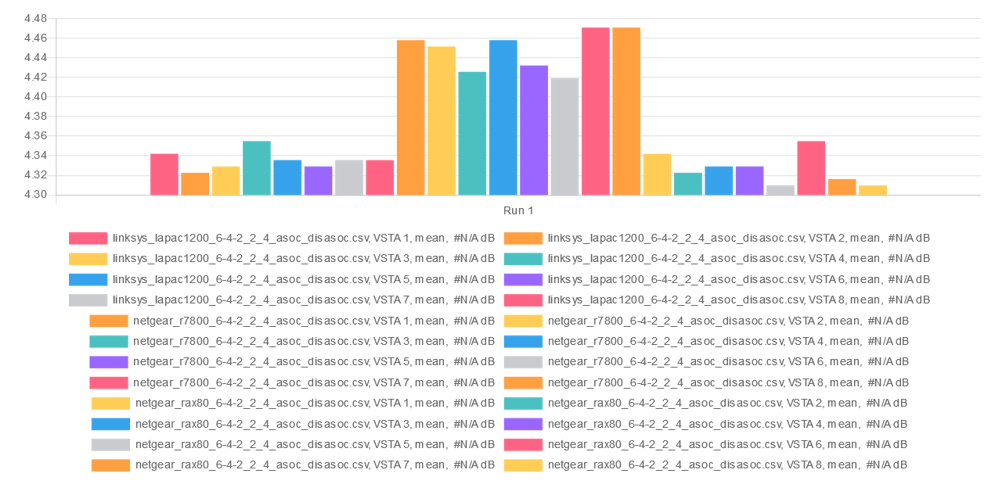

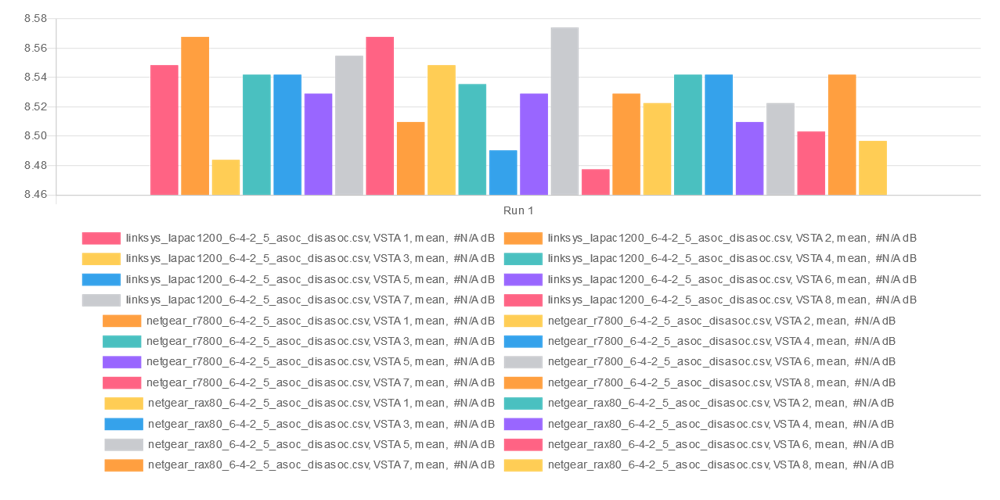

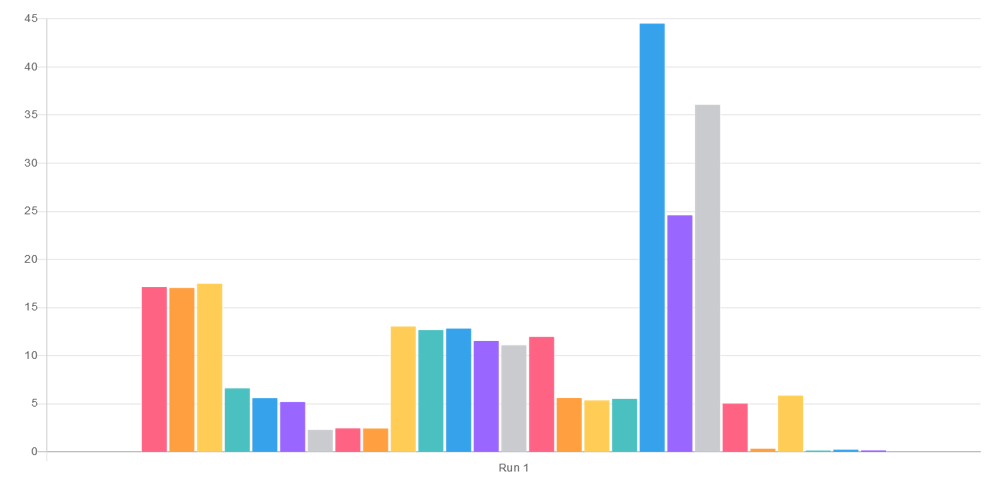

So, turning again to the Expert Analysis tool, I generated a histogram of each STA’s average throughput for all three products. Each STA’s throughput must be at least 99% of 4 Mbps (3.96 Mbps), and since every STA clears that bar, all STAs pass.

Multiple Association / Disassociation Stability test – Average STA throughput histogram – 2.4 GHz

Similar results are shown for the 5 GHz test; all good.

Multiple Association / Disassociation Stability test – Average STA throughput histogram – 5 GHz

I’ve also created an alternative version of this test that runs traffic on all pairs. This produces a more interesting plot that shows total throughput increasing as STAs reconnect. The reconnections don’t seem to affect the throughput of the other pairs, however; all traffic pairs still pass.

Multiple Association / Disassociation Stability test – traffic on all pairs

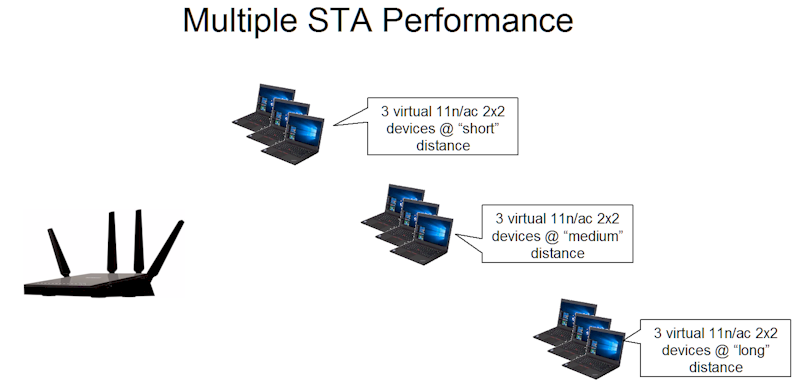

Multiple STA

The last test I’m showing is the Multiple STAs Performance test (6.4.1). It is similar to the Airtime Fairness test in that it uses mixed traffic from multiple devices. This time, however, three sets of three equal-capability STAs (2×2 11n for 2.4 GHz, 2×2 11ac for 5 GHz) are placed at different "distances" from the DUT. This test also differs from the Airtime Fairness test in that all the STAs are virtual STAs.

The "distances" are defined as:

- 10 dB of additional path loss in both bands for "short" distance

- 38 dB of additional path loss in 2.4 GHz and 32 dB in 5 GHz for "medium" distance

- 48 dB of additional path loss in 2.4 GHz and 42 dB in 5 GHz for "long" distance

Multiple STA Performance test

Unlimited TCP traffic is run to all three "short" distance STAs in downlink, then uplink. Then the "medium" distance STA trio is associated and the downlink and uplink tests are run again. Finally, the "long" distance STAs are connected and the tests are run again. Total throughput in each direction is calculated for each test run and compared to the limits in the table below. Note that each group of STAs remains associated as others are added.

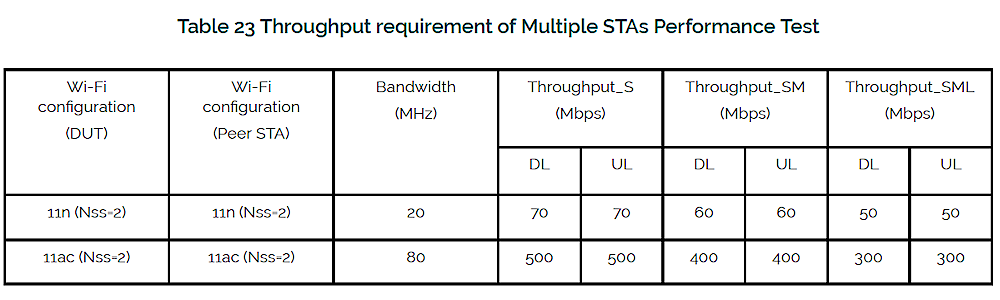

Test limits are shown in the Table 23 graphic below.

Multiple STA Performance test limits
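Because the test sequence is cumulative, it's easy to lose track of which vSTAs each total covers. A sketch of the ordering and of how each stage's total is formed; the group names and helper functions are illustrative, and per-vSTA throughputs would come from the traffic tool.

```python
GROUPS = ("short", "medium", "long")  # three vSTAs per group, added one group at a time


def test_stages():
    """Yield (associated groups, direction) in test order; earlier groups stay associated."""
    for n in range(1, len(GROUPS) + 1):
        for direction in ("downlink", "uplink"):
            yield GROUPS[:n], direction


def stage_total(per_sta_mbps, associated):
    """Sum throughput over every vSTA in the currently associated groups."""
    return sum(sum(per_sta_mbps[group]) for group in associated)


for groups, direction in test_stages():
    print("+".join(groups), direction)
```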

The 2.4 GHz part of this test was a challenge for the two-stream LAPAC1200. Section 5.2.2 of TR-398 allows DUT and STAs to be separated by a specific distance (for large-chamber or open-air testing), or by path loss for small(er) chamber testing. The usual default distance is 2 meters, which equals 46 dB of path loss in 2.4 GHz and 53 dB for 5 GHz. The requirements for this test add the "distance" path losses shown above to these starting values.
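Those default-distance numbers are consistent with the standard free-space path loss formula, FSPL = 20·log10(4πdf/c). A quick check, assuming channel 6 (2437 MHz) and channel 36's 5180 MHz center frequency; using the 80 MHz channel's 5210 MHz center also rounds to 53 dB.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss in dB: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


print(round(fspl_db(2.0, 2.437e9)))  # 46  (channel 6)
print(round(fspl_db(2.0, 5.18e9)))   # 53  (channel 36)
```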

The other factor to be considered is the maximum input level of -30 dBm for the octoScope Pals. With the Revision 10 test setup, I found I needed to add 10 dB of attenuation for 2.4 GHz tests to avoid overloading the octoScope Pal when testing four-stream devices. To keep a level playing field, that means that all devices are tested with that additional attenuation.

The Broadband Forum testbed has somewhat lower path loss, so I’ve found I need to increase the added attenuation to between 15 and 20 dB so that four-stream APs don’t overload the Pal.

But when I used 20 dB to set the "default distance" for 2.4 GHz, this pushed levels for the "medium" and "long" distance STAs down to the point where vSTAs would disassociate during the test.

The upshot of all this is that the Linksys’ results in Table 5 were obtained using 10 dB of starting attenuation vs. 20 dB for the two four-stream NETGEAR products. I don’t intend for this to be the case once my test implementation is done. But it’s been a useful exercise to show the challenge TR-398 users will have trying to compare results among the wide range of test setups allowed.
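To see why 20 dB of starting attenuation caused trouble, here's a simplified loss budget. It assumes the starting attenuation simply adds to the 2 m default-distance loss and the TR-398 "distance" losses listed earlier; the testbed's other fixed losses aren't included, so these are relative figures rather than measured levels.

```python
DEFAULT_LOSS_DB = {"2.4GHz": 46, "5GHz": 53}  # 2 m default distance
DISTANCE_LOSS_DB = {                          # TR-398 added path loss per "distance"
    "2.4GHz": {"short": 10, "medium": 38, "long": 48},
    "5GHz": {"short": 10, "medium": 32, "long": 42},
}


def total_loss_db(band, distance, starting_atten_db):
    """Default-distance loss + 'distance' loss + extra testbed attenuation, in dB."""
    return DEFAULT_LOSS_DB[band] + DISTANCE_LOSS_DB[band][distance] + starting_atten_db


for atten in (10, 20):
    losses = [total_loss_db("2.4GHz", d, atten) for d in ("short", "medium", "long")]
    print(f"{atten} dB start: {losses}")
# 10 dB start: [66, 94, 104]
# 20 dB start: [76, 104, 114]
```

Under these assumptions, raising the starting attenuation from 10 to 20 dB pushes the 2.4 GHz "long" vSTAs from 104 to 114 dB of loss, the same 10 dB shift that dropped the medium and long vSTAs to the point of disassociating.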

Table 5 shows 2.4 GHz results. All three products did pretty well, with only the R7800 having one failure.

| | | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|---|
| Downlink Throughput (Mbps) | Short | Pass [109.6] | Fail [59.9] | Pass [120.2] |
| | Short+Medium | Pass [85.4] | Pass [115.3] | Pass [118.7] |
| | Short+Medium+Long | Pass [73.9] | Pass [87.9] | Pass [112.5] |
| Uplink Throughput (Mbps) | Short | Pass [81.5] | Pass [109.7] | Pass [105.6] |
| | Short+Medium | Pass [69.5] | Pass [113] | Pass [73.3] |
| | Short+Medium+Long | Pass [53.3] | Pass [94.9] | Pass [71.2] |

Table 5: Multiple STA Performance – 2.4 GHz

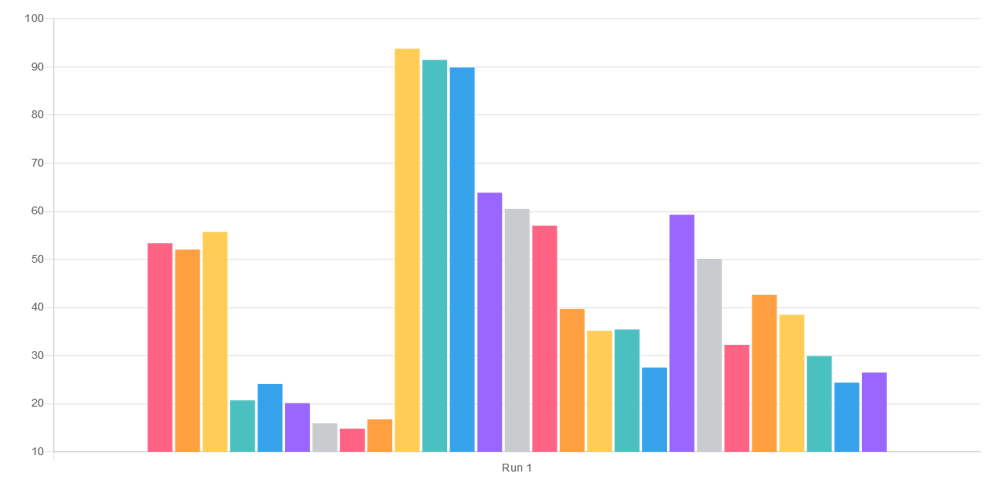

To get some insight into the 2.4 GHz downlink results, let’s go to the histogram plots. I removed the legend to increase the bar size for easier viewing. Linksys LAPAC1200, NETGEAR R7800 and NETGEAR RAX80 are plotted left to right. Each product includes short, medium and long distance throughput for three vSTAs. For the following plots, the test limit is compared against the sum of all nine test results for each product.

The plot below is for the 2.4 GHz downlink Short+Medium+Long test. It shows that there is more than one way to exceed the 50 Mbps bar for this test.

Multiple STA Test – 2.4 GHz downlink – short+medium+long test

Table 6 contains the 5 GHz test summary. The Linksys AP fails the most tests, so we’ll look into that more closely in a bit.

| | | Linksys LAPAC1200 | NETGEAR R7800 | NETGEAR RAX80 |
|---|---|---|---|---|
| Downlink Throughput (Mbps) | Short | Fail [456.6] | Pass [692.3] | Pass [624.9] |
| | Short+Medium | Fail [329.4] | Pass [660.4] | Pass [496.8] |
| | Short+Medium+Long | Fail [265.7] | Pass [562.9] | Fail [281.5] |
| Uplink Throughput (Mbps) | Short | Fail [448.9] | Pass [678.8] | Pass [618.1] |
| | Short+Medium | Fail [322.4] | Pass [599.9] | Pass [576.3] |
| | Short+Medium+Long | Pass [336.5] | Pass [506.7] | Fail [511.8] |

Table 6: Multiple STA Performance – 5 GHz

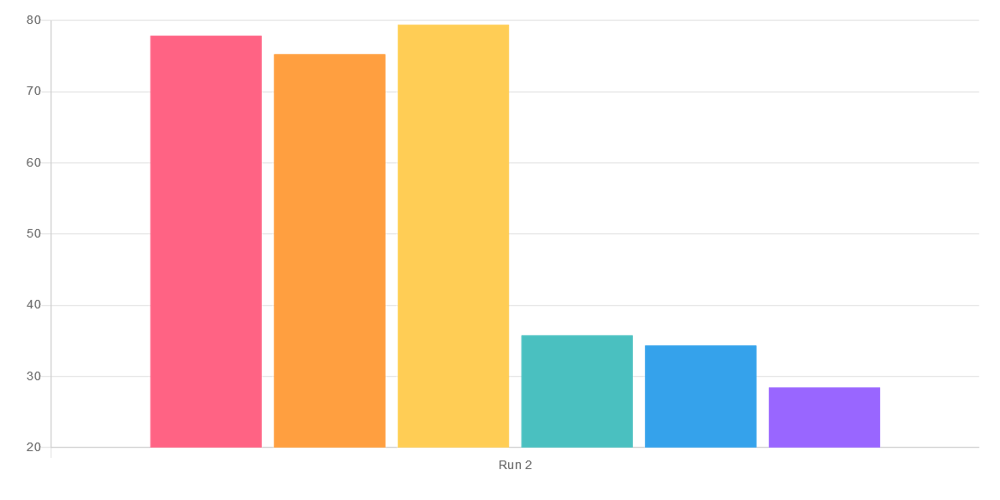

Here again are the 5 GHz downlink Short+Medium+Long test results. Both the Linksys and NETGEAR RAX80 fail to reach the 300 Mbps minimum total throughput required to pass this test, while the NETGEAR R7800 easily exceeds it.

Multiple STA Test – 5 GHz downlink – short+medium+long test

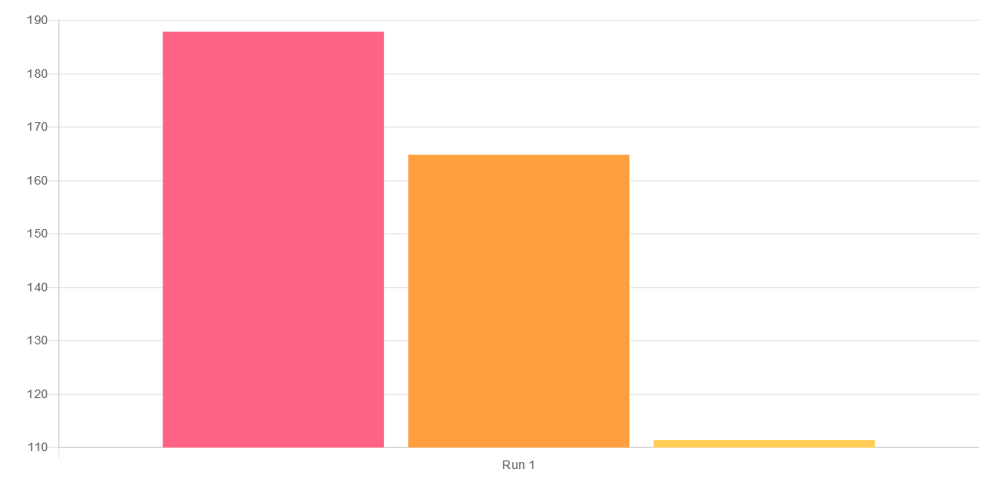

Looking again at the Linksys, here’s what the short+medium downlink results look like.

Multiple STA Test – 5 GHz downlink – short+medium test

Finally, just the short test results. That third vSTA wasn’t doing very well.

Multiple STA Test – 5 GHz downlink – short test

Closing Thoughts

TR-398 is by no means a comprehensive Wi-Fi performance test suite. But the fact that it exists at all is a major accomplishment for the Wi-Fi industry. So kudos to the Broadband Forum for taking this on and seeing it through to release.

Now that a standard exists, I’ll be incorporating some of its tests into an upcoming SmallNetBuilder Wireless Test process. But my quest for a good way to tell whether a $600 Wi-Fi 6 (draft 11ax) router really delivers more value than a $200 top-of-the-line Wi-Fi 5 (11ac) router continues.